Leaderboard

Popular Content

Showing content with the highest reputation on 05/31/20 in all areas

-

2 points

-

OK. There is a lot that is not quite right in this, so I'll start with the more obvious ones and we can see where we go from there...

Dark energy is not responsible for the expansion of space. We knew, from both theory and evidence, that the universe was expanding for nearly 100 years before dark energy was discovered. Dark energy is a (hypothetical) explanation for the observed accelerating rate of expansion. (It is the simplest way of mathematically explaining the acceleration. Whether it is correct or not is still to be seen.)

That is determined by things like the average energy density of the universe. Dark energy has caused it to increase slightly in the last few billion years, but it would have a very similar value without dark energy. More accurately, the more dark energy the faster the Hubble constant changes.

Dark energy is (I think - we are skirting the limits of my knowledge here!) a scalar field and the same everywhere. But I'm not sure we have enough data to be absolutely certain about that.

The energy density of dark energy is very, very low. It is not going to have any significant effect on a black hole. (Calculating what the effect of a varying external energy field would be on the form of a black hole is probably a difficult problem that can only be solved by simulation.)

Also, the expansion of space only takes place in the large areas of space between galaxies - because it only happens where there is a uniform mass distribution. There is no expansion of space inside galaxies (or even clusters of galaxies).

2 points

-

This is irrelevant, as it still cannot model tidal effects, for reasons already explained numerous times. The necessary information content just isn’t there in a scalar field, and it won’t magically appear by taking the gradient.

I didn’t mention anything about cosmology. The FLRW metric describes the interior of any matter distribution that is homogeneous, isotropic, and only gravitationally interacting. Of course it is most often used as a cosmological model, but it doesn’t have to be.

The frustrating part about this is that you are simply ignoring most of the things we say to you, which makes me feel like I’m wasting my time with this. Also, claiming that you have “explained” something when in fact you haven’t is really frustrating. The other thing is that you still haven’t presented an actual model; you just keep verbally describing an idea in your head. There is nothing wrong with that in itself; it is in fact commendable that you spend time thinking about these issues. Nonetheless, until you write down a mathematical model, you can’t be sure just what the implications are - you obviously think you are right, but you won’t know either way until you actually run some numbers.

Then I don’t think you really understand what the term “gravity” actually means, because if you did, you would immediately see yourself that this idea of yours cannot work in the general case, and why. Just this one point is already enough: gravity is geodesic deviation. I’ll write it down formally for you:

\[\xi {^{\alpha }}{_{||\tau }} =-R{^{\alpha }}{_{\beta \gamma \delta }} \thinspace x{^{\beta }}{_{|\tau }} \thinspace \xi ^{\gamma } \thinspace x{^{\delta }}{_{|\tau }}\]

wherein \(\xi^{\alpha}\) is the separation vector between geodesics, and \(x^{\alpha}\) is the unit tangent vector on your fiducial geodesic.

Can you find a way to replace the dependence on the metric tensor in these equations with a dependence on just a scalar field and its derivatives, in such a way that the same physical information is captured? If, and only if, you can do so, then you might be onto something with your idea.

2 points

-

Mathematica uses this exact sequence as a random number generator for large integers. Does that mean it's truly random? I guess that's a question for the philosophy of maths and above my pay grade. But it's certainly impossible to predict - else the prize would have been claimed and Mathematica would have to stop using it as a random number generator.

In terms of free will, I'm not sure how a stochastic system offers a better solution than a determined one. That we can't predict an outcome doesn't imply free will (though if we could predict an outcome, that would seem to eradicate free will). If you made all your life decisions by the roll of a die, would you say you are exercising free will?

2 points

-

As I understand entropy and the Second Law of Thermodynamics, things stand as follows:

A closed system has a set Mi of possible microstates between which it randomly changes. The set Mi of all possible microstates is partitioned into macrostates, resulting in a partition Ma of Mi. The members of Ma are pairwise disjoint subsets of Mi, and their union is Mi. The entropy S(ma) of a macrostate ma in Ma is the logarithm of the probability P(ma) of ma happening, which is in turn the sum Sum_{mi ∊ ma}p(mi) of the probabilities p(mi) of all microstates mi in ma. The entropy s_{Ma}(mi) of a microstate mi with respect to Ma is the entropy of the macrostate in Ma to which mi belongs. The current entropy s_{Ma} of the system with respect to Ma is the entropy, with respect to Ma, of the microstate the system is currently in.

The Second Law of Thermodynamics simply states that a closed system is more likely to pass from a less probable state into a more probable one than from a more probable state into a less probable one. Thus, it is merely a stochastic truism. By thermal fluctuations, the fluctuation theorem, and the Poincaré recurrence theorem, and generally by basic stochastic laws, the system will someday go back to a low-entropy state. However, also by basic stochastic considerations, the time during which the system has a high entropy and is thus boring and hostile to life and information processing is vastly greater than the time during which it has a low entropy and is thus interesting and friendly to info-processing and life. Thus, there are vast time swathes during which the system is dull and boring, interspersed by tiny whiles during which it is interesting. Or so it might seem...

Now, what caught my eye is that the entropy we ascribe to a microstate depends on which partition Ma of Mi into macrostates we choose. Physicists usually choose Ma in terms of thermodynamic properties like pressure, temperature and volume. Let’s call this partition of macrostates “Ma_thermo”. However, who says that Ma_thermo is the most natural partition of Mi into macrostates? For example, I can also define macrostates in terms of, say, how well the particles in the system spell out runes. Let’s call this partition Ma_rune. Now, the system-entropy s_{Ma_thermo} with respect to Ma_thermo can be very different from the system-entropy s_{Ma_rune} with respect to Ma_rune. For example, a microstate in which all the particles spell out tiny Fehu-runes ‘ᚠ’ probably has a high thermodynamic entropy but a low rune entropy.

What’s very interesting is that at any point in time t, we can choose a partition Ma_t of Mi into macrostates such that the entropy s_{Ma_t}(mi_t) of the system at t w.r.t. Ma_t is very low. Doesn’t that mean the following?

At any time-point t, the entropy s_{Ma_t} of the system is low with respect to some partition Ma_t of Mi into macrostates. Therefore, information processing and life at time t work according to the measure s_{Ma_t} of entropy induced by Ma_t. The system entropy s_{Ma_t} rises as time goes on until info-processing and life based on the Ma_t measure of entropy can no longer work. However, at that later time t’, there will be another partition Ma_t’ of Mi into macrostates such that the system entropy is low w.r.t. Ma_t’. Therefore, at t’, info-processing and life based on the measure s_{Ma_t’} of entropy will be possible. It follows that information processing and life are always possible; it’s just that different forms thereof happen at different times.

Why, then, do we regard thermodynamic entropy as a particularly natural measure of entropy? Simply because we happen to live in a time during which thermodynamic entropy is low, so the life that works in our time, including us, is based on the thermodynamic measure of entropy.

Some minor adjustments might have to be made. For instance, it may be the case that a useful partition of Mi into macrostates has to meet certain criteria, e.g. that the macrostates have some measure of neighborhood and closeness to each other such that the system can pass directly from one macrostate only to the same macrostate or a neighboring one. However, won’t there still be many more measures of entropy equally natural as thermodynamic entropy? Also, once complex structures have been established, these structures will depend on the entropy measure which gave rise to them even if the current optimal entropy measure is a little different.

Together, these adjustments would lead to the following picture: During each time interval [t1, t2], there is a natural measure of entropy s1 with respect to which the system’s entropy is low at t1. During [t1, t2] – at least during its early part – life and info-processing based on s1 are therefore possible. During the next interval [t2, t3], s1 is very high, but another measure of entropy s2 is very low at t2. Therefore, during [t2, t3] (at least in the beginning), info-processing and life based on s1 are no longer possible, but info-processing and life based on s2 work just fine. During each time interval, the intelligent life that exists then regards as natural the entropy measure which is low in that interval. For example, at a time during which thermodynamic entropy is low, intelligent life (including humans) regards thermodynamic entropy as THE entropy, and at a time during which rune entropy is low, intelligent life (likely very different from humans) regards rune entropy as THE entropy.

Therefore my question: Doesn’t all that mean that entropy is low and that info-processing and life in general are possible for a much greater fraction of time than thought before?

1 point
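The partition-dependence described above can be sketched with a toy system. This is only an illustration under stated assumptions: the three-particle system, the uniform probabilities, and the two partitions (counting '1's as a stand-in for Ma_thermo, matching the pattern 101 as a stand-in for Ma_rune) are all hypothetical, and "entropy" here follows the post's own definition (the logarithm of the macrostate probability), not a standard thermodynamic formula.

```python
import math
from collections import defaultdict
from itertools import product


def microstate_entropies(probs, label):
    """Return {microstate: log P(macrostate containing it)} for one partition.

    probs: dict mapping each microstate mi to its probability p(mi)
    label: function mapping a microstate to its macrostate label (defines Ma)
    """
    macro_prob = defaultdict(float)
    for mi, p in probs.items():
        macro_prob[label(mi)] += p  # P(ma) = sum of p(mi) over mi in ma
    return {mi: math.log(macro_prob[label(mi)]) for mi in probs}


# Hypothetical toy system: three two-state particles, 8 equally likely microstates.
microstates = ["".join(bits) for bits in product("01", repeat=3)]
probs = {mi: 1 / len(microstates) for mi in microstates}

# Partition 1 (stand-in for Ma_thermo): group microstates by the number of '1's.
s_count = microstate_entropies(probs, lambda mi: mi.count("1"))

# Partition 2 (stand-in for Ma_rune): "spells the pattern 101" versus "does not".
s_pattern = microstate_entropies(probs, lambda mi: mi == "101")

mi = "101"
print(f"count partition:   s({mi}) = {s_count[mi]:.3f}")    # log(3/8)
print(f"pattern partition: s({mi}) = {s_pattern[mi]:.3f}")  # log(1/8)
```

The same microstate gets log(3/8) under the first partition and log(1/8) under the second, so its entropy value depends on the chosen partition and not only on the microstate itself.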

-

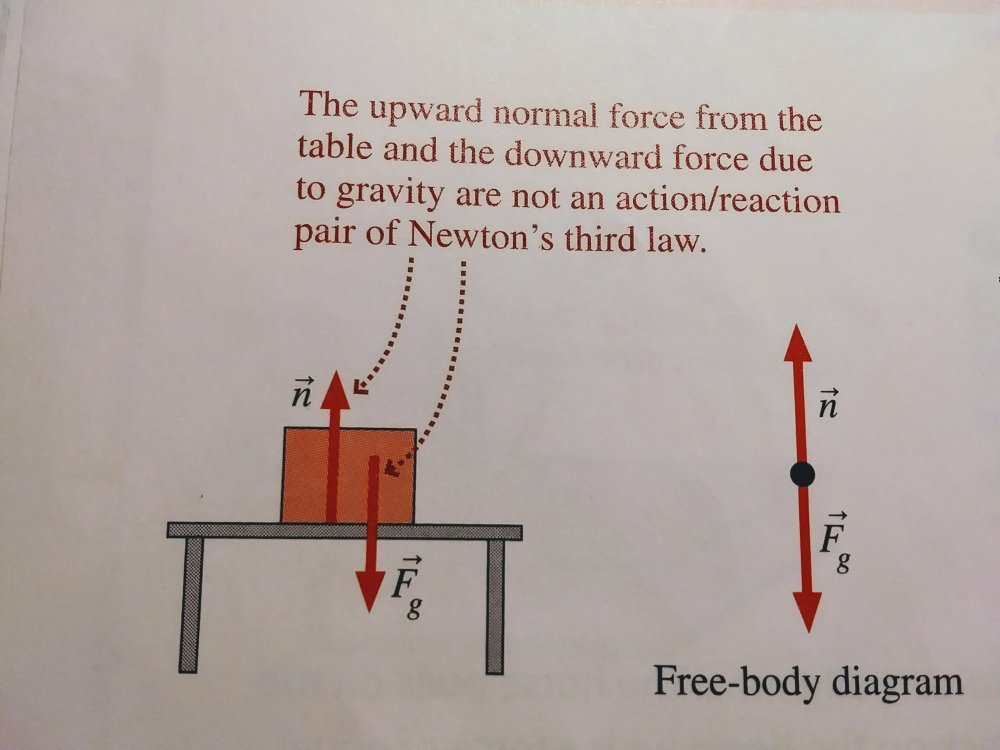

The diagram mentions gravity, which implies a third object: Earth. Here's a list of the third-law force pairs I see in the diagram, where a book is lying on a table standing on Earth.

First force -- Second force
Downward force of gravity on the book exerted by Earth -- Upward force of gravity on Earth exerted by the book
Downward force on the table exerted by the book -- Upward force on the book exerted by the table
Downward force on the ground exerted by the table -- Upward force on the table exerted by the ground (one pair at each leg of the table)
Downward force of gravity on the table exerted by Earth -- Upward force of gravity on Earth exerted by the table

Note: third law pairs do not have to be contact forces.

1 point

-

A good keyword for the search engine is the key. https://www.google.com/search?q=oricycles+mathematics You just had to add "mathematics" to clear the results of tricycles and similar articles and get what @joigus gave you above.

1 point

-

Next to a large, deep gravitational well, such as a black hole, expansion and dark energy would be insignificant. We only note their effects where gravity is so weak that expansion/dark energy exceeds the 'threshold' and its effects become apparent (we don't see expansion at solar system, galactic or even galactic cluster levels). This is on the order of 100s of megaparsecs of separation.

1 point

-

Communication with the past in your causal light cone is no problem, nor is it a paradox, even for classical mechanics, if I'm not mistaken. Notice: All systems are affected by their past. And because physics microscopically is reversible to a great extent (CP violation excluded), I surmise you could argue that something that you see as the present affecting the past is really you learning about aspects of the state that weren't obvious to you before (see below in relation to @MigL's argument).

The same question turned upside down is: Does the future affect the present? That would sound more paradoxical, but it's not. If you are within the future causal cone, there is no reason why you couldn't say in some sense that the future "affects" the past. It would be a contorted way of saying it, but it would be OK, I think, e.g.: What the Earth is today "determines" what the Earth was more than 4 billion years ago when it collided with planet Theia to form the Earth-Moon system.

The real problem would be communication with your "unreachable present," that is, outside your causal light cone. I don't think that's possible and I don't think that's what QM is saying. But if you feel confused, you're not alone. Some 5 years ago I saw a paper, accepted for publication in PRL, about the possibility of sending signals based on the observable to be measured.

All the problems people have been having for decades (and still are) have to do with (1st): QM not allowing you to think of certain properties A and B at the same time (incompatibility), even if you devise extremely clever ways to measure A in subsystem (1) and property B in subsystem (2) and then use conservation laws to infer the incompatible property in the other subsystem to circumvent Heisenberg's uncertainty principle. And (2nd): States being in general non-separable. But I wouldn't want to go off topic.

And quantum erasing really has to do with removing the effects of a measurement AFAIK, not with affecting the past. I would have to review down to the basics to say more. I agree with @Strange that retrocausality is a human-level perception of the results of the experiment. Something similar happens with so-called "non-locality." It goes more in the direction of not knowing the state precisely, as @MigL suggests, if I understand him correctly. And I don't understand @Kuyukov Vitaly's point. But he may well have a valid one. I do intuit he's thinking really farther afield.

1 point

-

@drumbo For the most part, mainstream plans and policies for the transition to zero emissions are NOT based on enforced energy poverty; high emissions infrastructure is not being prematurely closed without alternatives in place. Better policy, which accounts for potential inequality, is the best result emerging from studies that show potential for inequality.

Climate policy, for all the lies that are told about it by climate responsibility denying opponents, is not about reducing prosperity; it is about preserving it in the face of accumulating and economically damaging global warming. I note that it is often people who have shown no enduring interest in reducing poverty or inequality who argue against climate action on the basis that reduced fossil fuel use increases them - many of whom, from lives of extraordinary plenty, fiercely oppose accountability and affordable carbon pricing on their emissions, justified very often by claims that the science on climate must be wrong.

The assumption that the consequences and costs of continued rising emissions are not significant, and that emissions reductions are therefore not necessary, requires turning aside from the mainstream science based expert advice. For people in positions of trust and responsibility to fail to heed the expert advice is dangerously irresponsible and negligent. But alarmist - as in unfounded - economic fear of reducing and ultimately reaching near zero fossil fuel burning has been a potent argument used to impede the legitimate policies and actions of those seeking ways to net zero emissions, and the OP looks like an example. If you believe that a shift away from high emissions energy to low is a threat solely because it introduces change, then that is an alarmist position.

From the linked article - I would have to disagree with that last sentence from McGee - not using fossil fuels in the first place means avoided future emissions by alleviating energy poverty in those communities by other means than fossil fuels.

1 point

-

A book, object 'A', on a table, object 'B', exert equal and opposite forces on each other. They are an action-reaction pair. The book stays stationary due to the gravitational force of the Earth on the book, which, though equal and opposite to the force of the table on the book, isn't an action-reaction pair with it.

Let's say you had an identical twin standing on the South Pole, while you stood on the North Pole. Each of you would exert an equal but opposite force on the Earth. This would not be an example of an action-reaction pair.

1 point

-

ARPA's contribution was setting up the network. I presume, based on your reference, that ASCII was the code used in transmission. At that time IBM had a competing code called EBCDIC. I don't know the subsequent history.

1 point
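To illustrate what "competing code" means in practice, here is a minimal sketch that encodes the same text under ASCII and under one EBCDIC variant. The choice of code page 037 (Python's `cp037` codec) is an assumption made for the example; several EBCDIC code pages exist, and nothing above says which one any particular system used.

```python
# Encode the same text under ASCII and under one EBCDIC variant.
# Assumption: code page 037 (Python codec "cp037") stands in for "EBCDIC".
text = "ARPA"

ascii_bytes = text.encode("ascii")
ebcdic_bytes = text.encode("cp037")

print(ascii_bytes.hex(" "))   # 41 52 50 41
print(ebcdic_bytes.hex(" "))  # c1 d9 d7 c1

# Each code round-trips its own bytes, but the byte values differ,
# so two machines must agree on the code before exchanging text.
assert ebcdic_bytes.decode("cp037") == text
```

The same four letters map to entirely different byte values in the two codes, which is why a shared transmission code matters for a network.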

-

I've just seen one dP hanging there that you must clear out when dividing by dP. At, \[\left(\frac{\partial H}{\partial P}\right)_{T}=T\left(\frac{\partial S}{\partial P}\right)_{T}+V\]

1 point

-

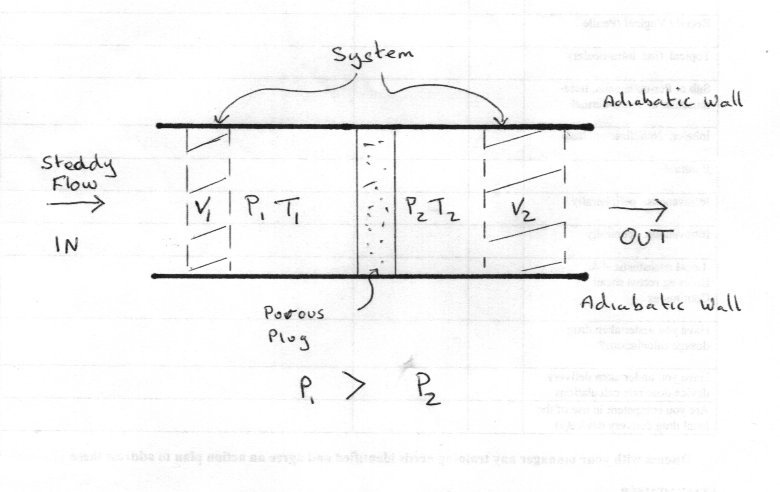

Part 1

The analysis of the Joule-Thomson or Joule-Kelvin flow or throttling is interesting because it demonstrates so many points in a successful thermodynamic analysis.

Appropriate system description
Distinction from similar systems
Identification of appropriate variables
Correct application of states
Distinction between the fundamental laws and the equations of state and their application

JT flow is a continuous steady state process. The system is not isolated or necessarily closed, but may be treated as quasi-closed by suitable choice of variables. It cannot be considered as closed, for instance, if we consider a 'control volume' approach, common in flow processes, since one of our chosen variables (volume) varies. By contrast, the Joule effect is a one off or one shot expansion of an isolated system.

So to start the analysis here is a diagram. 1 mass unit, e.g. 1 mole of gas within the flow, enters the left chamber between adiabatic walls and equilibrates to V1, P1, T1 and E1. Since P1 > P2 the flow takes this 1 mole through the porous plug into the right hand chamber where it equilibrates to V2, P2, T2 and E2. The 'system' is just this 1 mole of gas, not the whole flow. The system thus passes from state 1 to state 2.

The First Law can thus be applied to the change. Since the process is adiabatic, q = 0 and the work done at each state is PV work.

E2 - E1 = P1V1 - P2V2 since the system expands and does negative work.

Rearranging gives

E2 + P2V2 = E1 + P1V1

But E + PV = H or enthalpy. So the process is one of constant enthalpy or ΔH = 0. Note this is unlike ΔE, which is not zero. Since ΔE is not zero, P1V1 is not equal to P2V2. More of this later.

Since H is a state variable and ΔH = 0

[math]dH = 0 = {\left( {\frac{{\partial H}}{{\partial T}}} \right)_P}dT + {\left( {\frac{{\partial H}}{{\partial P}}} \right)_T}dP[/math]

[math]{\left( {\frac{{\partial H}}{{\partial T}}} \right)_P}dT = - {\left( {\frac{{\partial H}}{{\partial P}}} \right)_T}dP[/math]

[math]{\left( {\frac{{\partial T}}{{\partial P}}} \right)_H} = - \frac{{{{\left( {\frac{{\partial H}}{{\partial P}}} \right)}_T}}}{{{{\left( {\frac{{\partial H}}{{\partial T}}} \right)}_P}}}[/math]

Where [math]{\left( {\frac{{\partial T}}{{\partial P}}} \right)_H}[/math] is defined as the Joule-Thomson coefficient, μ.

However we actually want our equation to contain measurable quantities to be useful, so using

[math]H = E + PV[/math]

again

[math]dH = PdV + VdP + dE[/math]

[math]0 = TdS - PdV - dE[/math]

add previous 2 equations

[math]dH = TdS + VdP[/math]

divide by dP at constant temperature

[math]{\left( {\frac{{\partial H}}{{\partial P}}} \right)_T} = T{\left( {\frac{{\partial S}}{{\partial P}}} \right)_T} + V[/math]

But

[math]{\left( {\frac{{\partial S}}{{\partial P}}} \right)_T} = - {\left( {\frac{{\partial V}}{{\partial T}}} \right)_P}[/math]

so

[math]V = T{\left( {\frac{{\partial V}}{{\partial T}}} \right)_P} + {\left( {\frac{{\partial H}}{{\partial P}}} \right)_T}[/math]

combining this with our fraction for μ, and noting that the denominator [math]{\left( {\frac{{\partial H}}{{\partial T}}} \right)_P}[/math] is the heat capacity at constant pressure [math]{C_P}[/math], we have

[math]\mu = {\left( {\frac{{\partial T}}{{\partial P}}} \right)_H} = \frac{{T{{\left( {\frac{{\partial V}}{{\partial T}}} \right)}_P} - V}}{{{C_P}}}[/math]

Which gives a practical form with quantities that can be measured.

[math]\Delta T = \frac{{T{{\left( {\frac{{\partial V}}{{\partial T}}} \right)}_P} - V}}{{{C_P}}}\Delta P[/math]

Joule and Thomson found that the change in temperature is proportional to the change in pressure for a range of temperature restricted to the vicinity of T.

The next stage is to introduce the second law and the connection between different equations of state and their meanings or implications.

Edit: I think I've ironed out all the latex now but please report errors to the author.

1 point
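A quick sanity check on the final expression for μ, assuming (purely for illustration) that the 1 mole of gas obeys the ideal gas equation of state PV = RT:

[math]{\left( {\frac{{\partial V}}{{\partial T}}} \right)_P} = \frac{R}{P} = \frac{V}{T}[/math]

so

[math]\mu = \frac{{T \cdot \frac{V}{T} - V}}{{{C_P}}} = 0[/math]

Under that assumption the expression predicts no temperature change on throttling, so any measured ΔT would reflect departure from ideal behaviour.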

-

Claude Shannon (1916-2001), father of information theory, once teatime companion of Alan Turing

1 point

-

*shakes fist* I MEANT TO SAY THAT!! Can I get back to you on that... Did you know that De Morgan taught Ada Lovelace?

1 point

-

Yes I agree, Turing took one of the many steps along the development of IT; he did not invent the Von Neumann architecture (I wonder who did that?). Although originally a theoretical mathematician, Turing was also practical, as evidenced by rewriting the intensively theoretical Gödel theorems into a practical (if gedanken) setting.

But modern IT is about more than just one thing. It draws together many disparate aspects of technical knowhow. But it is difficult to list the many who contributed to the drawing together of the many different threads without missing someone out, or to know how far back to go in engineering history. For instance, what would the internet be like without the modern display screen? Should we include the development of these, or printers, or printing itself? Fax machines were first invented in 1843. What about control programs? Turing is credited with the introduction of 'the algorithm'. But Hollerith invented the punch card in 1884.

You need

The laws of combination logic (Boole, De Morgan)
The implementation of these in machines (Babbage, Hollerith, Felt)
Methods of communication between machines (Bell, Bain, Hertz, Marconi)
The formation of 'words' of data from individual combinations.
Standardisation of these words - the language (Bemer)
Moving from mechanical to electromechanical to electronic implementations of data structures (Von Neumann)

So here is my (draft) shortlist of the development, apologies for any omissions.

Babbage (1791 - 1871) the analytical engine
De Morgan (1806 - 1871) De Morgan's theorem.
Boole (1815 - 1864) Boolean algebra
Bain (1810 - 1877) the Fax machine
Bell (1847 - 1922) The telegraph telephone
Hollerith (1860 - 1929) punch cards
Berliner (1851 - 1929) microphone (inter machine communications) - 1876
Braun (1850 - 1918) cathode ray tube (inter machine communications) - 1897
Felt (1862 - 1930) Comptometer 1887
Von Neumann (1903 - 1957) Digital computer architecture.
Bemer (1920 - 2004) Standardisation of digital words. 1961

Interestingly, names beginning with the letter B predominate; perhaps that was Turing's crime - to start his name with the wrong letter.

1 point

-

I concur with Strange. Turing was mostly concerned with computability: whether a computing machine would stop (give an answer in a finite number of steps) or keep running forever. This work is very much related to the mathematics of decidability. The internet, I suppose, indirectly builds on his work, though. As with many other advances in science, everything is interconnected. I suppose Sensei and other users who know far more about computing can tell you more.

Turing was a pioneer. Computer architecture came later, as did A.I. The specific advances of the internet have more to do with communication networks than computing itself. Although servers are computers, of course.

1 point

-

Interesting question. I can’t think of anything. Most of his work was theoretical, on things like “what functions does a computer need; what problems can it solve”. I am not aware of anything specifically related to network connectivity. Someone who has studied CS might have more ideas.

1 point

-

I should be in bed, but it's Friday tomorrow so work can kiss my ass, I'm listening to this instead. Enjoy.

1 point

-

I bloody well hate this, yet for some reason watched it in its entirety...

1 point

-

Here's a couple of jams in honor of marijuana being legalized for recreational use in Michigan. I must admit, I'm tempted...

1 point