-

Posts

511 -

Joined

-

Last visited

Everything posted by TakenItSeriously

-

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

Since you mentioned it, the original basis for this solution was a problem I had solved in poker a few years back. By "solved" I mean that I bypassed it through estimation, using a concept I called range removal. The idea was to capture the last bits of information that are normally lost in the muck, in much the same way that card counting in blackjack recoups some of the information lost in the discard pile, except that instead of counting actual cards, I was counting ranges of hand states that would be either acted upon or folded. I used poker bots in a poker simulator I was also developing at the time, which eliminated the nondeterministic behavior of humans. You could program the bots to play much like humans, but their ranges would be determined by probabilities. Here is the weird part: I kept getting instances of negative weighted rank averages, which sounds a lot like negative probabilities in QM to me, since weighted averages are just percentages, as probabilities and equity calculations are. In theory I could only solve the problem by instantaneously exchanging range information between all hand ranges, which were entangled by the shuffle, i.e. in a theoretical hidden state from the players' perspectives, since ranges were also only in a theoretical state. I finally solved the problem by creating a real deck state that was used to deal the actual hands and an imaginary deck state that I used to create a biased deck state with weighted averages for ranks. The biased imaginary deck state could then be used to make revised adjustments in probability calculations. It worked, and I was actually able to reproduce by Monte Carlo simulation the same results I had calculated. The intriguing aspect was that I had simulated a partially entangled state, not completely in terms of equal opposites, but in terms of each hand range being impacted as soon as the next hand range is created, due to the finite number of cards.
I essentially did something similar for the expected results, which is the extent of what I am challenging: nothing about the experiments, other than taking into account that the final information is collected and analyzed only after complete information is shared by both parties, i.e. something spooky is still going on.

You do realize that I am not arguing against entanglement, but that my argument is about the correlations that Bell was basing his theory on?

-

So is that directed at something you assume I'm failing to understand within my thread? I assume it is, since you're posting an accepted argument in the Speculations forum. I don't mind responding in this thread, since you did me the kindness of not derailing my arguments. It's just that my premise precludes the set theory he used, which assumes an unbiased, even distribution of all possible combinations and excludes the impact of entanglement itself. It could be that just one of those points is true, btw. (Edited to clarify.)

I can actually give a simple explanation of how this could work, if you're willing to wait and see how it works in my thread, which I'm working on even now. But this gives you at least an idea of where I'm heading. My premise is that not all combinations are possible and that many are biased in their distribution. Furthermore, the rest of the set theory argument is offset by the entanglement itself, where information is shared in hindsight between Alice and Bob, despite the fact that they are unaware of it in real time (or proper time, if you like), which plays havoc with set theory. That's because the results are recorded in the hindsight of knowing all results, and that's where the information of entanglement was actually shared. I hope that makes sense. If I was wrong about my assumption, I apologize, but this is still my reply, as it's directed at the postulate you gave.

-

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

Sorry, again. I know I'm full of excuses, but in the process of going through this analysis again, I went through a kind of divergence, which I believe is a logic thing when dealing with proofs that challenge proofs. Think of it like proving a negative, only with proofs (IDK, an inverted negative?). Maybe it's better to think of it like this: logic, when done properly, reduces a problem to its simplest form. When dissecting logic (at least in this case), the reverse seems to be true: instead of the convergence, which is usually fun, the problem expands, which is a lot more work. So a lot more material was required to explain it well. It's like the Monty Hall problem on steroids, or like poker, where you can constantly improve but never know it all. I wanted to get a better idea of how much was going to go into it before getting back to you. Hopefully it will be... soon.

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

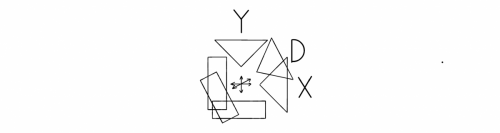

I just spotted a mistake. I had changed the detector labels from A, B, & C to Y, D, & X, which denote axes of measurement, where D stands for diagonal. I just noticed some places where I failed to replace the older labels with the newer ones, which I can see as a potential source of confusion in the future. Sorry, it's my fault, but the editing window for the OP has already passed. IDK, what's the best way to address this issue? It's not a major issue, but I could see it becoming annoying for readers who are wondering what people are referring to in their replies.

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

Swansont, Imatfaal, I will return to address your replies shortly, after I finish trimming down my response. I'm just letting you know as a courtesy, because I know that I can be slow to respond at times, as you're probably aware. If there is ever such a thing as TLDR syndrome, then I'm a candidate for the poster. I tend to waste far too much time making an excessive number of points before needing to go back and trim them down to something more manageable, which may just be a consequence of being too deeply immersed in a problem for too long. It often takes a few days for me to decompress to a level where my responses aren't going to go off on some logical tangent every time I try to write a quick response. It's not that the points are irrelevant, as they were all very relevant to my finally understanding the problem to the degree needed to find the solution, but I keep forgetting that what's relevant to me is not always relevant from a more mathematical perspective. I will try one more time to compose a more reasonable response before I need to grab some sleep. Sorry about the inconvenience, and I hope you can understand.

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

Ahh, now I think I understand Swansont's question. Problem 1 concerned a minor issue that I had noticed repeated in most videos or articles that either attempted to explain or tried to prove Bell's conclusions. They all seemed content to show only the eight bit combinations by themselves, as opposed to showing the bit combinations in relation to their entangled particles, which I felt was the far more significant information for the problem. It seems like a minor point, but it carries deeper consequences for how a problem of this type is treated later on. The most common mistake seems to be that people approach the solution from Bob's point of view alone, without fully regarding the effects that entanglement has on the problem. Its effect is particularly important because of how the problem was stated, asking whether the results are the "same or different" between observers. That is a very subtle detail, but one that drastically changes the scope of the problem, making it necessary to use even the obfuscated information that comes from entanglement, including any information that is revealed after Alice and Bob compare notes. I therefore made it a point to show that the 8 possible qubit combinations are not what is particularly important, as they would be in a typical quantum computer problem; it is their relationship as a single system of entangled pairs that is the important detail. Showing the qubit combinations always in their entangled pair state serves as a constant reminder that makes this less likely to be overlooked. In this case, their arguments all seem to look at the problem only as a three-by-three matrix and not from the perspective of causality, in which Alice's measurement has an effect on Bob's measurement, so that the matrix must be divided into a 1/3, 2/3 equity calculation instead.
Sorry, "equity calculation" is a poker reference that deals with the proper methods for hand range analysis, which is a very strong analogy for quantum mechanics. I just didn't know the proper QM term to use in its place. Equity calculations deal with how our choices are properly broken down for probability calculations, which is the key to reaching a proper conclusion. That was the point I was making as to why I thought of replacing the 3x3 matrix with two smaller problems of aligned and non-aligned measurements. Since this reply was so long, I will address the rest of your question on problem 1, as well as the discussion about problem 2, separately.

Proof that Bell's Inequalities are Equal

TakenItSeriously replied to TakenItSeriously's topic in Speculations

I'm not certain what you're asking. The three qubits I refer to are probably the three options of measurement of quantum spin angle for either the electron or positron entangled particles that are measured by Alice or Bob. However, I didn't think my objectives and setup were clearly defined, and I tried to clean those up a bit, if that was what you were asking. I hope this helps.

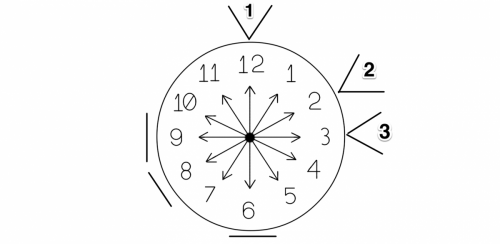

Bell's Inequalities: Proof of invalid assumptions v1.0.5

Objective

I intend to show that Bell's Inequalities are based on a number of false premises, which resulted in an incorrect derivation of the expected results that was the basis for the conclusion. It is not intended to prove anything about local realism, superposition states, or spooky action at a distance. In fact, I believe this solution logically shows that spooky information is still required. While I have no problem accepting the concept of superposition states, and believe that Bell's conclusion is probably more or less correct, I think the derivation of expected results in Bell's Theorem is not completely accurate. I believe that getting the details right is just as important as getting the conclusion right, because the details are going to be accepted as true along with the conclusion, and inaccurate details that are believed to be true may block other theories from achieving their goals. I hope that makes sense.

Setup

I will use examples of electron/positron entangled pairs with their quantum spin states measured at three angles, 0°, 60°, & 90°, in a single plane, as defined by John Stewart Bell's Theorem. Assume measurements of "spin up" (u) or "spin down" (d) are made using a Stern-Gerlach device. I intend to show that it is the overlapping of the measurements and the symmetry of their setup that drives the distribution of the measurement combinations, and not the randomness of the quantum spin states.

Problem 1:

There are typically 8 combinations of three qubit states, as defined by quantum spin as either up or down.
Since Bell's proof is based on presuming that opposite spin states exist at the point of entanglement, and the conclusions are based on the inconsistency of the expected results with test results, it would be appropriate to assign the spin combinations as particle pairs with opposite spin states, rather than considering only the 8 combinations with no consideration for the fact that the particles are entangled.

1) (uuu ddd)
2) (uud ddu)
3) (udu dud)
4) (udd duu)
5) (duu udd)
6) (dud udu)
7) (ddu uud)
8) (ddd uuu)

Please refer to post #7 to understand the significance of why I feel it's important to display the information as pairs instead of independent qubit combinations.

Problem 2:

According to the Copenhagen interpretation of Quantum Mechanics, all possible particle states exist simultaneously in what is called a superposition state. However, this does not mean that those superposition states must include impossible states or that they must be evenly distributed. One thing that should strike you as odd is the pair of spin combos 3 & 6:

(udu dud) (combo 3)
(dud udu) (combo 6)

Detector D has no region that is not also covered by detectors Y or X. Therefore, if detector Y and detector X give the same result, detector D should never be different. It's simply not a possible physical state of existence, given the way that the measurement angles were set up.

Problem 3:

This brings me to the third problem, which is that assuming an even distribution of the 8 combinations is not valid. The source of confusion is the premise that an electron's spin may be pointing in any random direction, so all possible orientations of the electron's spin are presumed to be equally likely. It would seem intuitive to expect that the combinations of spin from three different measurements would also be randomly distributed. However, that would not be a valid assumption.
When 3 detectors are overlapping and not arranged in a symmetrical fashion, their combinations of spin should become biased. The easiest way to see that this is true is by considering extreme cases. Assume that there are three measurements made in a single plane at the angles of 0°, 2°, and 3°. Then it should be abundantly clear that the vast majority of measurements should give the same result on all three detectors, such that nearly all measurements are either (uuu ddd) or (ddd uuu), with any other result being an exceptional case. On the other hand, if all 3 detectors were set up in a symmetrical fashion and not overlapping, then one could expect to see a more even distribution of results.

The fact that the measurements are overlapping means that certain spin combinations may not be possible. The fact that they are not arranged symmetrically means the distribution is likely biased. While I am making such assumptions based on a more classical approach, recall that this is the kind of presumption that is under test: whether the spin was always there before any measurements were made. BTW, while I don't know for sure, I assume that since the entanglement must adhere to the law of conservation of angular momentum, even Quantum Mechanics must presume that the spin states were always opposite between the entangled pairs, if not their actual orientation.

In order to test whether the assumption of an even distribution of all 8 quantum spin combinations is true, or a biased distribution is true, we need to break down the angles of spin to their lowest common denominator of 30° increments, pointing in 12 different directions of quantum spin angle in a clock-like distribution. Each hour on the clock would therefore represent an electron's quantum spin direction equally. I could then assign each clock position a probability of up/down for all three measurements, which should reveal any bias.
Detector Y
Range of up spin angles: 09:00-02:59:59
Range of down spin angles: 03:00-08:59:59

Detector D
Range of up spin angles: 11:00-04:59:59
Range of down spin angles: 05:00-10:59:59

Detector X
Range of up spin angles: 12:00-05:59:59
Range of down spin angles: 06:00-11:59:59

By compiling a list of all probable spin results at each angle, those results can be used to indicate any bias in the spin combo distribution. For instance, just based on the proximity of each angle to those of the devices, before even calculating any probabilities, we would see the following distribution as a first-order approximation.

| | 1:00 | 2:00 | 3:00 | 4:00 | 5:00 | 6:00 | 7:00 | 8:00 | 9:00 | 10:00 | 11:00 | 12:00 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 (Y) | u | u | d | d | d | d | d | d | u | u | u | u |
| 2 (D) | u | u | u | u | d | d | d | d | d | d | u | u |
| 3 (X) | u | u | u | u | u | d | d | d | d | d | d | u |
| Combo | uuu | uuu | duu | duu | ddu | ddd | ddd | ddd | udd | udd | uud | uuu |

Now, simply adding the number of occurrences that satisfy each of the three-measurement combos reveals the bias:

1) (uuu ddd) = 6/12
2) (uud ddu) = 2/12
3) (udu dud) = 0/12
4) (udd duu) = 4/12
5) (duu udd) = 4/12
6) (dud udu) = 0/12
7) (ddu uud) = 2/12
8) (ddd uuu) = 6/12

The sum of the coefficients adds up to 2 because two particles are involved. Since the results were symmetrical due to entanglement, we can just divide the results by two to show their approximate probabilities. After rearranging the order, we can see that a distribution curve is revealed:

1) (udu dud) 0/12
2) (uud ddu) 1/12
3) (udd duu) 2/12
4) (uuu ddd) 3/12
5) (ddd uuu) 3/12
6) (duu udd) 2/12
7) (ddu uud) 1/12
8) (dud udu) 0/12

Note: What this distribution means is that for every electron with spin combo 2 or 7, there exist twice as many electrons with combo 3 or 6 and 3x as many electrons with spin combo 4 or 5. There are no instances of combos 1 or 8, which, as I already mentioned, should not exist.
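The clock-face tally above can be reproduced mechanically. The following is only a minimal sketch of the post's first-order approximation, not a quantum mechanical calculation: it assumes each of the 12 hour positions is equally likely and that each detector reads "up" exactly on the hours listed in its range. The names `UP_HOURS`, `combo_at`, and `combo_counts` are made up for illustration.

```python
from collections import Counter

# Hours (1..12) on which each detector reads "up", taken from the ranges
# listed in the post (e.g. Detector Y is up from 09:00 through 02:59:59).
UP_HOURS = {
    "Y": {9, 10, 11, 12, 1, 2},
    "D": {11, 12, 1, 2, 3, 4},
    "X": {12, 1, 2, 3, 4, 5},
}


def combo_at(hour):
    """Return the three-letter Y/D/X result (e.g. 'duu') for one clock hour."""
    return "".join("u" if hour in UP_HOURS[det] else "d" for det in "YDX")


def combo_counts():
    """Count how many of the 12 hours produce each Y/D/X combination."""
    return Counter(combo_at(hour) for hour in range(1, 13))


if __name__ == "__main__":
    counts = combo_counts()
    for combo in ("udu", "uud", "udd", "uuu", "ddd", "duu", "ddu", "dud"):
        print(f"{combo}: {counts[combo]}/12")
```

Under these assumptions the counts come out as 3, 1, 2, 2, 1, 3 twelfths for uuu, uud, udd, duu, ddu, ddd, with udu and dud never occurring, which matches the rearranged list.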
Also notice the bias that combos 3 & 6 have over combos 2 & 7. This makes sense, since detector D is closer to detector X than to Y.

In order to get the expected results, we need to keep in mind that there is more to the experiment than simply looking at the results from Bob's perspective of taking 3 random measurements. Remember that the accounting of the results is relative, because what was counted is the ratio of the two measurements being either the same or different. It's a major distinction, because we now need to take the obfuscated entanglement effects into account very carefully, which includes not trusting our intuition in situations like this, since intuition is based only upon real-life experience.

Fortunately, I've had experience with solving a similar problem in poker a few years back, which actually revealed a quantum-like entanglement analog when I was finding a method for calculating the information lost in the muck of a poker hand. It involved a process of assigning ranges to every action and removing those ranges from the deck, which I called "Range Removal". I kept running into a causality issue that kept forcing me into paradox-like results. It took me a while to finally realize it was an entanglement issue dealing with the concept of using ranges to their fullest extent. Note that this is not a real effect, in that ranges are only an imaginary concept in poker, although to a pro, ranges are more real than the actual hand, at least in most online venues. Once I finally realized that ranges had both a real and an imaginary component, I was able to solve the problem.

In this case we must treat the problem as an equity calculation, with 1/3 of the results coming from measurements that align and 2/3 from measurements that don't align, while considering exactly how the entangled information will impact our relative results. That is a tricky concept because it's not within our normal experience.
Therefore it's a point where you cannot exactly trust your intuition or experience unless it's related to entanglement. When Alice and Bob have made measurements at the same angle, it's not important that we know which measurements were in alignment. It's only important to realize that they occur 1/3 of the time, and that we need to imagine the problem with the information we would have later on, after comparing notes. As long as we treat the problem in a comprehensive, consistent, and general way, it should be fine.

For the 1/3 case when measurements align, it's easy, since the results would be monotone, with all measurements 100% opposite. Since I've broken the problem down into 12 increments, we end up with a simple result:

(1/3)(24/12) = 8/12

Note that we have 24 instead of 12 instances, since we need to count results where Alice can have either up or down. Note also that I kept the 12 in the denominator because I know it will show up again.

In the case when measurements don't align, there is still information we need to consider. For one, it's no longer strictly a 3-qubit problem, because when measurements don't align we know that there is a result giving us information that eliminates a variable. Therefore we must assume that Alice has measured a result, and on a specific detector. To make sure we get them all, we need to check against all of her possible results. Note that the assumption of looking at two spin states covering the rest does not hold up under these conditions. Don't worry, it's only 3 measurements over 4 states. This is where the pairing of the particles comes in handy:

2) (uud ddu) 1/12
3) (udd duu) 2/12
6) (duu udd) 2/12
7) (ddu uud) 1/12

where:
The first particle represents Alice's results and the second particle is Bob's.
The measurements are shown in this order: (YDX YDX)

We need to check all of Alice's possible results to find the average result.
Alice: spin up in Detector A (Y)
2) (uud ddu) 1/12: "du" o s
3) (udd duu) 2/12: "uu" s s
3) (udd duu) 2/12: "uu" s s
same: 5, opposite: 1

Alice: spin up in Detector B (D)
2) (uud ddu) 1/12: "du" o s
6) (duu udd) 2/12: "ud" s o
6) (duu udd) 2/12: "ud" s o
same: 3, opposite: 3

Alice: spin up in Detector C (X)
6) (duu udd) 2/12: "ud" s o
6) (duu udd) 2/12: "ud" s o
7) (ddu uud) 1/12: "uu" s s
same: 4, opposite: 2

Alice: spin down in Detector A (Y)
6) (duu udd) 2/12: "dd" s s
6) (duu udd) 2/12: "dd" s s
7) (ddu uud) 1/12: "ud" o s
same: 5, opposite: 1

Alice: spin down in Detector B (D)
3) (udd duu) 2/12: "du" s o
3) (udd duu) 2/12: "du" s o
7) (ddu uud) 1/12: "ud" o s
same: 3, opposite: 3

Alice: spin down in Detector C (X)
3) (udd duu) 2/12: "du" s o
3) (udd duu) 2/12: "du" s o
2) (uud ddu) 1/12: "dd" s s
same: 4, opposite: 2

Totals: same: 24/12, opposite: 12/12

Combining the 1/3 and 2/3 results:
same (not aligned) = (2/3)*(24/12) = 16/12
opposite (not aligned) = (2/3)*(12/12) = 8/12
opposite (aligned) = (1/3)*(24/12) = 8/12

Expected Results:
opposite = 16/12
same = 16/12
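The bookkeeping in the six cases above can be checked with a short script. This is a sketch that only reproduces the counting procedure as written in the post: the four mixed combinations carry weights in twelfths, Bob's particle is the exact opposite of Alice's, and the 1/3 and 2/3 weights are applied the same way. It makes no claim about the physics. The names `MIXED_WEIGHTS`, `tally_unaligned`, and `expected_results` are hypothetical.

```python
from fractions import Fraction

# Alice's Y/D/X results for the four mixed combinations, with the weights
# (in twelfths) taken from the rearranged distribution in the post.
MIXED_WEIGHTS = {"uud": 1, "udd": 2, "duu": 2, "ddu": 1}

FLIP = str.maketrans("ud", "du")


def tally_unaligned():
    """Count same/opposite comparisons over all six Alice cases.

    For each detector and each result Alice could record on it, compare that
    result with Bob's results on the two other detectors, weighting each
    combination by its number of twelfths.
    """
    same = opposite = 0
    for detector in range(3):
        for alice_result in "ud":
            for alice, weight in MIXED_WEIGHTS.items():
                if alice[detector] != alice_result:
                    continue
                bob = alice.translate(FLIP)  # entangled partner is opposite
                for other in range(3):
                    if other == detector:
                        continue
                    if bob[other] == alice_result:
                        same += weight
                    else:
                        opposite += weight
    return same, opposite


def expected_results():
    """Combine the aligned (1/3) and unaligned (2/3) cases as the post does."""
    same, opposite = tally_unaligned()
    same_total = Fraction(2, 3) * Fraction(same, 12)
    opposite_total = (Fraction(2, 3) * Fraction(opposite, 12)
                      + Fraction(1, 3) * Fraction(24, 12))
    return same_total, opposite_total


if __name__ == "__main__":
    print(tally_unaligned())
    print(expected_results())
```

Run as written, the tally gives 24 same and 12 opposite, and both combined totals reduce to 4/3, i.e. the 16/12 quoted in the expected results.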

-

Time is the cause of motion (hijack split from Time)

TakenItSeriously replied to stupidnewton's topic in Speculations

I think you're thinking of frequency, which is the inverse of time, as in cycles per second. Then you connected frequency to waves, but they aren't actually the same thing, because frequency is a property of waves, much like velocity is a property of particles. However, I think that one could make an argument for waves, or perhaps more accurately fields, as a cause of motion, or at least as something that induces motion, which seems to me to be saying the same thing, though not exactly, since it's a kind of motion that is not felt. In a way, I think that is what makes it a more legitimate cause of motion than force or thrust, for example. While it's true that fields change motion the same way that forces change motion, fields seem more legitimate to me in terms of the question, because the motion that fields create is always relative between the particles, independent of outside observers. In other words, the motion itself is definitive for all observers: bodies of charged particles moving towards other charged particles, or massive bodies moving towards other massive bodies. So all outside observers must agree that there is motion between particles with mass or particles with charge, and on the cause of that motion, at least in general terms if not in mechanistic terms. All observers must agree that the relative motion between bodies is the same, and cannot argue over whether some force was causing motion or resisting motion. Ironically, the only exception is the observer in a sealed elevator with no windows, as in Einstein's thought experiment, who would think they were not in motion at all. However, you could say that the motion of particles is what creates fields, at least with EM fields, and I suspect that something similar can be said for gravitational fields. In fact, there was a quote that said something to that effect, though I can't recall it exactly. It was kind of like a chicken-and-egg relationship, though not exactly.
I think someone mentioned energy as causing motion, and I think it could be argued that energy in the form of heat is what causes Brownian motion. Energy also causes the motion of particles in the solar wind. Energy is also what induces the frequency of waves to increase, although that doesn't affect the waves' motion, which is always at the speed of light. Also, waves causing the motion of photons is debatable, as photons are always in motion at the speed of light, just as waves are always in motion at the speed of light. However, the overwhelmingly greatest amount of motion is easily due to the expansion of the Universe. Therefore I think that ultimately you would have to say that the Big Bang was the definitive source of all motion, and conservation laws are what keep motion going in whatever form followed.

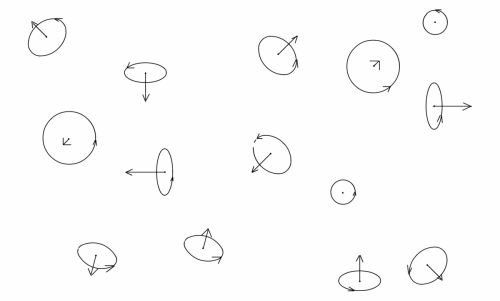

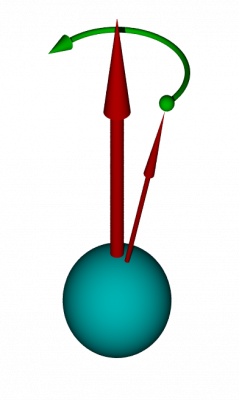

Ok, thanks. The reason I had asked is that a girl had posted a hypothesis on YouTube that I think I've heard before, and I believe it's correct, though it could be very difficult to prove. She did a good job describing it here: https://youtu.be/z_6B2M12H9w

The mechanics: The intrinsic angular momentum keeps the spin state of the electron locked at some angle due to a gyroscopic effect. There is also a Larmor precession that rotates the angle about a vertical axis, describing a cone. That's why all measurements are up or down regardless of the angle: all measurements are just measuring the vertical component of some arbitrary angle. Note that making the same measurement always produces the same result, because the precession is about the vertical axis. On the other hand, alternating measurements keep adding some amount of precession, making results less certain.

Possible solution: I believe that the trick may be in controlling the precise amount of precession that gets added. Then it should be possible to make some kind of prediction about the cone size, which finally allows for a prediction of the results. It seems viable, but it would seem to depend mostly on the precision of controlling the amount of precession.

-

Assume Alice and Bob have a set agenda to test the following case. For a single given electron/positron entangled pair:

Alice measures the X axis, which should be 50:50 up or down.
Bob measures the Y axis, which should be 3/4 the same as or 1/4 different from Alice's result.
Alice measures the X axis again ...?

I've heard, from a recorded Richard Feynman lecture, that Alice's X spin state can change after Bob measures a different spin state, but he never gave any details behind that change. What are the new odds for Alice's X measurement to change?

Must it always change?
Is it still 50:50 either way?
Does it depend on her last result?
Does it depend on Bob's last result?
Does it become 1/4 the same as Bob's Y result and 3/4 different from Bob's result?

I can't seem to find this answer anywhere, though it's an awkward search.

-

I think noticing patterns that seem to have a purpose is fine: take note, figure out what makes them interesting, and move on. Don't try to find a problem that fits the solution, because you're not wired to see connections that way. You're wired to recognize solutions that can solve a problem. Think of it like a collection of stuff in a hardware store. You don't take the inventory and try to invent something out of it, because you just end up with modern art. You just take note of what kind of stuff is available, so that when you need to fix or build something, you know what pieces are available that can do what you need.

-

I dont understand the concept of limit

TakenItSeriously replied to giordano bruno's topic in Mathematics

You could use the inverse square law for anything that radiates as well, since it extends out to infinity. Maybe these aren't the easiest concepts to visualize in terms of analogies, which are supposed to be simple examples to follow, but they would make more sense than nonsensical examples. You could always describe them in a way that's easier to understand as well. For example, when explaining the inverse square law for anything that radiates (e.g. light, gravity, magnetism, capacitance, etc.), you could imagine a sphere that has a very large but fixed number N of dots evenly distributed all over its surface. As the sphere expands, the number of dots per square meter falls in proportion to the inverse square of the sphere's radius:

n ∝ 1/r²

where
n is the number of dots per square meter
r is the radius of the sphere

As r → ∞, n keeps approaching 0 but never reaches it. Here n can be whatever the radiating value is, such as the force of gravity or the intensity of light, and r would be the distance to the center of whatever is radiating.

Edit to add: I just realized that my first post in this thread was a case of exponential dyslexia when trying to find a valid example that matched the OP example. I was confusing 1/n² with 1/2ⁿ.
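For the sphere-of-dots picture, the proportionality can be written out explicitly. This is a sketch that assumes the N dots are spread evenly over the sphere's surface area, 4πr²:

```latex
n(r) = \frac{N}{4\pi r^{2}}, \qquad
\lim_{r \to \infty} n(r) = 0, \qquad
n(r) > 0 \ \text{for every finite } r .
```

So the density gets as close to zero as you like once the radius is large enough, but no finite radius actually gives zero, which is the idea of a limit.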

Did the Definition of Variance in Probability Change?

TakenItSeriously replied to TakenItSeriously's topic in Mathematics

Perhaps it's an issue of the context in which I mostly applied probability theory, which is poker and other games, but I see it as a matter of deterministic vs non-deterministic results: only games of chance can have a predictable variance, while for games of skill the variance can only be measured from past data, which would be a statistical variance. Without data, I'm sure only the fundamental data has practical use. It may be possible to improve on that based on structure, but it still wouldn't be very practical, I would think. At least not without quantum computers, because thousands of entries will have some incredible number of permutations to work out. I just don't see a mathematical way around that, except maybe first-order approximations, which aren't math in any case, because any valid form of approximation requires using logic, I think.

For example: the variance from throwing 5 dice would have a predictable outcome that I would imagine could be calculated using combinatorics or simulated using Monte Carlo, because it's deterministic. The variance in poker tournaments depends upon human factors relative to other human factors, which is not that predictable, at least not without past results, at which point it would be a statistical variance even if applied in a probability context.

I actually spent a great deal of time studying tournament variance based on personal results, and I found that there were some very big surprises that I would never have predicted, though I was able to explain them in hindsight. Examples: For a winning player, you might think that the lower entry fees would have lower relative variance. However, at least in my case, I found that the higher entry fee events had far less relative variance, due to the more homogeneous play of the more advanced players vs the more erratic play of beginners.
In fact, not only was my variance much, much lower, I even had a much higher ROI at the top levels vs the lower levels, which surprised me quite a bit. That's because the styles I was facing had become so homogenized through playing high volumes and through learning from poker forums. They weren't very adaptive, and their style was better suited to playing against more diverse fields of players. I've always been a highly adaptive player, so given the choice of playing 9 pros with a homogeneous, non-adaptive style that had been optimized against a wide range of players, vs 9 amateurs who all made moderately larger mistakes on average, but more random mistakes, I'd rather play the all-pro event, and it wouldn't even be close.

I was actually able to see the same kind of phenomenon going on in the field of digital security, when every type of account started using identical security techniques. It created an extremely exploitable situation where discovering a single exploit could threaten all accounts, especially if exploited using malware. I don't know if it was what made the difference, but I did warn CERT about this threat on two occasions before I started to see divergence in the kinds of security again.
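The five-dice example mentioned above can be worked both ways. The sketch below assumes fair six-sided dice and takes "the variance from throwing 5 dice" to mean the variance of their sum. It computes that exactly by enumerating every outcome, then estimates it by Monte Carlo simulation. The function names, the number of trials, and the seed are arbitrary choices for illustration.

```python
import random
from fractions import Fraction
from itertools import product


def exact_variance(num_dice=5, sides=6):
    """Variance of the sum of fair dice, by enumerating every outcome."""
    totals = [sum(roll) for roll in product(range(1, sides + 1), repeat=num_dice)]
    count = len(totals)
    mean = Fraction(sum(totals), count)
    return sum((Fraction(total) - mean) ** 2 for total in totals) / count


def simulated_variance(num_dice=5, sides=6, trials=50_000, seed=0):
    """Monte Carlo estimate of the same variance from simulated throws."""
    rng = random.Random(seed)
    totals = [sum(rng.randint(1, sides) for _ in range(num_dice))
              for _ in range(trials)]
    mean = sum(totals) / trials
    return sum((total - mean) ** 2 for total in totals) / trials


if __name__ == "__main__":
    exact = exact_variance()
    print(f"exact:     {exact} ~ {float(exact):.3f}")
    print(f"simulated: {simulated_variance():.3f}")
```

The exact value is 175/12 (about 14.58), and it is obtained from the probabilities alone, with no data sample. The simulated figure should land close to it and tighten as the number of trials grows.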

Did the Definition of Variance in Probability Change?

TakenItSeriously replied to TakenItSeriously's topic in Mathematics

I may not have been clear. My point was that variance in statistics and variance in probability used to be different, and now they're defined as the same. The statistics definition was the same as given in the article, effectively the square of the standard deviation. The probability definition was effectively the largest positive or negative swing. But it seems like they need to be different because of past vs future points of view. As I mentioned earlier, statistics is an analysis of data from past or current events, while probability is estimating the odds of future outcomes. So how could you provide a standard deviation when you have no data and only rely on something like combinatorics to calculate odds? Is there a standard deviation that could be predicted? Edit to add: I see the definition was already given, so perhaps I need to look into the sources a little more deeply. -
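For reference on the question raised above, here are the standard textbook definitions as far as I know them, written side by side. This is a sketch for comparison, not a quote from the article the thread refers to, and the symbols (p_i, x_i, n) are my own labels. The first line needs only the probabilities of the outcomes; the second needs a data sample.

```latex
% Variance of a random variable X with outcomes x_i and probabilities p_i (no data needed)
\[
  \mu = \sum_i p_i x_i, \qquad
  \operatorname{Var}(X) = \sum_i p_i (x_i - \mu)^2, \qquad
  \sigma = \sqrt{\operatorname{Var}(X)}
\]
% Sample variance computed from n observed values x_1, ..., x_n
\[
  \bar{x} = \frac{1}{n} \sum_{j=1}^{n} x_j, \qquad
  s^2 = \frac{1}{n-1} \sum_{j=1}^{n} (x_j - \bar{x})^2
\]
% Worked case: a fair coin paying +1 or -1
\[
  \mu = \tfrac{1}{2}(1) + \tfrac{1}{2}(-1) = 0, \qquad
  \operatorname{Var}(X) = \tfrac{1}{2}(1)^2 + \tfrac{1}{2}(-1)^2 = 1, \qquad
  \sigma = 1
\]
```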

I dont understand the concept of limit

TakenItSeriously replied to giordano bruno's topic in Mathematics

Certainly. I was referring to the example used in the OP, which applies the half-distance term recursively. I was thinking that it was more appropriate as an example of something like half-life. Edit to add: or some inverse square relationship. -
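The OP's example is not reproduced here, so the following is only a sketch under the assumption that it is the usual one: cover half of the remaining distance at each step, over a total distance of 1. The function name is hypothetical.

```python
from fractions import Fraction


def halving_partial_sums(steps):
    """Return the running totals of 1/2 + 1/4 + ... + 1/2**steps as exact fractions."""
    total = Fraction(0)
    sums = []
    for k in range(1, steps + 1):
        total += Fraction(1, 2 ** k)  # each step covers half of what was left
        sums.append(total)
    return sums


if __name__ == "__main__":
    for step, covered in enumerate(halving_partial_sums(10), start=1):
        # the gap to the full distance halves every step: 1 - covered == 1/2**step
        print(step, covered, 1 - covered)
```

The total never reaches 1 after any finite number of steps, but the gap can be made as small as you like, which is the sense in which the limit is 1.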

Did the Definition of Variance in Probability Change?

TakenItSeriously replied to TakenItSeriously's topic in Mathematics

1920! I'm not that old, lol. It may have been an issue of the times due to the poker boom, or of the reliability of my sources, though I know they included at least three. I also remember Wikipedia had many definitions for different fields, such as statistics, probability, accounting, etc. Edit to add: is standard deviation even used in probability? How could that work without a data sample? -

Did the Definition of Variance in Probability Change?

TakenItSeriously replied to TakenItSeriously's topic in Mathematics

I'm certain they were different at most 10 years or so ago. I remember it was extremely annoying in the poker forums when long debates would break out over variance and people didn't realize they were discussing two different definitions. Specifically, it was an issue of variance as used in tournaments, where large entry fees and extremely large first place prizes make a huge difference, vs variance as used in online cash games, where it was in terms of a database of stats. Edit to add: and yes, I did look up their specific definitions back then. -
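To make the tournament vs cash game contrast concrete, here is a rough sketch using the ordinary variance formula on two payout models. All of the numbers are hypothetical and chosen only so that both games break even on average; they are not taken from any results in the thread.

```python
from fractions import Fraction


def mean_and_variance(outcomes):
    """outcomes: list of (probability, net_result) pairs whose probabilities sum to 1."""
    mean = sum(p * x for p, x in outcomes)
    variance = sum(p * (x - mean) ** 2 for p, x in outcomes)
    return mean, variance


# Hypothetical tournament: 100 buy-in, 1% chance of a 10,000 first prize, nothing otherwise.
tournament = [(Fraction(1, 100), 9900), (Fraction(99, 100), -100)]

# Hypothetical cash session: win or lose 100 with equal probability.
cash_session = [(Fraction(1, 2), 100), (Fraction(1, 2), -100)]

if __name__ == "__main__":
    print(mean_and_variance(tournament))    # mean 0, variance 990000
    print(mean_and_variance(cash_session))  # mean 0, variance 10000
```

Both games have the same expected result, but the top-heavy prize gives the tournament 99 times the variance, which is one way the large first place prizes show up under the standard definition.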

I dont understand the concept of limit

TakenItSeriously replied to giordano bruno's topic in Mathematics

I'm no expert, but it seems like just another case of a bad analogy, though it's basically the same analogy used when I was a student decades ago. Shouldn't a proper analogy include a squared term in order to be valid? -

Did the Definition of Variance in Probability Change?

TakenItSeriously posted a topic in Mathematics

Below is from Wikipedia. Not that long ago, probability and statistics had different definitions of variance because of their different frames of reference. Probability is about future expectations and statistics is about past results. In probability, it used to be something like: the maximum amount something could change based on a single event. However, now I can't find any evidence of that old definition. My question has nothing to do with the merits of the change. Without having thought that hard about it, the new definition seems more useful in some respects but worse in others, so perhaps there should be two terms instead of one. However, just changing definitions without any reference to the change is confusing and dangerous. My questions are: When did it change? How did it change with no evidence of the change turning up in a quick Google search? -

Hijack from intuitive model of a particle wave

TakenItSeriously replied to Butch's topic in Speculations

Sorry guys, I've been tied up. I'll try to post something more comprehensive soon. -

Hijack from intuitive model of a particle wave

TakenItSeriously replied to Butch's topic in Speculations

The matter/antimatter entanglement I am referring to is the matter/antimatter created by the BB, which I haven't explained fully yet, as that is in Part 2: An Intuitive Model for Quantum Mechanics. I will probably merge Part 1 and Part 2 when I post Part 2. Unfortunately, this confusion is a consequence of the fact that this is a converging model for a TOE, as I posted at the top of the original thread. Therefore, I need to provide rather long replies to short questions, and those replies need to cover more and more of the unposted sections. It's difficult to explain, and even more difficult to write in the first place. Hopefully, once I get it all posted, with links, it won't be so confusing. -

Hijack from intuitive model of a particle wave

TakenItSeriously replied to Butch's topic in Speculations

Sorry, I'm still not sure what it is you're asking for. This source explains how, according to the Big Bang Theory, every particle in the universe was created as a matter/antimatter entangled pair, in other words a universe of matter and a mirrored universe of antimatter. http://www.physicsoftheuniverse.com/topics_bigbang_antimatter.html I've already given an abundance of evidence in the original thread here: http://www.scienceforums.net/topic/101660-an-intuitive-particle-wave-model/
Following is a Summary of the Evidence:
A hidden antimatter universe explains the missing antimatter created by the big bang.
A matter/antimatter entangled universe solves entanglement's apparent "spooky information at a distance" problem: Alice/anti-Alice and Bob/anti-Bob observers observe up and down spin states.
Based on the silicone drop experiment, particles in a dual orbit passing through the slits create the band-like dispersal without needing a single particle to pass through both slits.
Particle/wave duality is due to entangled pairs causing the wave effect and broken entanglement causing the particle effect.
Observers causing the wave to particle transition is caused by observers breaking entanglement.
Heisenberg Uncertainty is caused by the position/momentum information being split between the two universes.
Entangled +/- particles in a dual orbit create a dipole moment that can explain the intrinsic magnetic moments of charged particles.
The dual helix model creates differential electric waves perpendicular to differential magnetic waves, which results in a 0 net energy state, or waves that may self-propagate through a vacuum indefinitely.
Differential waves negate any need for a ground reference or a clock reference. -

Hijack from intuitive model of a particle wave

TakenItSeriously replied to Butch's topic in Speculations

I guess I was thinking that all fields were considered to be able to exist between dimensions, but perhaps that's only gravity fields? How about: As evidence for this, the particle/antiparticle pair creates a dipole moment, which could explain why charged particles have intrinsic spin magnetic moments: μB = eħ/(2·me·c), where e is the elementary charge, ħ is the reduced Planck constant, me is the electron rest mass and c is the speed of light.
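As a quick numerical check on the quantity named above: the expression with c in the denominator is, as far as I know, the Gaussian (CGS) form of the Bohr magneton, and in SI units the c drops out, giving μB = eħ/(2·me). The sketch below evaluates only that SI form, using CODATA 2018 constants typed in by hand (an assumption worth double-checking). It says nothing about the dipole model itself.

```python
# CODATA 2018 values in SI units (entered by hand; treat as assumptions to verify)
ELEMENTARY_CHARGE = 1.602176634e-19   # e, in coulombs (exact by definition)
REDUCED_PLANCK = 1.054571817e-34      # hbar, in joule seconds
ELECTRON_MASS = 9.1093837015e-31      # m_e, in kilograms


def bohr_magneton_si():
    """Bohr magneton in SI units: mu_B = e * hbar / (2 * m_e), in joules per tesla."""
    return ELEMENTARY_CHARGE * REDUCED_PLANCK / (2 * ELECTRON_MASS)


if __name__ == "__main__":
    print(bohr_magneton_si())  # about 9.274e-24 J/T
```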