Everything posted by joigus

-

State of "matter" of a singularity

I agree through and through. What seems peculiar to me is that, if you insist on forcing the maths to tell you something, and try to get S(entropy)=0, which would be sensible for a classical approach, you end up getting S(entropy)=infinity!, pointing to a perhaps misleading --maybe just an artifact of the approximation-- "entropic catastrophe," instead of an entropy-less picture.

I do too. What I find intriguing in the holographic approach is that quantities that make sense in the bulk perhaps do not in the complement of the bulk* (the exterior bulk, STS), but have a counterpart on the horizon. This, of course, must have to do with the hyperinteresting question of the holographic dictionary: how to translate observables that make sense in the bulk to observables that make sense on the horizon.

This takes me back to mutterings that I've suffered for years now, and I will express here. What if Einstein's equations are valid locally** in the exterior of a certain compact surface, but not globally? Most fundamental static field equations have the form (schematically):

[2nd-order differential operator](fields with asymptotic constraints)=(coupling constant)x(sources)

(delta)F=S

In the case of Einstein, delta is the non-linear combination of 2nd-order derivatives that constitutes the Einstein tensor G when acting on g (the metric, say the field), formally constructed in such a way that covariant differentiation D produces an identity:

D(delta)g=DG=0

and,

DS=0

In the case of Laplace, delta is the Laplacian, F is the electrostatic potential, and S (the source) is the charge density. This makes sense for smooth charge distributions with spatial extension, but gets dramatically silly when S is a point charge. Could it be the case that this "approximation" can be made valid once a surface is chosen, but not extrapolated to be valid globally? (That is, shrinking the surface to one point so as to express the field everywhere, as the singularity is suggesting.)

* Maybe they do. I don't know enough to know.

** Or even only on charts.
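
A rough worked version of the point-charge case, as a sketch under standard electrostatics assumptions (SI units; the cutoff radius \( a \) is introduced here only for illustration and is not part of the argument above). For a point charge \( q \), the field outside any sphere of radius \( a \) is perfectly well behaved, and the field energy stored outside that sphere is finite:

\[ E(r)=\frac{q}{4\pi\varepsilon_{0}r^{2}},\qquad U(a)=\int_{a}^{\infty}\frac{\varepsilon_{0}}{2}E^{2}\,4\pi r^{2}\,dr=\frac{q^{2}}{8\pi\varepsilon_{0}a} \]

It is only when the surface is shrunk to a point that things go wrong:

\[ \lim_{a\rightarrow0}U(a)=+\infty \]

So the description is fine in the exterior of any chosen surface, and the trouble appears only in the extrapolation to the point itself.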

-

crowded quantum information

This is not a definition. It works for you just because --no offence-- you seem to have very low standards on what constitutes a definition. "Spooky" is not a physical term. If you paid even the slightest attention to the mathematical formalism of quantum mechanics, you would understand this perfectly, as any "blips" of information or of energy would have to travel in the form of "blips" in the square of the absolute value of the wave function --or the square of the gradient too, in the case of energy. That's what quantum dynamics doesn't allow them to do superluminally. (quoting your quote) Yes. Therefore => there can be no non-local interactions. Maybe we would have to go a step down and discuss what an interaction is. You say things like, What? The interaction is not physical? What is it then? Metaphysical? Because I'm keenly aware of the dangers of letting hidden assumptions slip into your arguments, I've found that it may be useful to strip the ideas to their bare minimum, and say only what they say, and nothing more. What comes next is a sketch of the history of these ideas. This is in order to satisfy @Eise's demands that we be clear. EPR: If you can predict with absolute certainty the result of an experiment without in any way disturbing the system, there must be some element of reality underlying it. Quantum mechanics says that certain pairings of observables, say A and B, are incompatible. If I can exploit a conservation law that's valid for at least one of them, say A, in a bipartite system (A1+A2 = constant) and measure A in part 1, I can infer what the value of A2 is without actually measuring it. I can, at the same time (within a space-like interval), measure B in part 2, that is B2, with as much precision as desired, and I would have proven that the quantum description of reality is incomplete, because I would have the values of both A2 and B2, which quantum mechanics declares incompatible.
In a nutshell: Either quantum mechanics is incomplete, or your wave function would have to be updated superluminally, to make this incompatible character of A and B persist. I hope that is clear. If it is, we can all jump to the same page and proceed to Bohm, CHSH-Bell, Aspect. That means: why Bohm shifted the discussion to spin, what the CHSH-Bell correlations do and don't say, and what Aspect actually found. Then, perhaps, a discussion of science as perceived by the masses as well as relatively learned non-experts, and why this non-locality nonsense proves to be so persistent, the very same way that thousands and thousands of claims of possibilities for perpetual motion kept coming long after the question of its impossibility was perfectly understood by the theorists.

-

crowded quantum information

This is simply false. There is no such thing as non-local interactions. Please give references or stop repeating things you think you've heard or read. Your argument is logically indistinguishable from accounts that bigfoot is real... I'm sorry... "real." This reminds me of someone who once said something that was totally wrong. Come on. You can do better than this. Oh, wait... No, you can't.

-

crowded quantum information

When the quote function is playing up, I've found that copy and paste works better. But there seems to be a problem quoting, editing. Some functions seem not to be working.

-

State of "matter" of a singularity

Yes. Sorry. Same letter, different things. It's tradition, plus too few letters in the alphabet.

-

State of "matter" of a singularity

How could h possibly go to zero? It's a constant. What we mean by that is that the action (frequently named S) is enormous in comparison to h. This generally works fine when you take the solutions of the equations and make an expansion in powers of (h/action), or action + h(something of order 1), etc. It's not to be applied directly to the equations of motion, for example. The Schrödinger equation would be meaningless. So it's something to do at the end, after having solved a problem, or in intermediate steps, but more carefully --like in the WKB (semiclassical) approximation, where you ignore terms in powers of h only when the powers are high enough.
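
As a sketch of what "more carefully" means in WKB (standard textbook form for the one-dimensional, time-independent Schrödinger equation; the symbols \( S_{0}, S_{1}, p \) are introduced here for illustration), write the wave function as a phase and expand the exponent, not the equation, in powers of \( \hbar \):

\[ \psi(x)=\exp\left[\frac{i}{\hbar}S(x)\right],\qquad S=S_{0}+\frac{\hbar}{i}S_{1}+\left(\frac{\hbar}{i}\right)^{2}S_{2}+\cdots \]

Substituting into \( -\frac{\hbar^{2}}{2m}\psi''+V\psi=E\psi \) and collecting powers of \( \hbar \) gives, at the two lowest orders,

\[ \left(S_{0}'\right)^{2}=2m\left(E-V\right)\equiv p^{2}(x),\qquad S_{1}'=-\frac{S_{0}''}{2S_{0}'} \]

so that

\[ \psi(x)\simeq\frac{C}{\sqrt{p(x)}}\exp\left[\pm\frac{i}{\hbar}\int p(x)\,dx\right] \]

Note that \( \hbar \) is never set to zero: it survives in the phase, and only the higher-order corrections \( S_{2},\ldots \) are dropped.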

-

State of "matter" of a singularity

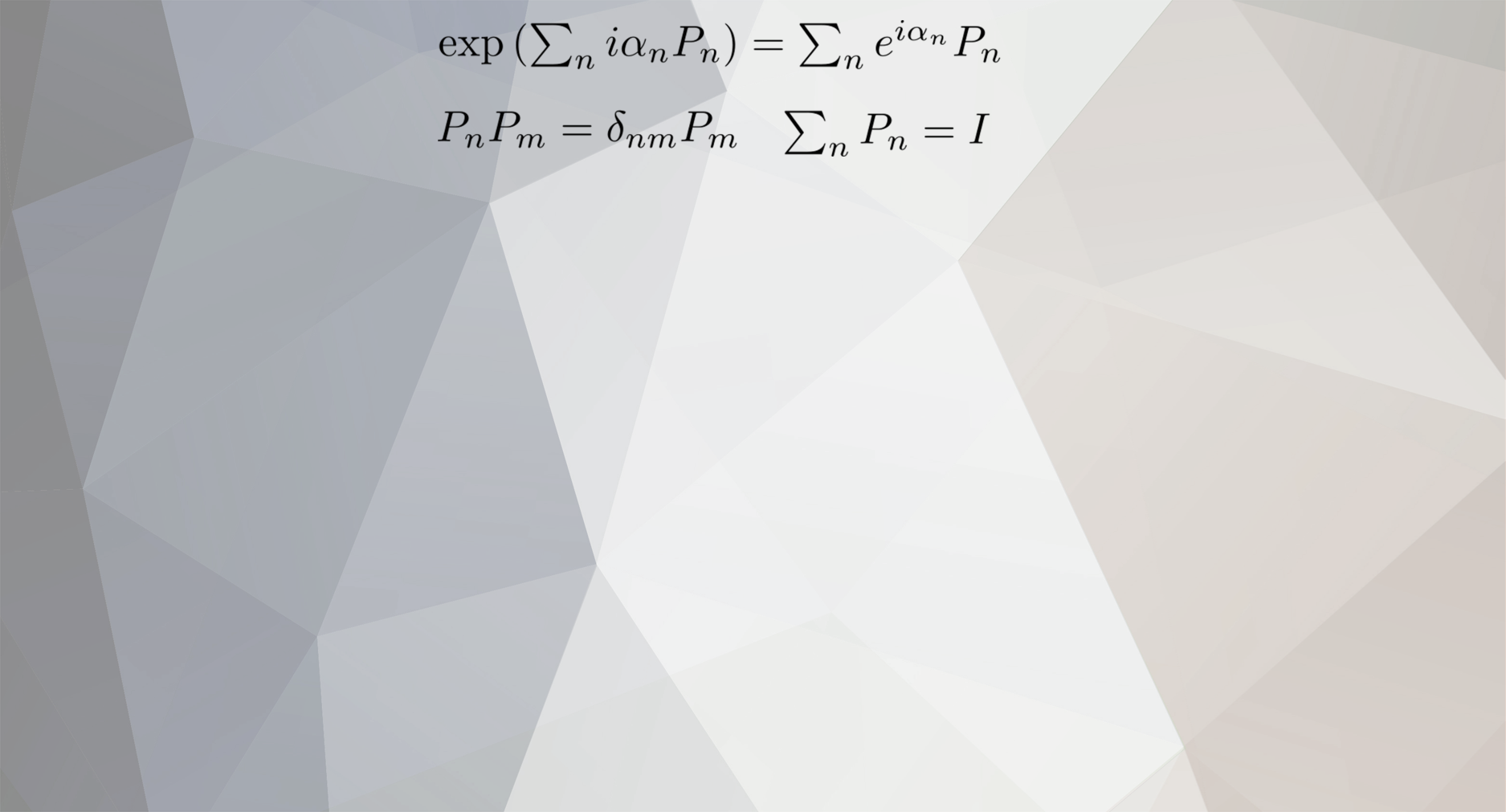

Sorry. I wasn't clear. I totally agree with you that a classical BH has no entropy. The no-hair theorem guarantees that there are only three parameters that characterise a stationary BH: mass, charge, and angular momentum. IOW, there is only one state. IOW probability(Q,M,J)=1, and the probability of any other state is zero because there is no other state. Therefore, \[ S_{\textrm{CBH}}=-1\ln1=0 \] On the other hand, \[ S_{\textrm{QBH}}(A)=\frac{c^{3}A}{4G\hbar} \] and, \[ \lim_{\hbar\rightarrow0}\frac{c^{3}A}{4G\hbar}=+\infty \] So, considering a classical BH, "dressing" it with entropy, and trying to get to the classical version by (perhaps simplemindedly) having h go to zero, doesn't make a lot of sense. I'm willing to admit I may be just chasing shadows here.
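
To put rough numbers on that formula, here is a minimal sketch. It assumes SI values for the constants, a non-rotating, uncharged (Schwarzschild) hole so that the horizon area follows from the mass alone, and an approximate solar mass; the function names are hypothetical, and the entropy is given in units of Boltzmann's constant, as in the expression above.

```python
import math

# Physical constants in SI units
G = 6.67430e-11         # m^3 kg^-1 s^-2
C = 2.99792458e8        # m/s
HBAR = 1.054571817e-34  # J s
M_SUN = 1.989e30        # kg, approximate solar mass (assumed value)

def horizon_area(mass):
    """Horizon area of a Schwarzschild black hole of the given mass (m^2)."""
    r_s = 2.0 * G * mass / C**2
    return 4.0 * math.pi * r_s**2

def entropy_over_kB(area, hbar=HBAR):
    """S/k_B = c^3 A / (4 G hbar) for a horizon of the given area."""
    return C**3 * area / (4.0 * G * hbar)

area = horizon_area(M_SUN)
s_now = entropy_over_kB(area)

# Formally shrinking hbar at fixed area makes S grow like 1/hbar.
for factor in (1.0, 1e-3, 1e-6):
    print(factor, entropy_over_kB(area, HBAR * factor))
```

At fixed area the entropy scales exactly as 1/h, which is the divergence discussed above; nothing in the formula lets it approach zero.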

-

crowded quantum information

Thanks for the compliment, but I can guarantee to you I'm no computer, and I'm no superman. I do have my kryptonite, like everybody else. I do spend a lot of time thinking, and reading, and doing calculations about these things, and trying to keep up to date on the experimental front. When I say something stupid, or just sloppy, I retract and apologise, and some people on these forums can bear witness to that. The "beable" idea is very interesting, but it would take us too far off on a tangent, I think. It's also too mathematical perhaps, if you take my meaning.

-

crowded quantum information

(My emphasis.) Here: (In this self-quote, I've just edited minimally for clarity, and highlighted the edited terms in boldface, as well as terms that point to the parts that you really had better read carefully before you embarrass yourself any further.) If you don't read the previous posts, you're only going to make this thread unbearably difficult to follow for everybody, including yourself. If I sounded demeaning to you, I sincerely apologise. It wasn't what I meant. But now I think it's obvious why I'm annoyed. If, for some reason, you don't like the maths, or you find its sheer mention annoying, or you don't think it's part of the argument, just tell me and I will try to re-phrase in some other way, because it is. It is an essential part of the argument. Don't just dismiss it or ignore it. I also remind you that you were the first to try to make this personal: What does that have to do with anything? Please, use the quote function --as I did-- to direct me to your definition of non-locality, as I seem to have missed it.

-

crowded quantum information

(My emphasis.) It is even conceivable that the question will never be settled. It is even possible that, once the semantics of the problem is formulated in --mathematically-- totally unambiguous terms, we find out it corresponds to some of the questions that fall under the category of "undecidable" à la Gödel. Who knows? In fact, I do believe it may well be undecidable. If generalisations of QM are what this is all about, the sky of possible ideas is the limit. Let me give you an example. The non-relativistic Schrödinger equation tells us that the evolution of the wave function is given by a differential equation that's 2nd-order in positions and 1st-order in time. This is local out and out. But what if I'm allowed to modify the equation in such a way that there is a non-local term that only becomes relevant when a measurement is performed? The actual crux of the matter is, IMO, not so much whether the wave function represents something objective as how faithfully it parametrizes what's going on, or whether, on the contrary, it parametrizes more or less, as the case may be, than what we bargained for. Gauge invariance gives us a clue that it does give us much less --I'd say incommensurably less-- than we bargained for. As soon as you have gauge fields, you can change the wave function from any initial prescription \( \psi \) that's useful for, eg, collision theory, to any other that locally differs from your original choice by a (time-position)-dependent phase factor, and may be carrying other useful/significant/meaningful information. Who is to say the immensely rich landscape of infinitely-many gauge prescriptions does not carry a subgroup that tells us which one of the alternatives of a measurement is the one we are measuring? Because that's a --blatantly deliberate-- rhetorical question, let me answer it explicitly, and face the possible consequences of any counter-argument that may arise: Nobody.

-

crowded quantum information

I'm sorry I've failed. If I can keep your interest --the question is a really good one--, and until I can find a better way to explain it, please try to teach yourself as much as you can about --keywords-- individual vs collective interpretation of quantum mechanics. Pure states can be understood both ways, while mixed states can only be understood the second way. This piece of literature may be helpful: https://www.amazon.es/Quantum-Mechanics-Development-Leslie-Ballentine/dp/9810241054/ If you abide by the collective interpretation* of quantum mechanics all the way, the problem of measurement --in its original formulation-- simply dissolves before your eyes, and J. S. Bell** and M. Nauenberg's words in a rather obscure paper*** suddenly make sense, and you understand why Bell took so much interest in the double solution proposed by Bohm and De Broglie. "Reduction of the wave packet" is a synonym of "collapse of the wave function." It is only because the last generations have decided to re-coin the term to mean "decoherence in the density matrix," and only that, that the problem has become almost unintelligible. * In a nutshell: One electron wave function actually represents infinitely-many electron experiments. ** Probably the most misunderstood theoretical physicist of all time. This is my view. *** https://www.worldscientific.com/doi/10.1142/9789812386540_0003 I'll try to do that, I promise. Unfortunately, I will have to get involved in a lot of self-quoting. One part of the problem is that J. S. Bell shifted his position somewhat during the time that he was thinking about this problem. So it's not impossible that he said some things here and there that do not conform to what his final position was. So I'll do my best. The problem with scientific literature is that sometimes you capture statements that were made when the problem was in the process of being understood. A good example of this is general relativity in the 60's.
Lots and lots of peer-reviewed incorrect calculations made it to the literature. The final judge is, of course, the experiment, and I'm sure nobody will ever be able to send any kind of superluminal signal. In my mathematically-biased mind, this is only too clear, as QM proposes nothing more than a finite-order differential equation, so unless this "model" is fundamentally wrong, no non-local effect can be claimed. But again, I'll try to explain it better. Yes, that's exactly what I'm saying. Now, give me a definition of a non-local theory, please, so that this discussion is not taking place in a conceptual vacuum. Exactly. It would have to be non-local. Intuitively, it's very clear: Because you need wave functions to represent quantum mechanical probability distributions, you would have to update the hidden variables in some non-local way to have some definite variables do the job of updating the wave function. But that would be only because you're trying to picture an internal classical world in the wave function to represent that updating in the wave function. If you actually believe that no "internal," "hidden-variable" representation of the results implements that updating, and quantum mechanics is sufficient to you, you are freed from that constraint. Sorry if I wasn't clear before.

-

crowded quantum information

No. And the fact that you keep ignoring the references I posted, and my best efforts to explain why, as well as other members', is making this ping-pong match really annoying. Again: https://en.wikipedia.org/wiki/No-teleportation_theorem https://iopscience.iop.org/article/10.1209/0295-5075/6/2/001 As I posted before, there are claims that generalisations of QM could explore non-locality. Generalisations of QM do not exclude the possibility of angels either. But we haven't seen any, and have serious reasons to believe there aren't any. For a theory to be actually non-local, not gibberish-non-local like what you're pretending to argue --I know how much you hate maths, but I have no better way to explain-- you would have to have dependence on arbitrarily high-order derivatives in the field variables. That's because you would have to have couplings between fields at two distant points, \( x \) and \( x-a \), of the form, for example, \[ \varphi_{1}\left(x\right)\varphi_{2}\left(x-a\right)=\varphi_{1}\left(x\right)\left[\varphi_{2}\left(x\right)-a\varphi_{2}'\left(x\right)+\frac{1}{2}a^{2}\varphi_{2}''\left(x\right)-\cdots\right] \] so the fields would be coupled at distant points \( x \) and \(x-a\), and the theory would therefore fall well outside the realm of any quantum theory. Quantum theory faithfully follows the spatial dependence of the classical theories of which it is the quantised version. I've shown you arguments and authoritative claims that no quantum mechanical theory formulated so far --including quantum field theory-- is non-local. But you haven't answered any of it yet. Quantum mechanics is not non-local. Not in its mathematical form, as far as we know, and not in the experiments. If you think it is, it is about time you start to present your case seriously, instead of repeating something you've heard. We are not children. If you don't like locality, go to another universe.
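
The series in that expression can be checked numerically. The following is only an illustrative sketch: it uses sin as a stand-in for the field \( \varphi_{2} \) (my choice, because its derivatives are known in closed form), and the function name is hypothetical. It shows that the value at the distant point \( x-a \) is only recovered from data at \( x \) when derivatives of higher and higher order are included.

```python
import math

def shifted_by_taylor(x, a, order):
    """Approximate sin(x - a) using derivatives of sin at x up to `order`.

    The n-th derivative of sin at x is sin(x + n*pi/2), so the truncated
    series is sum_n (-a)^n / n! * sin(x + n*pi/2).
    """
    return sum(
        (-a) ** n / math.factorial(n) * math.sin(x + n * math.pi / 2)
        for n in range(order + 1)
    )

x, a = 0.3, 2.0
exact = math.sin(x - a)

# Error of the truncated series at increasing derivative order
errors = [abs(shifted_by_taylor(x, a, order) - exact) for order in (2, 6, 20)]
for order, err in zip((2, 6, 20), errors):
    print(order, err)
```

A truncation at 2nd order, which is all a finite-order differential equation has access to, misses the distant value badly; the agreement only becomes good as the order grows without bound.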

-

crowded quantum information

All systems that are maximally determined, yes. Those are called pure states. OTOH, it's always possible to prepare systems in non-maximally determined states, called mixtures or mixed states, and those are represented by a matrix built from products of the different sub-states, so to speak, in which the diagonal elements are the statistical weights of the pure states the collective state is made of. In other words, they represent quantum states in which not all the information has been monitored. The probabilities attached to the different "pure components" (wave functions) don't necessarily have a quantum origin. In that case there is a mixture of quantum uncertainties and other uncertainties.
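
A minimal sketch of that distinction for a single spin-1/2, in plain Python. The helper names are hypothetical, and the purity Tr(rho^2) is a standard diagnostic that I am adding for illustration; it was not mentioned above.

```python
import math

def projector(state):
    """Return |state><state| as a 2x2 nested list."""
    return [[state[i] * state[j].conjugate() for j in range(2)] for i in range(2)]

def mixture(weights, states):
    """Return sum_k w_k |psi_k><psi_k| for statistical weights w_k."""
    rho = [[0j, 0j], [0j, 0j]]
    for w, s in zip(weights, states):
        p = projector(s)
        for i in range(2):
            for j in range(2):
                rho[i][j] += w * p[i][j]
    return rho

def purity(rho):
    """Tr(rho^2): equals 1 for a pure state, less than 1 for a mixture."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2)).real

up = [1 + 0j, 0j]
down = [0j, 1 + 0j]
plus = [complex(1 / math.sqrt(2)), complex(1 / math.sqrt(2))]  # superposition of up and down

rho_pure = projector(plus)                   # maximally determined state
rho_mixed = mixture([0.5, 0.5], [up, down])  # 50/50 statistical mixture

pure_purity = purity(rho_pure)
mixed_purity = purity(rho_mixed)
print(pure_purity, mixed_purity)
```

Both matrices have the same diagonal (0.5, 0.5) in this basis; what separates the superposition from the mixture is the off-diagonal terms, which are present in the first and absent in the second.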

-

crowded quantum information

This example illustrates very nicely an analogue for quantum superpositions, but like any other classical analogue of quantum systems, it only illustrates one particular aspect of them. But I'm sure you agree that no classical analogy can actually embody all the properties of a multipartite quantum system, or of any other quantum system for that matter. The coin illustrates very well the indefinite nature of the intermediate states, but misses the correlations, and the fact that the parts of the state can be taken far apart in space. The gloves cannot reproduce the total indefiniteness that characterises the state before a measurement is performed. So we're at a loss for analogies really.

-

Does darkness exist ?

I'd say you may be minutes away from being done. Oh, yes. Your "evidence." I'm very impressed.

-

State of "matter" of a singularity

Sorry, I don't follow. The azimuthal angle leads to a description that's constantly varying on a line? One angle disappears because you can only use one direction to provide a set of operators to express angular momentum. It's a convention that we use the angle that represents rotation around the z-axis. Jx, Jy and Jz do not commute with each other, so we only get to pick one. Is that what you're trying to say? Superstring theory has produced some intuitions or concepts that strike me as potentially revealing: dualities, unexpected symmetries... It has the flavour of a Copernican turn. But the landscape of possibilities it opens up is too complicated to be predictive. I'd say I'm in two minds about it, but it's more like I'm in \(10^{500}\) minds, or whatever the number of ways to compactify the manifolds was. This is what I think truly underlies John Bell's concept of beables: A set of commuting operators that span the internal space of elementary particles. We would have to drop the requirement that everything relevant "inside the particle," so to speak, is amenable to parametrisation with real numbers and Hermitian operators --observables. Maybe the condition that every relevant parameter be an observable is too strong, and only a human constraint. Maybe that's the path to tackle the problem of singularities. Expressing measurable consequences of that idea may be a taller order though.
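
The statement that Jx, Jy and Jz do not commute can be checked in the simplest case. This is a sketch for spin 1/2 only, in units where hbar = 1 (both are my assumptions for illustration), with hypothetical helper names.

```python
def matmul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def commutator(A, B):
    """[A, B] = AB - BA for 2x2 matrices."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(2)] for i in range(2)]

# Spin-1/2 angular momentum operators, J = sigma / 2 (Pauli matrices), hbar = 1
Jx = [[0, 0.5], [0.5, 0]]
Jy = [[0, -0.5j], [0.5j, 0]]
Jz = [[0.5, 0], [0, -0.5]]

comm_xy = commutator(Jx, Jy)
i_Jz = [[1j * Jz[i][j] for j in range(2)] for i in range(2)]

print(comm_xy)  # compare with i*Jz
print(i_Jz)
```

The commutator [Jx, Jy] comes out equal to i Jz rather than zero, so no two components can be diagonalised together, and one direction has to be picked by convention.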

-

Does darkness exist ?

No. It's very easy to see it's wrong. Light can be detected even when it's not visible. The whole thing has nothing to do with human perception. Your eyes are adapted to be particularly sensitive to green, for good evolutionary reasons, and totally blind to ultraviolet or infrared light. Period. Are we done?

-

Does darkness exist ?

Absolutely watertight argument.

-

crowded quantum information

0) Quantum mechanics (a totally local theory) predicts quantum correlations for spin

1) If some hidden variables explained quantum correlations for spin => they would contradict quantum mechanics (Bell)

2) Quantum mechanical correlations for spin are experimentally confirmed (Aspect) => QM is correct even for these subtle cases.

Good! Let's go home --quoting M. Gell-Mann. But no. Wait a minute. What if...? Here's where things get complicated. People go back to Bell's paper and notice he mentions something about non-locality. Bell was very ambivalent about this point for a while. Sometimes he thought his inequalities implied some kind of non-locality, and in a matter of weeks or months, he changed his mind about it, agreeing with Feynman that they only reflected the peculiar nature of quantum probabilities: Some propositions that, from a classical POV, make perfect sense as independent propositions do not behave like that at all in quantum mechanics. In QM you can have propositions that are either true, false, or neither true nor false (quantum superpositions). Bell finally sets the record straight and publishes his famous paper on Bertlmann's socks and the nature of reality. => You don't need any magical action at a distance to explain correlations based on a conservation principle. It happens classically all the time! End of story. Or is it? The jury is still out, only because they didn't, or wouldn't, hear the call to go back home. People keep going back to those papers looking for a magical action at a distance for which there was no need at all in the first place. Why? Because: Quantum mechanics (a totally local theory) predicts quantum correlations for spin
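
Points 0) and 1) can be illustrated with the CHSH combination of correlations. This is a sketch under stated assumptions: the spin-singlet correlation E(a, b) = -cos(a - b) is the standard quantum prediction for analyser angles a and b, the particular angles are the usual textbook choice, and the function names are hypothetical.

```python
import itertools
import math

def E_quantum(a, b):
    """Quantum spin correlation for the singlet state, analyser angles in radians."""
    return -math.cos(a - b)

def chsh(E, a, a2, b, b2):
    """CHSH combination E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

# Standard choice of angles that maximises the quantum value
quantum_S = chsh(E_quantum, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)

# Pre-assigned outcomes: each of A, A', B, B' has a definite value +1 or -1.
# Any average over such assignments cannot exceed the largest single case.
classical_max = max(
    abs(A * B - A * B2 + A2 * B + A2 * B2)
    for A, A2, B, B2 in itertools.product((-1, 1), repeat=4)
)

print(abs(quantum_S), classical_max)
```

Definite pre-assigned values never get past 2, while the quantum prediction reaches 2*sqrt(2); that gap is what the experiments in point 2) test.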

-

Does darkness exist ?

Photodetectors not clicking.

-

Does darkness exist ?

Perhaps you should start your study of physics with a dictionary? "Opaque" and "dark" are different attributes. Opaque basically means "it doesn't let light go through it," while dark means "there is no light there."

-

crowded quantum information

This is, I think, a brilliant question. We've touched on it laterally, but because there was disagreement on more basic aspects, I for one have dispatched it too quickly perhaps. I'm aware that some people --who are not completely off their rocker-- say that. But decoherence can only be ascertained after a large-enough number of identically-prepared experiments is performed --ideally, infinitely many experiments. The state to account for is no longer a pure state, but a strict mixture. It cannot, and should not, be considered as representing one run of the experiment, but infinitely many runs of the experiment. What does it even mean that decoherence is globally instantaneous in that case? Mind you: Decoherence tells you that the different components of the wave function are no longer in phase --think about the double-slit experiment. How do you measure that with just one instance? How do you know there is no longer interference by shooting just one electron? You can rest assured that's the case, otherwise relativistic causality would be violated. That's certainly the case for spin, for the very simple reason that experiments and mathematical theorems cannot deceive us. I don't think it's necessarily the case for position variables. Caveats: Keep in mind that the previous ones are my answers. As to the 1st one, it's possible that the view is not unanimous, but I think the argument I gave you is solid enough. As to the 2nd, I don't think anybody who's really a serious physicist would disagree with it. The 3rd one is my opinion on the part that concerns position variables, but it's an opinion I can defend and I'm willing to discuss it with anybody who's interested.

-

crowded quantum information

Again, no. Nobody has definitively proved that non-locality is ruled out. If you had read what I posted before, you wouldn't be implying that I said what I didn't. In order to help you better understand the difference between what I "appear to be saying" and what I actually said: (My emphasis in boldface characters.) It may well be the case that some day somebody comes up with a model that is non-local, and produces the quantum correlations, which give no clue of non-locality anywhere, because they are correlations built into the quantum state when it was prepared at, say, t=0, (x,y,z)=(0,0,0). Of course, this person would be under the automatic obligation to explain why it is that no trace of this non-locality can be found in the real world. Why would someone pursue something so incredibly silly? I don't know. You tell me. A non-local field or particle theory of any kind would be very difficult to reconcile with relativistic causality. It would be miraculous that no non-local (and therefore relativistically non-causal) effect can be exploited, while all the while, the inner workings of the fields were non-local. What the famous impossibility theorems tell you is: If, for some reason, you wanted to assume that the eigenvalues (observed values) have been a function of some definite variables all the time, you would have to give up locality, or very deep principles of statistics (positivity of probabilities), or perhaps both. What Aspect et al. proved is not that there are no hidden variables. How can you prove the non-existence of something when you have no idea what it could be? What Aspect et al. proved is that the measured probabilities are exactly what quantum mechanics --in its closed mathematical form for angular momentum-- predicts. Even for the crucial case that Bell et al. proposed.
Because those predictions were confirmed, and John Bell proved that quantum mechanics involves something other than classical logic, we believe quantum mechanics is correct (about angular momentum, anyway) and non-locality was never necessary in the first place. Is that better?

-

What is the correct way to use a science based forum ?

None taken. If nothing else, you've proven to be respectful to others, and I appreciate that and would like to answer you in kind. Swansont has just given you an impeccable reasoning why your idea is probably very much misguided. Appealing to mathematics is absolutely essential. That doesn't mean you have to use the most powerful mathematical tools at hand. Many times it's good enough to do some sanity checks that go in the mathematical direction. Those are well known, and invaluable tools. Check for: units; orders of magnitude; simple arguments about symmetry (what depends on what, what shouldn't depend on what, etc.); approximations (this is small in comparison to that, etc.). Please keep in mind that, even though criticism from people who know more than you can be hard to swallow, it is a necessary step in building up to bigger things. They're actually doing you a favour.

-

What is the correct way to use a science based forum ?

That's where you're wrong --among many other places. Newtonian mechanics can be obtained from relativistic mechanics as an approximation. Classical relativistic, or non-relativistic, mechanics can be obtained from quantum mechanics as an approximation. Newton's theory of gravity can be obtained from general relativity as an approximation. Etc. In all of those theoretical steps, the reason why we believed the previous idea, but were unable to see the new one, is made transparent. Test number one for a new theory, even before it makes it to the laboratory, is that everything we already know is implied there, as an approximation. Then you must come up with a way to test how it could be proven wrong. The experiments are the other, and final, judge of whether your idea is sound. It doesn't matter how beautiful your idea is to you, or others. If it contradicts the experiment, it's out.
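
As one concrete instance of "the old theory is contained in the new one as an approximation," here is a small sketch comparing the relativistic kinetic energy, (gamma - 1) m c^2, with the Newtonian m v^2 / 2. The function name is hypothetical; the ratio depends only on beta = v/c, so mass and units drop out.

```python
import math

def kinetic_ratio(beta):
    """Ratio of relativistic to Newtonian kinetic energy at speed v = beta * c.

    gamma - 1 is computed as beta^2 / (root * (1 + root)), with
    root = sqrt(1 - beta^2), to avoid round-off at small beta.
    """
    root = math.sqrt(1.0 - beta**2)
    gamma_minus_one = beta**2 / (root * (1.0 + root))
    return gamma_minus_one / (0.5 * beta**2)

ratios = {beta: kinetic_ratio(beta) for beta in (1e-6, 0.01, 0.1, 0.5, 0.9)}
for beta, ratio in ratios.items():
    print(beta, ratio)
```

At everyday speeds the ratio is 1 to many decimal places, which is why Newtonian mechanics was believed for so long; the departure only shows up as beta approaches 1.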