Posts: 5375

Days Won: 52


Everything posted by Genady

-

Star Trek is here: US Navy shoots down drone with a laser.

Genady replied to StringJunky's topic in Science News

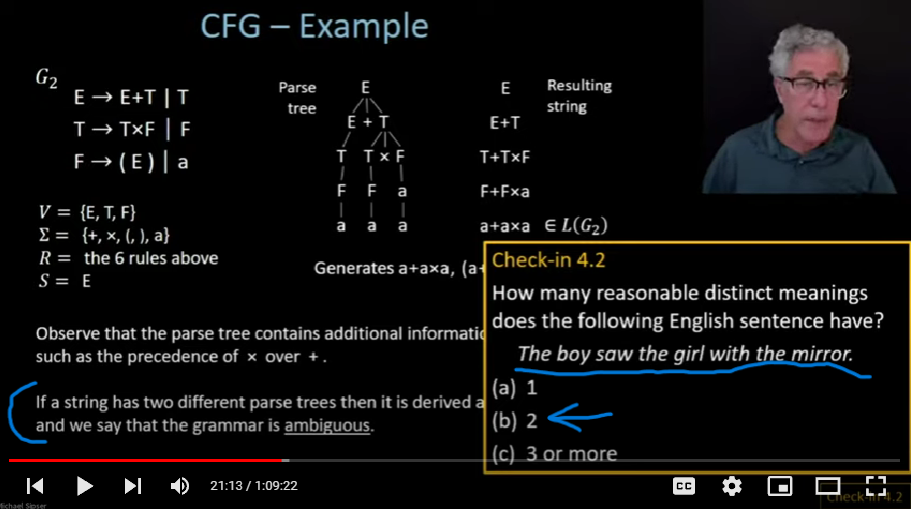

It can be interpreted, as intended, to mean that the Navy used a laser to shoot down a drone. But it can also be interpreted to mean that the Navy shot down a drone that had a laser on it. Here is a snapshot from an MIT lecture with a similar example.
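To make the two readings concrete, here is a minimal sketch: a CKY chart parser that counts parse trees over a toy grammar. The grammar is hypothetical, written only for a simplified headline ("navy shoots drone with laser", with "down" and the articles dropped). It finds exactly two trees: one where the prepositional phrase attaches to the verb phrase (the laser is the weapon) and one where it attaches to the noun phrase (the drone carries the laser).

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form, just enough for this headline.
BINARY = [  # (parent, left child, right child)
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # PP attaches to the verb: the laser is the weapon
    ("NP", "NP", "PP"),  # PP attaches to the noun: the drone carries the laser
    ("PP", "P", "NP"),
]
LEXICON = {"navy": {"NP"}, "drone": {"NP"}, "laser": {"NP"},
           "shoots": {"V"}, "with": {"P"}}


def count_parses(words: list[str], start: str = "S") -> int:
    """CKY chart parser that counts distinct parse trees rooted at `start`."""
    n = len(words)
    # chart[i][j][label] = number of ways words[i:j] can be parsed as `label`
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        for label in LEXICON.get(w, ()):
            chart[i][i + 1][label] += 1
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):  # every split point of words[i:j]
                for parent, left, right in BINARY:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


print(count_parses("navy shoots drone with laser".split()))  # 2
```

Without the prepositional phrase ("navy shoots drone") the same grammar yields a single tree, so the ambiguity comes entirely from where "with laser" attaches.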

Star Trek is here: US Navy shoots down drone with a laser.

Genady replied to StringJunky's topic in Science News

"shoots with laser" or "drone with laser"

Star Trek is here: US Navy shoots down drone with a laser.

Genady replied to StringJunky's topic in Science News

(This is OT, but so is the previous comment.) The title is a textbook example of language ambiguity.

Trolling (split from Quick Forum Questions)

Genady replied to Kittenpuncher's topic in Suggestions, Comments and Support

Exactly. I answer only to my conscience. I can express my POV if I want to. I can learn others' POVs and understand them if I want to. Nothing more can be done about them. If another person doesn't agree with my POV, it is not because they don't understand it, but because their POV is different. So there is no point in explaining my POV again and again, is there?

This relationship is needed because fields and particles not only exist inside a curved spacetime; they also tell spacetime how to curve.
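Assuming "this relationship" refers to Einstein's field equations (the question being answered is not quoted here), both directions of influence are visible in the equations themselves: the left side describes the curvature of spacetime, and the right side, the stress-energy tensor, describes the matter and fields that act as its source.

```latex
G_{\mu\nu} + \Lambda g_{\mu\nu} = \frac{8\pi G}{c^4}\, T_{\mu\nu},
\qquad
G_{\mu\nu} = R_{\mu\nu} - \tfrac{1}{2} R\, g_{\mu\nu}
```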

-

Yes, but I think (1) time was not compressed, and (2) IIRC, the universe was compressed to the size of a nucleus before inflation, when even the unified force did not exist yet; it supposedly appeared in the decay of the inflaton field, when the universe was about the size of a marble.

-

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

Going back to the OP's topic, I think it belongs in the biology forum rather than the philosophy forum.

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

There are MRI and other similar methods; many brains are already well studied, and re-examining the existing images might be sufficient. I don't know why they would look for more.

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

Where did I say that I would do any of this?

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

I'm sorry, but I still don't know what your question is. Can you just ask it directly, please?

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

Gained from what?

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

You're right, perhaps consciousness is different: more fundamental and, perhaps, more objective. For example, it may be the ability of a brain to take some of its own processes as input, while brains without consciousness process only inputs that arrive from elsewhere. In such a case, we might eventually find out which brain structures provide this ability and then look for similar structures in other creatures.

Let me be the first to announce the birth of a new science. Lee Smolin et al. explain it in a new paper, Biocosmology: Towards the birth of a new science.

-

The impact is obvious on this small island: when the measures go up, the numbers go down within 1-2 weeks; when the measures go down, the numbers go up within 1-2 weeks.

-

Many thanks. +1

-

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

Remember our discussion about free will a couple of months ago? My resolution is the same: just different reference frames.

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

This crawling neutrophil appears to be consciously chasing that bacterium:

I've thought of a test of whether an AI system understands human speech: give it a short story and ask questions that require interpreting the story rather than finding an answer stated in it. For example*, consider this human conversation:

Carol: Are you coming to the party tonight?
Lara: I’ve got an exam tomorrow.

On the face of it, Lara’s statement is not an answer to Carol’s question. Lara doesn’t say Yes or No. Yet Carol will interpret the statement as meaning “No” or “Probably not.” Carol can work out that “exam tomorrow” involves “study tonight,” and “study tonight” precludes “party tonight.” Thus, Lara’s response is not just a statement about tomorrow’s activities, it contains an answer and a reasoning concerning tonight’s activities.

To see whether an AI system understands it, ask, for example: Is Lara's reply an answer to Carol's question? Is Lara going to the party tonight, Yes or No? etc. I haven't seen this kind of test used for natural language processing systems. If anyone knows of something similar, please let me know.

*This example is from Yule, George. The Study of Language, 2020.
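A minimal sketch of how such a test could be encoded, assuming the system under test is wrapped as a function `answer(story, question) -> str`. That interface, the class names, and the `literal_matcher` baseline are all hypothetical, not any particular NLP library. The baseline only looks for an explicit "yes" or "no" in the story, so it fails both questions, which is exactly the gap the test is meant to expose.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class InferenceQuestion:
    question: str
    expected: set[str]  # acceptable answers, lower case


@dataclass
class InterpretationTest:
    story: str
    questions: list[InferenceQuestion]

    def score(self, answer: Callable[[str, str], str]) -> float:
        """Fraction of questions answered acceptably by answer(story, question)."""
        correct = 0
        for q in self.questions:
            reply = answer(self.story, q.question).strip().lower()
            if reply in q.expected:
                correct += 1
        return correct / len(self.questions)


party_test = InterpretationTest(
    story=("Carol: Are you coming to the party tonight?\n"
           "Lara: I've got an exam tomorrow."),
    questions=[
        InferenceQuestion("Is Lara's reply an answer to Carol's question? Yes or No?",
                          {"yes"}),
        InferenceQuestion("Is Lara going to the party tonight? Yes or No?",
                          {"no", "probably not"}),
    ],
)


def literal_matcher(story: str, question: str) -> str:
    """Hypothetical baseline: answers only from yes/no words stated in the story."""
    words = story.lower().replace("?", " ").replace(".", " ").split()
    if "yes" in words:
        return "yes"
    if "no" in words:
        return "no"
    return "unknown"


print(party_test.score(literal_matcher))  # 0.0
```

Exact matching on short answers is a deliberate simplification; a real evaluation would need a more forgiving comparison of free-text replies.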

-

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

It seems to me that the question then shifts to: what constitutes a thing?

We cannot explain to other humans the meaning of finite numbers either. How do you explain the meaning of "two"?

-

I don't know where it is, but I've heard it many times from mods: "Rule 2.7 requires the discussion to take place here ('material for discussion must be posted')."

-

The Consciousness Question (If such a question really exists)

Genady replied to geordief's topic in General Philosophy

Is such a test needed? Isn't everything conscious?

I remember, I had it too, except it was called something else. I don't remember what, but it was in Cyrillic. The metal parts looked exactly the same, but the architectural elements that look plastic here were wooden pieces in my case, which looked and felt even better. My mother was an architect and my father was a construction engineer; they made sure I got such stuff...

-

On the Origin of Hallucinations in Conversational Models: Is it the Datasets or the Models? (arXiv: 2204.07931)

In knowledge-based conversational AI systems, "hallucinations" are responses that are fully or partially factually invalid. It appears that AI produces them a lot. This study investigated where these hallucinations come from. As it turns out, a big source is the datasets used to train these AI systems. On average, only about 20% of the responses on which the systems are trained are factual, while the rest are hallucinated (~65%), uncooperative (~5%), or uninformative (~10%). On top of this, the systems themselves amplify hallucinations to about 70%, while reducing factual responses to about 11%, increasing uncooperative responses to about 12%, and reducing uninformative ones to about 7%. They are getting really human-like, evidently...
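A quick arithmetic check of the figures quoted in this post (its rounded approximations, not numbers recomputed from the paper): each breakdown sums to 100%, and comparing the two shows which categories the models amplify. The dictionary keys are labels chosen here for illustration.

```python
# Approximate response-category shares (%) as quoted in the post.
dataset = {"factual": 20, "hallucinated": 65, "uncooperative": 5, "uninformative": 10}
model = {"factual": 11, "hallucinated": 70, "uncooperative": 12, "uninformative": 7}

# Each breakdown should cover all responses.
assert sum(dataset.values()) == 100
assert sum(model.values()) == 100


def shift_report(before: dict[str, int], after: dict[str, int]) -> dict[str, tuple[int, float]]:
    """For each category: (change in percentage points, ratio after/before)."""
    return {k: (after[k] - before[k], round(after[k] / before[k], 2)) for k in before}


for category, (delta, ratio) in shift_report(dataset, model).items():
    print(f"{category:>13}: {delta:+d} pts, x{ratio}")
```

By these figures, factual responses fall to about 0.55 of their share in the training data, while uncooperative ones grow about 2.4-fold.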

-

OK, it might constitute part of the solution, like hair is part of a dog.