Everything posted by Genady

-

Hint: Internet search

-

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

IMO, the answer to the first question depends on how the memory is used. In the extreme case, when memory is added but the system does not use it, there is no entropy increase. Regarding the second question: a probability distribution of the memory contents has information entropy, and a probability distribution of the micro-states of the gas in the box has information entropy as well. This is my understanding. It would be interesting to run various scenarios and see how it works.

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

I don't know how deep it is, but I doubt that I'm overthinking this. I just follow Weinberg's derivation of the H-theorem, which in turn follows Gibbs's, which in turn is a generalization of Boltzmann's. The first part of this theorem is that dH/dt ≤ 0. That is, H is time-dependent, which means P(α) is time-dependent. I think that in order for H to correspond to a thermodynamic entropy, P(α) has to correspond to a well-defined thermodynamic state. In other states, however, it actually is "some kind of weird thing −∫ P(α,t) ln P(α,t)."

This is how I think it's done, for example. Consider an isolated box of volume 2V with a partition in the middle. The left half, of volume V, holds one mole of an ideal gas with total energy E. The right half is empty. Now imagine that we have infinitely many of these boxes. At some moment, we remove the partitions in all of them at once and then take snapshots of the state of the gas in all the boxes at a fixed time t. We get a distribution of states of the gas in the process of filling the boxes, and this distribution depends on t. This distribution gives us P(α,t).

The H-theorem says that, as these distributions change in time, the corresponding H decreases until it settles at a minimum value, when the distribution corresponds to a well-defined thermodynamic state. In that state H equals −S/k.
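The boxes thought experiment can be imitated numerically with a much cruder model than a mole of ideal gas. The sketch below is my own assumption, not Weinberg's derivation: it swaps in a lazy Ehrenfest urn (N particles, each in the left or right half; at every step one randomly chosen particle is put into a randomly chosen half) and computes H(t) = Σ P(α,t) ln P(α,t) exactly over all 2^N microstates, starting with every particle on the left. Because this transition rule is symmetric between microstates, H never increases and settles at −N ln 2 = −ln 2^N, i.e. −S/k for the uniform distribution over 2^N microstates. The function name `ehrenfest_H`, N = 20 and 400 steps are arbitrary, hypothetical choices.

```python
import math

def ehrenfest_H(N: int = 20, steps: int = 400) -> list[float]:
    """H(t) = sum over microstates alpha of P(alpha, t) ln P(alpha, t), t = 0..steps.

    Hypothetical toy model (lazy Ehrenfest urn): N particles, each in the left or
    right half of the box. At every step one particle is chosen uniformly at random
    and put into a uniformly random half (so with probability 1/2 nothing changes).
    """
    # Q[n] = probability that exactly n particles are in the left half.
    Q = [0.0] * (N + 1)
    Q[N] = 1.0  # partition just removed: every particle is still on the left
    history = []
    for _ in range(steps + 1):
        # By symmetry all C(N, n) microstates with the same n are equally likely,
        # so each of them has P(alpha) = Q[n] / C(N, n).
        history.append(sum(q * (math.log(q) - math.log(math.comb(N, n)))
                           for n, q in enumerate(Q) if q > 0))
        new_Q = [0.0] * (N + 1)
        for n, q in enumerate(Q):
            down = n / (2 * N)        # a left particle is picked and moves right
            up = (N - n) / (2 * N)    # a right particle is picked and moves left
            new_Q[n] += q * (1 - down - up)
            if n > 0:
                new_Q[n - 1] += q * down
            if n < N:
                new_Q[n + 1] += q * up
        Q = new_Q
    return history

h = ehrenfest_H()
print(h[0], h[-1], -20 * math.log(2))
```

With these settings h[0] is 0.0 (a single known microstate) and h[-1] is very close to −20 ln 2 ≈ −13.86. In this toy the intermediate H(t) values are well-defined numbers; whether they deserve to be called entropy is the question being discussed here.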

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

I looked in Steven Weinberg's book, Foundations of Modern Physics, for how he introduces the thermodynamic and the statistical-mechanical definitions of entropy and their connection, and I noticed what seems to be an inconsistency. Here is how it goes:
Step 1. The quantity H = ∫ P(α) ln P(α) dα is defined for a system that can be in states parametrized by α, with probability distribution P(α). (This H is obviously equal to the negative of Shannon's information entropy, up to a constant factor, log₂e.)
Step 2. It is shown that, starting from any probability distribution, a system evolves in such a way that the H of its probability distribution decreases until it reaches a minimal value.
Step 3. It is shown that the probability distribution reached at the end of step 2, with the minimal H, is an equilibrium probability distribution.
Step 4. It is shown that the minimal value of H reached at the end of step 2 is proportional to negative entropy, S = −kH.
Step 5. It is concluded that the decrease of H during step 2 implies an increase in entropy.
The last step seems inconsistent, because only the minimal value of H reached at the end of step 2 is shown to be connected to entropy, not the decreasing values of H before it reaches the minimum. Thus the decrease of H prior to that point cannot imply anything about entropy. I understand that entropy can change as a system goes from one equilibrium state to another. This means that the minimal value of H reached at one equilibrium state differs from the minimal value of H reached at another. But this difference between minimal values is not the same as H decreasing from a non-minimal value to a minimum.
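Steps 1 and 4 can be checked on a toy discrete distribution. This is an assumption for illustration: Weinberg's α is continuous, whereas here it is replaced by a finite set of states and the integral by a sum. The sketch computes H = Σ P ln P in nats, confirms that the Shannon entropy in bits equals −H·log₂e, and shows that a uniform distribution over W states gives H = −ln W, so S = −kH = k ln W, Boltzmann's formula for that case. The function names `h_nats` and `shannon_bits` are hypothetical.

```python
import math

def h_nats(p):
    """H = sum over states of P ln P (natural log), skipping zero-probability states."""
    return sum(x * math.log(x) for x in p if x > 0)

def shannon_bits(p):
    """Shannon entropy -sum P log2 P, in bits."""
    return -sum(x * math.log2(x) for x in p if x > 0)

# An arbitrary example distribution over four states.
p = [0.5, 0.25, 0.125, 0.125]
print(shannon_bits(p))                 # 1.75
print(-h_nats(p) * math.log2(math.e))  # 1.75 up to floating-point rounding

# Uniform distribution over W states: H = -ln W, hence S = -kH = k ln W.
W = 8
print(h_nats([1 / W] * W), -math.log(W))
```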

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

However, while pointing out some others' mistakes, he has clarified some subtle issues for me, and I appreciate this.

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

Regarding Ben-Naim's book, unfortunately it does in fact disappoint. I'm about 40% through, and although it has clear explanations and examples, it goes on too long and too repetitiously debunking various metaphors, especially in pop science. OTOH, these detours are easy to skip. The book could be shorter and would flow better without them.

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

Thank you for the explanation of indicator diagrams and of the introduction of thermodynamic entropy. Very clear! +1 I remember these diagrams from my old studies. IIRC, they sometimes also show regions of different phases and critical points. I didn't know, or didn't remember, that they are called indicator diagrams.

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

I'm reading this book right now. I saw his other books on Amazon, but this one was the first I picked. Interestingly, the Student's Guide book is next on my list; I guess you'd recommend it. I didn't see where you stated the simple original reason for introducing the entropy function, and I hadn't heard of indicator diagrams... As you can see, this subject is relatively unfamiliar to me. I had only a general idea from short intros to thermodynamics, statistical mechanics, and information theory about 45 years ago. It is very interesting now that I am delving into it. Curiously, I never needed it during a long and successful career in computer systems design. So, not 'everything is information' even in that field, contrary to some members' opinion...

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

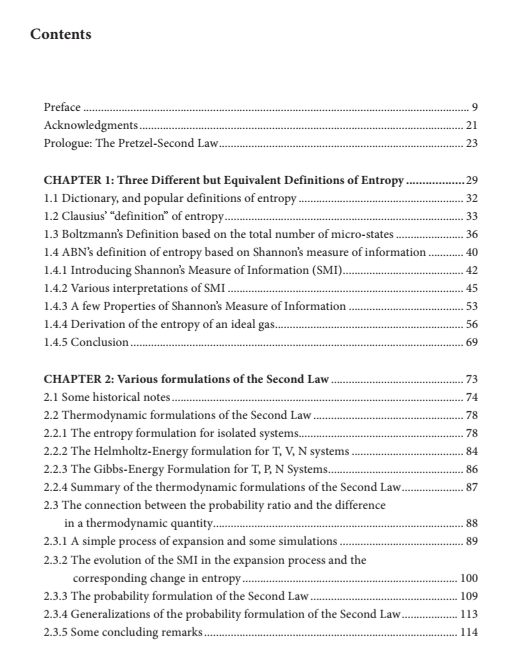

I got it from Amazon US on Kindle for $9.99. Here is a review from the Amazon page: The contents: Hope it helps. Glad to assist as much as I can.

How can information (Shannon) entropy decrease ?

Genady replied to studiot's topic in Computer Science

Since this thread has something to do with entropy (😉), I hope this question is not OT. Are you familiar with this book: ENTROPY: The Greatest Blunder in the History of Science by Arieh Ben-Naim?

I don't have or use one. I think I'm the only person on this island who doesn't.

-

My apartment door was spray-painted with the word "traitor" when I was getting ready to leave the USSR back in the '70s. Somebody somehow knew this, without social media and without my advertising the fact. Compare: Putin likens opponents to 'gnats,' signaling new repression - ABC News (go.com)

-

Elements of Combinatorial Analysis

Genady replied to Dhamnekar Win,odd's topic in Analysis and Calculus

The probability of finding m empty cells when you have r+1 objects is the sum of two terms: the probability of finding m empty cells when you have r objects and the additional object is placed in one of the occupied cells, plus the probability of finding m+1 empty cells when you have r objects and the additional object is placed in one of the empty cells.
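The recursion above can be written down and checked, assuming (this is not stated in the post) n cells and each object placed independently into a uniformly random cell. With p_m(r) the probability of exactly m empty cells after r objects, it reads p_m(r+1) = p_m(r)·(n−m)/n + p_{m+1}(r)·(m+1)/n. The sketch iterates this in exact fractions and compares it with brute-force enumeration of all n^r placements; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def empty_cell_probs(n: int, r: int) -> list[Fraction]:
    """p[m] = P(exactly m of n cells are empty after r objects are placed),
    assuming each object independently lands in a uniformly random cell."""
    p = [Fraction(0)] * (n + 1)
    p[n] = Fraction(1)  # r = 0: every cell is empty
    for _ in range(r):
        new_p = [Fraction(0)] * (n + 1)
        for m in range(n + 1):
            # m cells were empty and the new object lands in one of the n - m occupied cells
            stay = p[m] * Fraction(n - m, n)
            # m + 1 cells were empty and the new object lands in one of those m + 1 cells
            fill = p[m + 1] * Fraction(m + 1, n) if m < n else Fraction(0)
            new_p[m] = stay + fill
        p = new_p
    return p

def brute_force(n: int, r: int) -> list[Fraction]:
    """Same probabilities by enumerating every placement of r objects into n cells."""
    counts = [0] * (n + 1)
    for placement in product(range(n), repeat=r):
        counts[n - len(set(placement))] += 1
    return [Fraction(c, n ** r) for c in counts]

print(empty_cell_probs(3, 2))  # → [Fraction(0, 1), Fraction(2, 3), Fraction(1, 3), Fraction(0, 1)]
```

For 3 cells and 2 objects, the two objects share a cell in 3 of the 9 placements (two cells empty) and occupy different cells in the other 6 (one cell empty), which matches the output.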

Yes, my professor of biological statistics was very explicit about these studies. Here is a recent example of a study with far-fetched, unwarranted, and completely speculative conclusions: Researchers uncover how the human brain separates, stores, and retrieves memories | National Institutes of Health (NIH)

-

My suspicions have been confirmed. When I studied cognitive neuroscience, and when I read news in this area, it was and still is very disconcerting to see how often far-fetched conclusions about causation are drawn on the shaky basis of small sample sizes, a multitude of uncontrolled parameters, and correlations only. This new study confirms that, more often than not, these results are unreliable and unreproducible. A new research paradigm is needed to move this science out of stagnation. Brain studies show thousands of participants are needed for accurate results | University of Minnesota (umn.edu)
