-

Both Jim Keller and Jensen Huang said it would take less than $1T to do what Sam Altman claims to need $7T for.

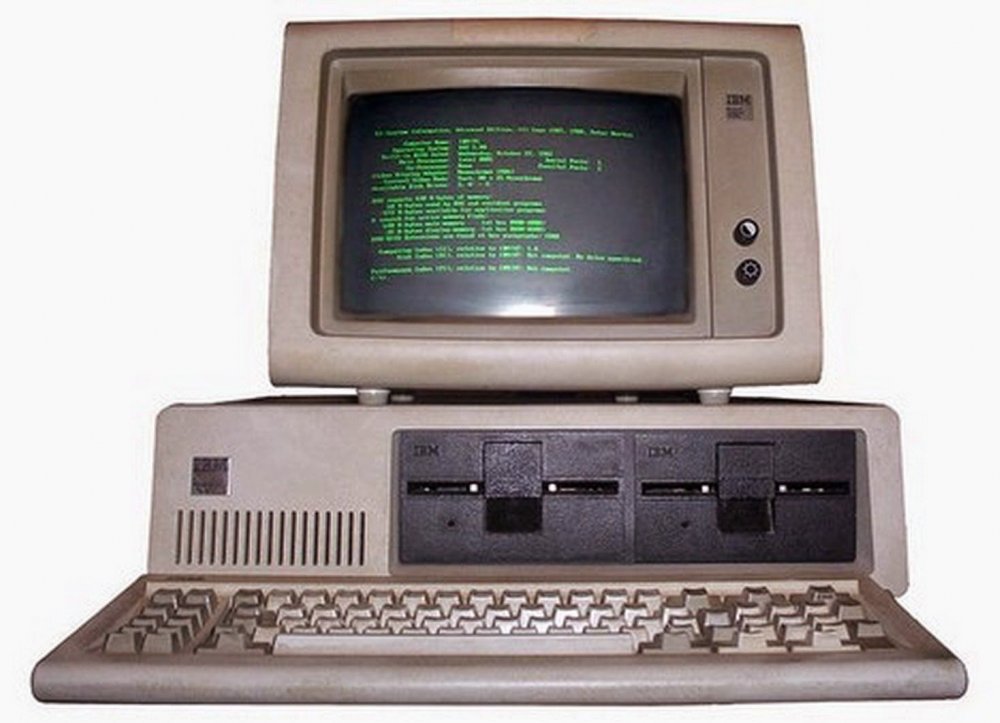

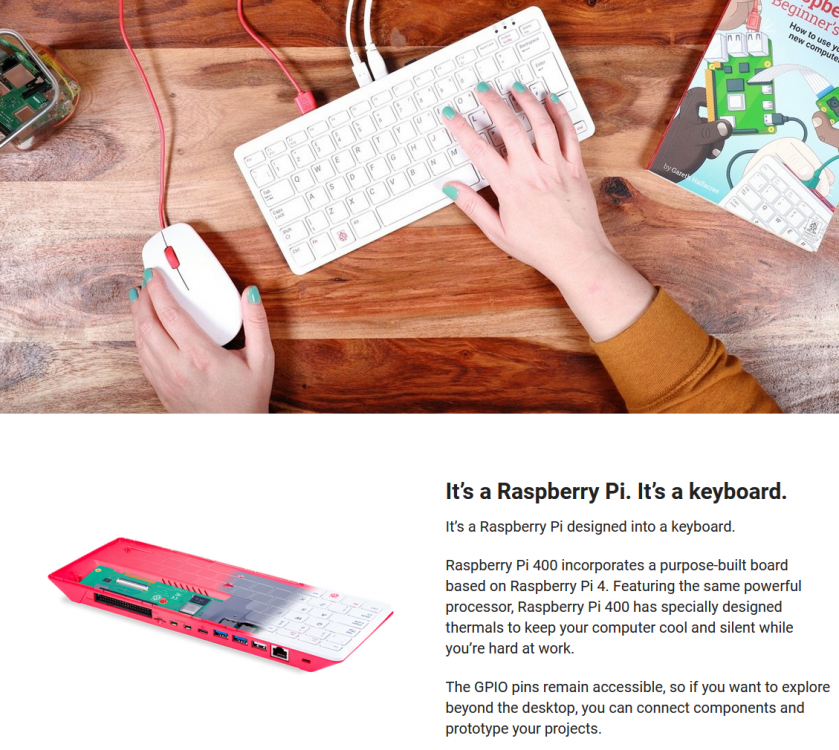

https://www.tomshardware.com/tech-industry/artificial-intelligence/jim-keller-responds-to-sam-altmans-plan-to-raise-dollar7-billion-to-make-ai-chips

They are being very conservative, but more on that in a moment. I presented many figures on current silicon EDA and manufacturing in another thread; feel free to look them up there. There are also some in the article above. None of them are anywhere near even $1T, much less $7T. There isn't a viable route to claiming ignorance over some sort of invisible plan, because what I presented were the ABSOLUTE MAXIMUM numbers. Why? Because the implementation of new technologies (software/hardware/design/manufacturing) brings cost REDUCTION, not INCREASE.

If you want to be somewhat technical about this on just one vector alone, you can look at something like Moore's Law, from one of Intel's founders: https://www.intel.com/content/www/us/en/newsroom/resources/moores-law.html#gs.6gfswq

What does that all mean? The number of devices on a chip increasing at an exponential rate, combined with a minimal rise in cost, produces a much lower cost per unit of computing performance. Let's compare with a current example:

This was the first computer I owned as a kid, an IBM PC running at 4.77MHz, powered by an Intel 8088. It had 256k of RAM, which made it an advanced model with its memory banks maxed out. My father paid about $3000 for it, and I remember taking off the cover to marvel at all the chips on the motherboard. The whole thing was heavy as HECK, and the keyboard itself weighed almost as much as an actual typewriter. It displayed one color.

This is the Raspberry Pi 400. The actual motherboard is much smaller than the keyboard footprint. It's powered by a Broadcom BCM2711 quad-core Cortex-A72 (ARM v8) 64-bit SoC at 1.8GHz, with 2 HDMI ports capable of 4K resolution in full color. The price? $77.

"But what about R&D?" Sure, what about it? Look up a pure development house like Nvidia or AMD. They're not as big as Samsung, of which I've already given the numbers...

My personal number? Actually less than $300B. I'm not making that up; I'm simply going by the numbers I've already listed. Go look at them again. If that's not enough, look at Microsoft's number and add it to Amazon's. It's not as if the current AI capacity of the world right now is running on NOTHING...
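To put a rough number on the IBM PC versus Raspberry Pi 400 comparison above, here is a minimal sketch using only the prices, clock speeds, and core count quoted in the post. Dollars per core-MHz is my own crude proxy (the function name is made up for illustration) and not a real benchmark: it ignores inflation, word width, and instructions per clock, all of which would likely widen the gap further.

```python
# Figures quoted in the post above.
IBM_PC_PRICE_USD = 3000.0   # "about $3000"
IBM_PC_CLOCK_MHZ = 4.77     # Intel 8088, single core
PI400_PRICE_USD = 77.0
PI400_CLOCK_MHZ = 1800.0    # 1.8GHz
PI400_CORES = 4             # quad-core Cortex-A72


def dollars_per_core_mhz(price_usd: float, clock_mhz: float, cores: int = 1) -> float:
    """Price divided by aggregate clock rate (assumed proxy, not a benchmark)."""
    return price_usd / (clock_mhz * cores)


ibm_cost = dollars_per_core_mhz(IBM_PC_PRICE_USD, IBM_PC_CLOCK_MHZ)
pi_cost = dollars_per_core_mhz(PI400_PRICE_USD, PI400_CLOCK_MHZ, PI400_CORES)
improvement = ibm_cost / pi_cost

print(f"IBM PC: ${ibm_cost:,.2f} per core-MHz")
print(f"Pi 400: ${pi_cost:.4f} per core-MHz")
print(f"Cost per core-MHz fell by a factor of about {improvement:,.0f}")
```

Even on this deliberately crude measure, the cost per unit of raw clock rate drops by several orders of magnitude, which is the direction of the cost-reduction argument above.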

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

...Because you don't yield to evidence, and on top of that you have the gall to ironically keep that "bad faith" accusation going. You're even worse than the average sophist I run into online. Time to ask yourself why you even have this place up.

-

Artificial Consciousness Is Impossible

All of the above hasn't even touched the point of contention that opened the entire topical can of worms: the assumption that generative AI "takes inspiration" from previous works the same way humans do (yikes). No wonder nobody cares how artists, writers, and even actors are exploited or stand to be ripped off (that was what the SAG strike was about; see also the ongoing New York Times versus OpenAI lawsuit, and the new Nvidia lawsuit as well). I have my own personal experiences with Stable Diffusion to lean on for that. More on it later...

-

Artificial Consciousness Is Impossible

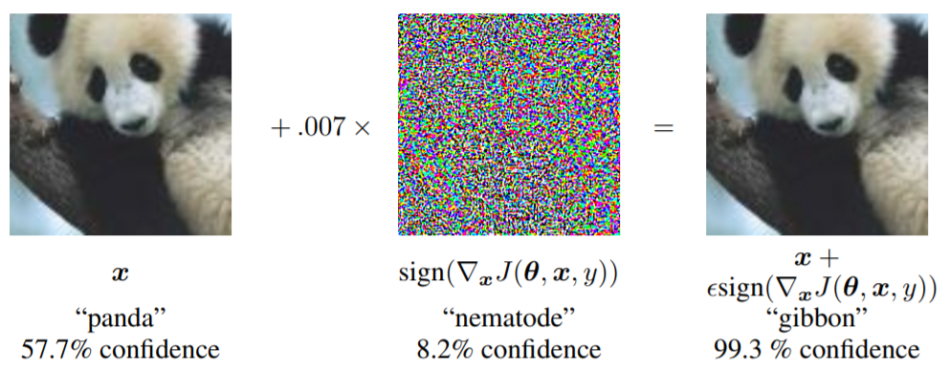

The past couple of weeks I've been in a series of debates with a depressingly large number of people who all hold one misconception in common: "The human brain works just like (machine) neural networks."

I don't know where the heck they got that idea from. Is it from Hinton? I suspect not, but more on that later. It can't be just from the word "neural," right? Because there's nothing "neural" about neural networks. Even on the most basic level, such a gross correlation couldn't be established: https://www.theguardian.com/science/2020/feb/27/why-your-brain-is-not-a-computer-neuroscience-neural-networks-consciousness

Looking at the infamous panda adversarial example, NNs evidently don't deal with specific concepts. Here, a signal from an image, translated into a mathematical space and attached to the text label "panda," is combined with the signal->space of a second image that's invisible to the naked eye. The result is an image whose mathematical space is identified as corresponding to the text label "gibbon," with an even higher degree of match. That's not "meaning," that's not anything being referred to; that's just correspondence.

People keep saying "oh humans make the same mistakes too!" No... The correspondences are not mistakes. That's the way the NN algorithm is designed from the start to behave. If you have those same inputs, that's what you get as the output. Human reactions are not algorithmic outputs. Human behavior isn't computational (and is thus non-algorithmic). Goedelian arguments themselves are used to demonstrate assertions similar to mine (see section 7 of Bishop's paper): https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2020.513474/full

The fly brain neural probing experiment I mentioned in my opening article lends support to the idea that at least fly brains aren't algorithmic. I don't think there's good reason to believe that the human brain is going to categorically deviate.

Now, on to the subject of Hinton. I think I'd be giving people too much credit if I pegged their thinking to Hinton (as if they all follow him or something), but even if so, Marcus has shown Hinton's POV to be just flat-out wrong so many times already that I wonder why people never consider changing their minds even once: https://garymarcus.substack.com/p/further-trouble-in-hinton-city

Even directly looking at a Hinton lecture, I could spot trouble without going very far into one. While I acknowledge Hinton for his past achievements, he is completely guilty of "when all you have is a hammer, everything looks like a nail." Apparently he thinks EVERYTHING is backprop when it comes to the brain. This isn't as cut and dried as he makes it out to be at all, as my previous quote regarding supposed "neural coding" showed. Marcus says Hinton's understanding of the matter is SHALLOW, and I agree.

In the video lecture below, Hinton completely hand-waved the part about how numbers are supposed to capture syntax and meaning. Uh, they DON'T, as the above "Panda" example shows. If people can be arsed to watch the rest and can show how he rescued himself from that blunder, please go right ahead... https://www.youtube.com/watch?v=iHCeAotHZa4

(...and the behavior of the audience itself is laughable. They laughed at the Turing Test "joke"... so I guess behaviorism is a good criterion? That's their attitude, correct? "Seriously, these people are not-so-updated if they think that way; maybe they're just laughing to be polite?" was my reaction.)
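The mechanics of the panda example discussed earlier in this post can be illustrated with a toy. What follows is a minimal sketch under loud assumptions: a made-up linear scorer with one weight per pixel stands in for the network, the "image" is a hypothetical list of 1000 intensities, and the perturbation follows the gradient-sign idea (step each pixel slightly against the sign of its weight). None of the numbers come from the actual panda image or model.

```python
import math

N_PIXELS = 1000   # hypothetical image size
EPSILON = 0.03    # hypothetical per-pixel step on a 0..1 intensity scale

# Hypothetical toy "network": one weight per pixel.
# A positive score maps to "panda", a negative score to "gibbon".
weights = [0.01 if i % 2 == 0 else -0.01 for i in range(N_PIXELS)]
image = [0.52 if i % 2 == 0 else 0.50 for i in range(N_PIXELS)]


def score(pixels):
    return sum(w * p for w, p in zip(weights, pixels))


def classify(pixels):
    """Return (label, degree of match) for the toy classifier."""
    s = score(pixels)
    match = 1.0 / (1.0 + math.exp(-abs(s)))  # logistic of the score magnitude
    return ("panda" if s > 0 else "gibbon"), match


def sign(value):
    return (value > 0) - (value < 0)


# For a linear score, the gradient with respect to each pixel is its weight,
# so stepping against sign(weight) lowers the "panda" score fastest per pixel.
adversarial = [p - EPSILON * sign(w) for p, w in zip(image, weights)]

original_label, original_match = classify(image)
adversarial_label, adversarial_match = classify(adversarial)
largest_change = max(abs(a - p) for a, p in zip(adversarial, image))

print(original_label, round(original_match, 3))
print(adversarial_label, round(adversarial_match, 3))
print("largest per-pixel change:", round(largest_change, 3))
```

In this toy, no pixel moves by more than about 3% of the intensity range, yet the label flips and the degree of match goes up, and running it again on the same inputs gives the same outputs every time. That is the "correspondence, not mistake" behavior described above, at least for this simplified stand-in.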

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

I'm not the one arguing in bad faith. I'm the one who presented all the numbers on other engineering projects as well as silicon fabs. Where are your supporting arguments, apart from using the word "lots" a couple of times? Next thing I know, you're going to say people like Jim Keller are making bad-faith arguments. https://www.tomshardware.com/tech-industry/artificial-intelligence/jim-keller-responds-to-sam-altmans-plan-to-raise-dollar7-billion-to-make-ai-chips

I have solid numbers to back myself up. You blow a lot of smoke. Are you sure you are setting the highest possible example for this forum?

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

AFAIK nobody has done the math on the ecological disaster that $7T would bring about, but there is some noise being made about it starting with Hugging Face's climate lead: https://venturebeat.com/ai/sam-altman-wants-up-to-7-trillion-for-ai-chips-the-natural-resources-required-would-be-mind-boggling/

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

Because TSMC has 12 fabs that are processor fabs, Samsung about 5, and Intel has 17. That's what... 34. Let's round that up to 50 or something. Still not even a tenth.

Look inside your own computer. What processors are there? If you have an AMD rig, everything's TSMC. Nvidia cards are either TSMC or Samsung. Intel stuff is Intel and some TSMC. Same with anything that goes on server racks.

10x the capacity of the entire world just for AI workload. Uh, no... There are reasons why not. First, I'd like to mention that existing infrastructure already handles AI: that's the Amazon guys with the servers they rent out, but OpenAI is probably going to go with Microsoft's own Azure infrastructure first (...and all the Bing/"MS Copilot" stuff already runs there...)

No. Not incredulity. It's just how much it's not gonna take.
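For anyone who wants to check the fab arithmetic, here is a minimal sketch. The fab counts come from the post above; the $20B-per-fab and roughly $300B Samsung figures are the ones I quote elsewhere in this thread. Treating the whole $7T as if it went purely into fab construction is a simplifying assumption made only for the sake of comparison.

```python
# Fab counts as given in the post above.
PROCESSOR_FABS = {"TSMC": 12, "Samsung": 5, "Intel": 17}
ROUNDED_UP_FABS = 50       # the post's generous round-up

# Figures quoted elsewhere in this thread.
COST_PER_FAB_USD = 20e9    # "Each fab is $20B"
SAMSUNG_USD = 300e9        # "Samsung itself is about $300B"
ASK_USD = 7e12             # the $7T under discussion

counted_fabs = sum(PROCESSOR_FABS.values())
fabs_for_ask = ASK_USD / COST_PER_FAB_USD      # assumes all money goes to fabs
samsungs_for_ask = ASK_USD / SAMSUNG_USD

print(f"Counted processor fabs: {counted_fabs}")
print(f"Fabs that $7T covers at $20B each: {fabs_for_ask:.0f}")
print(f"Multiple of counted fabs: {fabs_for_ask / counted_fabs:.1f}x")
print(f"Multiple of rounded-up fabs: {fabs_for_ask / ROUNDED_UP_FABS:.1f}x")
print(f"Multiple of Samsung: {samsungs_for_ask:.1f}x")
```

On these inputs the ask covers hundreds of fabs, several times over even the rounded-up count of 50, and a bit over twenty Samsungs.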

-

What are you listening to right now?

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

I'm arguing from evidence. Each fab is $20B. Samsung itself is about $300B.

"Someone is trying to commit fraud, but that's okay because people will see through it" doesn't make a defense, sorry.

I'm not following yours. Which part of the following quote of his from the article don't you understand? Specifically, which part of it implies scaling up projects to the tune of trillions of dollars?

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

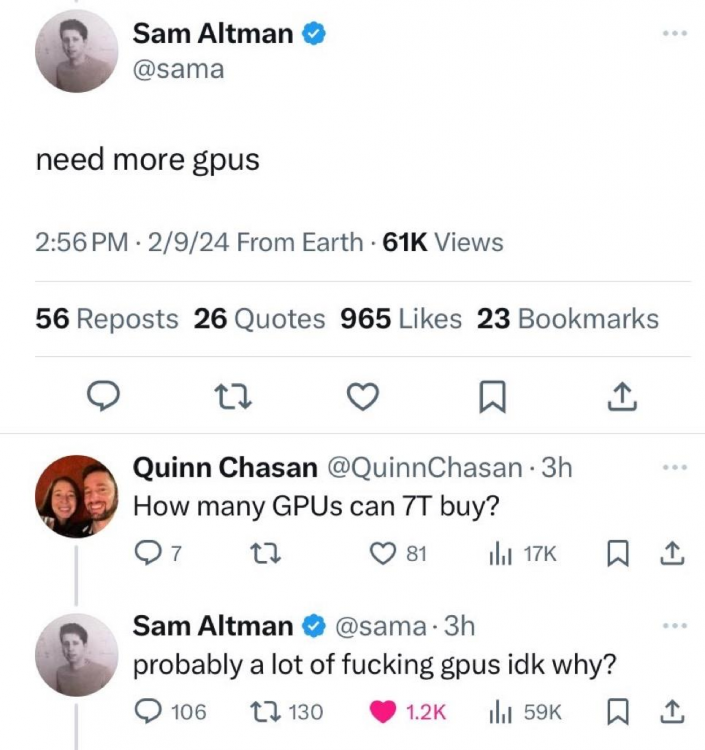

https://www.wired.com/story/openai-ceo-sam-altman-the-age-of-giant-ai-models-is-already-over/

$7T points to not "giant" but "beyond gargantuan."

Hey, what the heck? I was gonna link the following smoking-gun tweet that someone forwarded to me in HIS SUPPORT of Altman, and Altman DELETED IT! Why?? That's not nice at all!!

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

(I saw TheVat quoting you, forgot I still have you on block, unblocked)

I don't think anyone who is outside of the industry, whether north/south/east/west, would or perhaps even could comprehend the scale. A colleague joked to me about fusion power, and I kinda doubt that's enough even if it outputs 10x. The monster data-center network alone, used to model-condition (hate using the word "train" in reference to machines) and respond with, uh... $7T worth or even $1T worth of hardware, is on the level of the entire power usage of medium-sized COUNTRIES, not just cities as in the case of power-gobbling crypto-mining farms.

I'm going to respond with the same sort of line I used in the first thread. Let me put it this way. Someone asks you for $7000 for something that could be done for much less than $500, on top of the fact that he already acknowledged elsewhere, to someone else, that spending money on that particular thing would be useless. Are you still not going to call this a grift?

It doesn't jibe with his very own rhetoric. He himself already said scaling up isn't going to help.

Let's put aside any of his rhetoric for a moment; the sheer scale is already off the chart. Nothing, literally nothing, takes nearly that much.

NASA Space Shuttle program from start to finish: $209 billion (2010 dollars), including 134 flights https://www.space.com/12166-space-shuttle-program-cost-promises-209-billion.html

...that's start to finish. Not just start.

If you're talking "moon shot," well, that's still not it. The entire Apollo program, 1960-1973, was $257 billion (2020 dollars) https://www.planetary.org/space-policy/cost-of-apollo

No... you're not waving this one off.

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

Okay. Could you state what the hyperbole was?

-

I'm gonna make this clear. Someone is using a definition of "trolling" that I'm not familiar with.

Thanks, +1 for confirming. Still didn't like what you said in the other thread when I gave it a neg.

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

Let's be generous and discount his tweet, and suppose that he's going to make his own manly fabs (ref. what ex-AMD CEO Sanders said a long while back). That's still 20X Samsung. How is that part "hyperbole"?

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)

First part here:

Now for the second part, since it's allegedly "non-interesting." I do find it "interesting" how Sam is going to a foundry now, complete with a Government wig who's tagging along: https://www.intel.com/content/www/us/en/events/ifs-direct-connect.html

Now think about this for half a minute. He's not even building anything from the ground up. There have been zero indications of that. Every indication he's given so far points to "ready made" sources (see above post), either buying hardware outright (the GPU kits he tweeted about) or going to a foundry to procure silicon. It is doubly puzzling/"interesting" why he's asking $7 TRILLION for this endeavor, since NOT going ground-up costs SIGNIFICANTLY less than supplying yourself from your own fabs AND your own process tech R&D (such reasons are why Samsung, Intel, and IBM are basically the ONLY semiconductor verticals left in the whole world after AMD jettisoned theirs a while back).

The question remains: what in the world is the Samster gonna do with even ONE trillion (#1 Samsung is only about a third of that trillion...), let alone SEVEN trillion? For reference, TSMC is "only" spending 40 billion in Arizona, and that's spread across TWO FABS, a project that's already huge in every way (21k construction jobs, world's biggest cranes that couldn't fit on a 2-lane, etc.): https://www.azcentral.com/story/money/business/2022/12/07/what-to-know-phoenix-taiwan-semiconductor-factory/69707994007/

Like the neurons vs transistors comparisons I've done before, I'm going to be extremely generous in this comparison:

Ignore that Samsung has its own fabs already, i.e. just ignore all those manufacturing plants, okay?

Ignore the fact that Samsung makes a whole load of other silicon stuff than just processors.

Ignore everything Samsung has built up over the decades, outside of investor valuation.

Instead of adding anything to OpenAI, just do separate new stuff, no creating verticals or anything, despite any advantages of doing so and the waste/opportunity costs of not doing it.

OpenAI / the Samster is going to create a whole new R&D company that's about 20X the size of Samsung (despite what he said about the uselessness of scaling up LLMs... guess he just did a huge 180 and is going to double/triple/quadruple down on the opposite...) and farm out the results of that design to existing foundries.

Some design... Why doesn't he work on a quantum computer instead? Why doesn't he invest in exotic materials research (current foundries are more interested in refining processes. Sure, they work with universities and all, but that's hardly the same thing as pouring the entire company's process research resources into it!)??

Massively, massively FRAUDULENT "developmental posture."

The Samster said he's focused on raising funds from the UAE (United Arab Emirates...), so he's counting on the desert oil barons and UAE royal family members there to fall for his money-draining scheme?

==============================

Let's give him the benefit of a sliver of doubt that he's actually going to build this "20X Samsung Uber Designhouse" that's going to eventually pump out silicon from the likes of Intel. What actual good is this going to do, in general?

Gary Marcus wrote an open letter to Sam Altman regarding this question. https://garymarcus.substack.com/p/an-open-letter-to-sam-altman

Uh, I'd say that Altman cares for exactly none of those things. He's trying to raise trillions and pay himself billions.

-

Sam Alman is a CROOK, part deux (now broadcasting in the correct subforum)
