Everything posted by Killtech

-

The meaning of constancy of the speed of light

Hmm, I think you misunderstand the intention of my thread. The SI system and its definitions are perfectly fine for the purpose of enabling measurements with maximum accuracy. The same goes for GR and its interpretations, which are built to work with the SI system. The question is about how we interpret the physical laws that establish the constancy of the speed of light in vacuum. From these assumptions we derive which physical quantities we set as constant and define their values. This differs from other physical assumptions because it creates a situation where there cannot even exist an experimental way to disprove these assumptions. You brought the term gauge freedom into the conversation before, and indeed it is very appropriate here. Whether we define the meter via c or via an atomic transition wavelength, we can deduce that this will produce the same gauge. For a different gauge we have to look further. The invariance of Maxwell's equations is a gauge of SR, but LET indeed uses another one. That is not entirely true, because there are known cases that contradict this. Consider the twin paradox in a global geometry of a cylinder without any curvature. In this case the twins travelling in their inertial frames will meet periodically and are able to check who is older. Each twin can also send light signals in both directions around the cylinder and wait to see which one comes back first, thereby measuring the one-way speed of light. There are papers exploring this case if you are interested. In fact, if the global geometry has any kind of features, that is, if it is not just the flat unbounded \( \mathbb{R}^4 \), we can use these features to measure the one-way speed of light. So there is really only one special case where the one-way speed is not measurable.
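A minimal sketch of the cylinder argument, in units with c = 1; the circumference L, the twin's speed v and the choice of reference frame are illustrative assumptions. Working in the frame in which the cylinder's identification is made at equal times, the signal sent forward has to catch up with the moving twin while the one sent backward meets the twin head-on, so on the twin's own clock they return at different times. The difference works out to \( 2Lv\gamma/c^2 \), which reveals v:

```python
import math


def return_times(v: float, L: float = 1.0, c: float = 1.0) -> tuple[float, float]:
    """Proper times (on the moving twin's clock) after which light signals sent
    forward and backward around a flat cylinder of circumference L come back.

    Assumption: v is the twin's speed relative to the frame in which the
    identification x ~ x + L is made at equal coordinate time.
    """
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    # Coordinate times in that frame: the forward signal catches up with the twin
    # after going once around, the backward signal meets the twin head-on.
    t_forward = L / (c - v)
    t_backward = L / (c + v)
    # Convert coordinate time to the twin's proper time.
    return t_forward / gamma, t_backward / gamma


print(return_times(0.0))  # twin at rest in that frame: both signals return together
print(return_times(0.5))  # moving twin: the forward signal returns later
```

Any twin who finds the two return times unequal has thereby detected motion relative to the frame the topology singles out.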

-

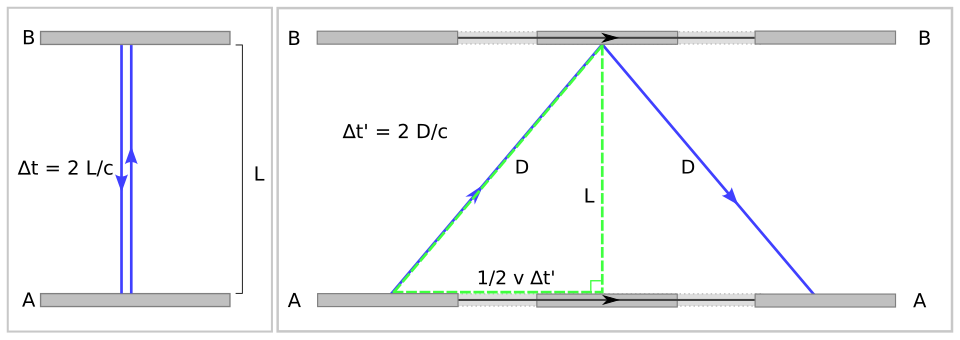

The effect does not cancel out entirely in the special case where we consider a regional variation of c. Even so, it cancels out for most things, so it would only be indirectly measurable, namely as a curvature of spacetime. This would render it indistinguishable from gravity and thus offer an alternative interpretation of it. This might not be too surprising, though, given that one can alternatively model gravitational lensing of light in vacuum by a refractive index. Not entirely. Atoms inside a strong electric field are different from atoms outside of it. They change depending on the regional conditions. You are comparing it to the wrong standard. Consider this: when you look at the acoustic wave equation, you can agree that for every frame there obviously exist coordinates such that the speed of sound, expressed as a quotient of those coordinates \( \frac{\Delta x}{\Delta t} \), yields the same constant value. Now one could ask whether there are physical quantities that correspond to these coordinates, and indeed we can find them. To build a clock we need an oscillator, so what would happen if we were to use a fixed-frequency sine-wave sound emitter for that? What happens to that frequency when we are in a frame in which the air is in motion? The Doppler effect will change the original frequency, so a clock whose time standard bluntly assumes this frequency to be a fixed value will slow down. To visualize what that means for a measurement of the periods of that oscillation: on the left, the clock is at rest in the frame of the air; on the right, it is in a frame where the air is in motion. This reconstructs the coordinate t in our frame. As for x, we can measure distance using a sonar device, but we additionally express distance as the space traversed by a sonic signal within a time span given by our new acoustic clock.
What will we expect to measure when we use those devices to measure the speed of sound, in terms of acoustic distance per sonic signal period?
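To make the question concrete, here is a minimal sketch of what a naive sonar range finder reports when the air moves past it. It assumes uniform, non-dispersive air, a device that converts the echo time T into distance as c·T/2 with the still-air speed of sound c, and illustrative numbers. Along the wind the reading is inflated by \( \gamma^2 \), across it by \( \gamma \), with \( \gamma = (1 - u^2/c^2)^{-1/2} \); this is the acoustic counterpart of the Michelson-Morley arm times:

```python
import math


def sonar_round_trip(d: float, c: float, u: float, along_wind: bool = True) -> float:
    """Round-trip time of a sonar ping to a target at distance d when the air
    moves past the device at speed u (assumed uniform and non-dispersive; c is
    the speed of sound relative to the air)."""
    if along_wind:
        # Against the wind on one leg, with the wind on the other.
        return d / (c - u) + d / (c + u)
    # Across the wind the ping must be aimed upstream; its net speed is sqrt(c^2 - u^2).
    return 2.0 * d / math.sqrt(c * c - u * u)


def naive_range(d: float, c: float, u: float, along_wind: bool = True) -> float:
    """Distance a device reports if it assumes still air: c * T / 2."""
    return c * sonar_round_trip(d, c, u, along_wind) / 2.0


# Illustrative numbers: 100 m target, c = 343 m/s, 20 m/s wind.
print(naive_range(100.0, 343.0, 20.0, along_wind=True))   # gamma^2 * 100 m
print(naive_range(100.0, 343.0, 20.0, along_wind=False))  # gamma * 100 m
```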

-

Which still produces the same issue, because you still have to claim something else to be constant, and in this case something that still behaves the same in the context of relativity, hence you cannot expect it to affect it. But consider this. Yes, exactly. And it is worthwhile to explore the implications of the fact that we are always forced to define some physical quantities as constant values, and that all our previous observations were always based on similar assumptions about the constancy of other physical quantities. Within such a system we cannot exclude the possibility of those physical quantities actually changing. On the other hand, this doesn't matter: if in our model a physical quantity is described in units of itself, then of course it must remain perfectly constant. So the constancy of these physical quantities is not actually a property of reality but simply a convention or interpretation that we use. So let's apply this logic to the speed of sound (restricted to some ideal system where the refractive index does not depend on frequency) and see what happens if we claim it to be constant and hence define measurements and physical laws based on such an assumption. Sure, the resulting theory will look different. But it will still make the same predictions, hence it will just be a different interpretation of current physics.

-

The unit definition is an intermediary step between experimental measurements and what corresponds to proper time in the model. This is explained in the OP. In the OP I also mentioned Poincaré's critique of these interpretations and of the assumption that c is constant, specifically of how that observation was obtained. It was also he who brought LET to its final form, which made it equivalent to SR at that time. As mentioned in the OP, any attempt even to think of a variation of c causes logical issues. The obvious one: speeds are given in meters per second, and the meter is the distance light travels through vacuum in a specified time, which does not allow for any variation of c in any measurement, does it? That problem should be easy to understand. Something similar applies to the second, but less obviously and with more impact. Within the SI system and SR you cannot resolve this properly to even find something to look for experimentally. The considerations I made really amount to applying classical QM to the Maxwell equations of LET to understand what happens to atomic energy levels. And all you get is that atoms have to react to a movement against the aether just so that an atomic clock gets slower, exactly as SR predicts. Additionally we can extend it with a refractive index for the vacuum and get more interesting results. But all this leads to is not even a counter-hypothesis, just a different interpretation of the same physics. For those who understand how LET and SR relate to each other, this should be quite simple to understand. Where it gets more interesting is when we consider the speed of light depending additionally on the region. That is something LET did not cover. However, it is still good enough to make similar considerations under the assumption that the regional variation appears constant in the local area covered by an atom. And again, this seems to lead to well-known predictions, namely the same ones GR makes around massive bodies.
The pink unicorn we are speaking of is really a GLET, a generalized LET which includes gravity, yet other than being a different interpretation of physics it is still entirely equivalent to GR. And if we want to measure GLET explicitly without any costly conversion from GR, we need to use entirely different measurement definitions. If we consider the GR interpretation as requiring experiments to take measurements in USD (to use the analogy from before), then GLET will require us to measure everything in EUR.
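A toy sketch of the circularity for the meter, with loudly hypothetical ingredients: an external "true" light speed in some unit that no SI experiment can access, and a clock assumed to tick unaffected. Because the SI ruler is itself calibrated with light, the external speed cancels and the measurement always returns the defined number:

```python
C_SI = 299_792_458  # m/s, exact by definition of the meter


def measured_light_speed(true_speed: float, flight_time_s: float = 1e-6) -> float:
    """Hypothetical experiment: time a light pulse and read its path off an SI ruler.

    true_speed is an illustrative speed in some external unit per second that no
    SI experiment has access to; it exists only to show that it drops out.
    """
    path_external = true_speed * flight_time_s   # path length in the external unit
    meter_external = true_speed / C_SI           # SI meter: distance light covers in 1/C_SI s
    path_in_meters = path_external / meter_external
    return path_in_meters / flight_time_s


for speed in (1.0, 0.5, 3.0e8):
    print(measured_light_speed(speed))  # 299792458 up to float rounding, every time
```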

-

You mean the Avogadro constant? Fair enough, that one is not related to anything real or physical, only to a convention.

-

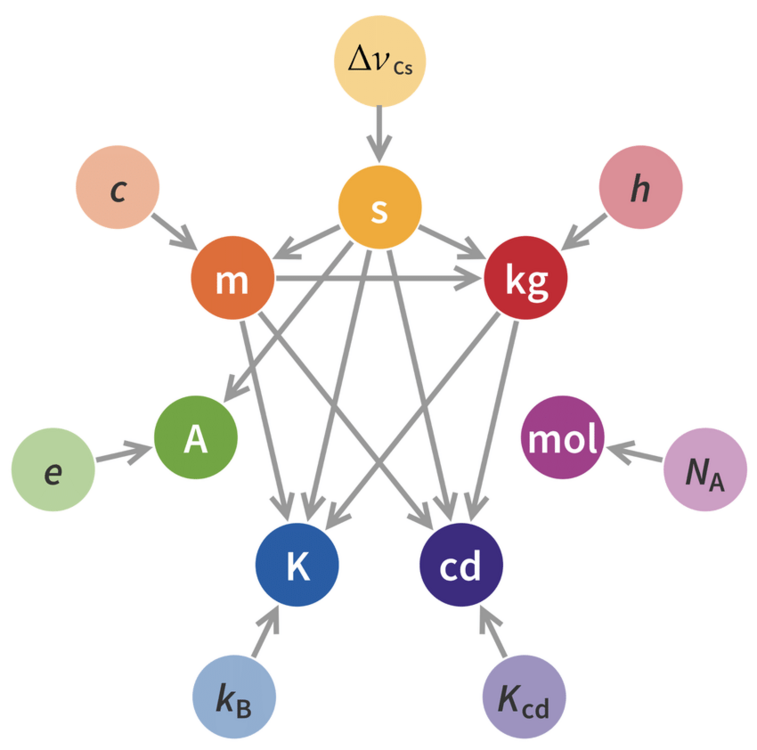

Then you gravely misunderstand the definitions of the 2019 revision. What the physical real-world reference for \( \Delta \nu_{\mathrm{Cs}} \) and the second is, we don't need to discuss. So let's look at the Coulomb, which is defined via e, which in turn corresponds to the charge of an electron. So you measure charge by how many electrons you would need to get the equivalent amount. For the Ampere you additionally need to include the second. The constants whose values the SI has fixed are not some arbitrary quantities. Each corresponds to some real physical thing, the references relative to which the SI measures everything.
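To illustrate, here is a small sketch listing the seven exact defining constants of the 2019 SI and using exact rational arithmetic to express a charge as a count of elementary charges and a duration as a count of caesium periods. The dictionary and helper names are illustrative, not taken from any library:

```python
from fractions import Fraction

# The seven defining constants of the SI since the 2019 revision (all exact).
SI_DEFINING_CONSTANTS = {
    "delta_nu_Cs": Fraction(9_192_631_770),   # Hz, Cs-133 hyperfine transition
    "c": Fraction(299_792_458),               # m/s, speed of light in vacuum
    "h": Fraction("6.62607015e-34"),          # J s, Planck constant
    "e": Fraction("1.602176634e-19"),         # C, elementary charge
    "k": Fraction("1.380649e-23"),            # J/K, Boltzmann constant
    "N_A": Fraction("6.02214076e23"),         # 1/mol, Avogadro constant
    "K_cd": Fraction(683),                    # lm/W, luminous efficacy at 540 THz
}


def elementary_charges(charge_c) -> Fraction:
    """How many elementary charges make up a charge given in coulombs."""
    return Fraction(charge_c) / SI_DEFINING_CONSTANTS["e"]


def caesium_periods(duration_s) -> Fraction:
    """How many Cs-133 hyperfine periods fit into a duration given in seconds."""
    return Fraction(duration_s) * SI_DEFINING_CONSTANTS["delta_nu_Cs"]


print(float(elementary_charges(1)))  # about 6.2415e18 elementary charges per coulomb
print(caesium_periods(1))            # 9192631770
```

One ampere then corresponds to about 6.24 × 10^18 elementary charges passing per second.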

-

We are talking about the same thing in this case. LET and SR are effectively different interpretations. The second provides time relative to a specific transition frequency of the caesium atom in the given frame, so it measures time as the number of periods this frequency completes in the same time. So when two time measurements took the same number of seconds, it does not mean they took the same time, but rather that the number of periods completed by the caesium emissions in those frames over the duration of the measurement is the same. Think of a simple analogy: the value of a Google share can be measured in USD. The USD here serves as an analogue of the second. But it can also be measured in EUR. Consider the situation where the stock went down over a week. Its price in EUR might nevertheless have risen. We can think of USD and EUR as just two units, but that is not entirely appropriate, because the EUR/USD exchange rate changes over time. These are two quite different measures and far more than just units. There are other currencies like the Jordanian dinar (JOD), which is pegged to the USD at a rate of 0.709; these numéraires behave like the joule and the kilocalorie. Note that you cannot really measure the price of an asset without a numéraire. You always have to choose one, and it doesn't have to be a currency. So when we have a statement like "the price of asset X is constant", it is actually ambiguous and only true for certain interpretations of price. Hmm, maybe now you can understand the trick behind rendering the speed of sound an invariant constant?
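The analogy can be made concrete with a few lines of arithmetic. All prices and exchange rates below are made up for illustration, except the 0.709 JOD/USD peg quoted above. The same share loses value in USD, gains value in EUR, and in JOD moves exactly like in USD because of the fixed rate:

```python
# Hypothetical numbers for illustration only.
price_usd = {"monday": 100.0, "friday": 98.0}    # share price in USD
usd_per_eur = {"monday": 1.10, "friday": 1.05}   # EUR/USD exchange rate
JOD_PER_USD = 0.709                              # fixed peg quoted in the post


def price_in_eur(day: str) -> float:
    return price_usd[day] / usd_per_eur[day]


def price_in_jod(day: str) -> float:
    return price_usd[day] * JOD_PER_USD


for day in ("monday", "friday"):
    print(day, price_usd[day], round(price_in_eur(day), 2), round(price_in_jod(day), 2))
# USD: falls; EUR: rises; JOD: falls by exactly the same ratio as USD.
```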

-

I am pretty sure the University of Pittsburgh does not count as a commercial site, nor could a link to a scientific paper highly relevant to the discussion be considered advertising. By that standard, do all interpretations of quantum mechanics discredit one another? No, it is discredited because it has a preferred frame, and in most situations there is no experimental way to find out which frame it is. Apparently you lack a bit of knowledge about this part of physics and its history, so it would make the discussion quite a bit easier if you read up on it. I understand that this isn't highly relevant for popular modern physics, though I do think understanding its history better would broaden your perspective on SR. LET differs from SR in that both time and length remain invariant, whereas in SR they differ by frame. And when it comes to the speed of light, it is frame dependent in the former but not in the latter. Those statements are, however, not in contradiction, because the definitions of time and space differ between the two interpretations, i.e. they are not the same quantities and hence neither are the speeds. In LET, Maxwell's equations transform under Galilean transformations, hence the equations look different in every frame. The frame dependence can be reduced to a physical field called the ether and its velocity. One might naively be led to believe that this difference between frames should be easy to distinguish experimentally. However, we have to consider that our clocks and rulers are built out of atoms, which are electromagnetic in origin. So we have to account for what happens to atoms moving relative to the aether as well. Michelson-Morley explains how we expect electromagnetic waves to be affected by this. We know, for example, of the Stark effect when an atom is exposed to an external electric field, so similarly we expect effects due to the aether wind.
And indeed, using some special coordinates we can reduce the problem to a known case, easily obtaining the answer that the atom has to contract in these instances and that its energy levels also shift. The factors are a combination of what we know as time dilation and length contraction from SR, so if one were to use these atoms as the basis for a clock or line them up to produce a ruler, they would be affected in the very same way as SR prescribes. So LET makes the very same predictions as SR but explains them by the clocks and rulers we use being distorted by a physical field and thus not actually showing the proper time. And indeed we cannot say how the "true" time ticks, because in reality all measurement is relative: a comparison between the measured object and a reference. So all we can measure is whether one object changes relative to another. If both change in the same manner, we are not able to see that, hence we can never say whether an object changes in an absolute way. But we don't need to know that to make all the predictions we are interested in.
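The Michelson-Morley step of this argument can be sketched numerically. This is a minimal sketch in the ether frame of LET, assuming equal arms of rest length L and an exaggerated ether wind for visibility. Without contraction the arm along the motion takes longer by a factor \( \gamma \); contracting it by \( 1/\gamma \) makes both round-trip times \( 2L\gamma/c \), and the remaining common factor \( \gamma \) is absorbed by the time dilation of a co-moving clock, which then reads 2L/c:

```python
import math


def mm_arm_times(L: float, c: float, v: float, contract_parallel: bool) -> tuple[float, float]:
    """Round-trip light times along the two arms (rest length L) of a Michelson
    interferometer moving at speed v through the ether, computed in the ether frame.

    With contract_parallel=True the arm along the motion is shortened by 1/gamma,
    as LET assumes for matter moving through the ether.
    """
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    L_par = L / gamma if contract_parallel else L
    t_parallel = L_par / (c - v) + L_par / (c + v)        # = 2 L_par gamma^2 / c
    t_perpendicular = 2.0 * L / math.sqrt(c * c - v * v)  # = 2 L gamma / c
    return t_parallel, t_perpendicular


# Illustrative numbers in units with c = 1 and an exaggerated ether wind v = 0.5.
print(mm_arm_times(1.0, 1.0, 0.5, contract_parallel=False))  # parallel arm is slower
print(mm_arm_times(1.0, 1.0, 0.5, contract_parallel=True))   # both equal 2 L gamma / c
```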

-

Have you read the article? Do you understand in what sense LET and SR are equivalent? You understand that in LET the speed of light in a frame equals the speed of light plus the ether velocity, hence depends on the frame due to the Galilean nature of the theory? Despite this, both interpretations remain equivalent, making identical predictions. The same holds in my case. This change of interpretation is fundamental for understanding the issue and the ideas in this thread, and the reinterpretation of time and length is the key to it. I seem to fail to explain it well enough in my own words. Yet the equivalence between LET and SR is well established, so maybe you should check out better sources than me for an explanation of why this works. I admit, though, it is a bit mind-boggling to get your head around it at first. Or please read the article; it answers all your questions in detail.

-

You should read the article I linked in my post before. Invariance has no absolute meaning, only one within a given model; that is, invariances depend on the definition of the time and length standards, as was already discussed in this thread. For LET this question was a difficult one, as the ether wind cannot be measured (at least not if the medium is perfectly uniform). For a classical medium, however, it is trivial to measure the flow velocity with respect to the observer's frame. The equation I wrote is the simple Lorentz transformation in one dimension. I expanded gamma and beta so as not to mistake them for their analogues; I'm not sure whether you would still call it a Lorentz transformation if c is exchanged for the speed of sound. I also used ^{-1} notation instead of \frac so that it would not stand out within the text. But when I edited the post, the equations broke: the ^ disappeared and all exponents fell down. I could not repair them.

-

Not sure if I get you right, because a sound wave always moves with the speed of sound plus the velocity of the local medium. Modeling a plane where the medium has two distinct regions, i.e. inside and outside, is significantly more complicated. It is indeed very interesting for the analogy it offers, but quite complicated as a start to exploring this framework. If you are just talking about observing the wave from a frame with a relative velocity v to the rest frame of the uniform medium, observe that we use a special time standard: specifically, in the moving frame we use a clock whose time is \( t' = (1 - v^2 c^{-2})^{-1/2}(t + v c^{-2} x) \) relative to a clock in the rest frame of the medium, where c is the speed of sound. We measure distance by how far an audio signal gets in one unit of t', hence the observer's frame can use the coordinate \( x' = (1 - v^2 c^{-2})^{-1/2}(x + v t) \) to easily obtain distances in the needed standard of length. Redefining time and length requires that speed is also redefined correspondingly. This tricky redefinition causes the speed of sound \( c = \Delta x' \, \Delta t'^{-1} \) to become constant in every frame. But it is just that: a redefinition. The latter is nothing new: it was basically the discovery made when developing Lorentz Ether Theory, which uses almost exactly the same framework, except for a slightly more complicated wave equation. Furthermore, Poincaré's corrected version of LET is equivalent to SR, producing identical predictions. For reference: https://philsci-archive.pitt.edu/5339/1/leszabo-lorein-preprint.pdf EDIT: ... so formulas, particularly exponents, break on editing here?!? Any way to prevent that?
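A quick numerical check of that claim, as a minimal sketch: it assumes the one-dimensional transformation \( t' = \gamma(t + v x/c^2) \), \( x' = \gamma(x + v t) \) with (x, t) taken in the rest frame of the medium, and c = 343 m/s and v = 50 m/s as illustrative values. Pulses sent either way come out with \( \Delta x'/\Delta t' = \pm c \), while a point at rest in the medium moves at v in the primed coordinates:

```python
import math


def to_primed(x: float, t: float, v: float, c: float) -> tuple[float, float]:
    """Lorentz-like transformation with the speed of sound c in place of the
    speed of light: x' = gamma (x + v t), t' = gamma (t + v x / c^2).
    (x, t) are measured in the rest frame of the medium."""
    gamma = 1.0 / math.sqrt(1.0 - v * v / (c * c))
    return gamma * (x + v * t), gamma * (t + v * x / (c * c))


def primed_speed(x: float, t: float, v: float, c: float) -> float:
    """Delta x' / Delta t' for a signal that travelled from the origin to (x, t)."""
    xp, tp = to_primed(x, t, v, c)
    return xp / tp


c, v = 343.0, 50.0  # illustrative speed of sound and relative velocity in m/s
print(primed_speed(c * 2.0, 2.0, v, c))   # forward pulse: 343 (up to rounding)
print(primed_speed(-c * 2.0, 2.0, v, c))  # backward pulse: -343 (up to rounding)
print(primed_speed(0.0, 2.0, v, c))       # point at rest in the medium: 50 (up to rounding)
```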

-

Functions of the form \( e^{ikx} \), i.e. plane waves \( e^{i(kx - \omega t)} \), keep their form under Lorentz-like coordinate transformations. This is a trivial mathematical observation that applies to any simple wave and its equation. So for an ideal uniform acoustic medium you can apply these transformations and notice that the form of the equation remains invariant. This hints at what definition of a time standard we have to use to achieve this in covariant form as well. In general we can use an analogue of the construction of a geodesic clock from GR, but utilizing acoustic signals instead of light. For the standard of length it's pretty obvious: we just define length by the distance an acoustic wave travels in a fixed time; this is why the speed of sound must become a constant in any circumstance. For either standard we can construct devices that measure it in reality. Now, we have discussed extensively what happens to the laws of physics when we change the standards of time and length. Apply it to this case.
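As a minimal sketch of that observation, assume a plane wave \( e^{i(kx - \omega t)} \) with \( \omega = \pm ck \) in the rest frame of a uniform, non-dispersive medium, and the Lorentz-like transformation with the speed of sound c used earlier in this thread (values illustrative). Wavenumber and frequency change, which is just the Doppler effect, but \( \omega'/k' \) stays \( \pm c \), so every plane-wave solution of the wave equation is again a solution with the same speed c:

```python
import math


def transform_plane_wave(k: float, omega: float, v: float, c: float) -> tuple[float, float]:
    """Wavenumber and angular frequency of the plane wave exp(i(k x - omega t))
    expressed in the primed coordinates x' = gamma (x + v t), t' = gamma (t + v x / c^2).

    Obtained by inserting the inverse map x = gamma (x' - v t'),
    t = gamma (t' - v x' / c^2) into the phase k x - omega t.
    """
    gamma = 1.0 / math.sqrt(1.0 - v * v / (c * c))
    k_prime = gamma * (k + omega * v / (c * c))
    omega_prime = gamma * (omega + k * v)
    return k_prime, omega_prime


c, v, k = 343.0, 50.0, 2.0  # illustrative values
for omega in (c * k, -c * k):  # waves running in the +x and -x directions
    kp, wp = transform_plane_wave(k, omega, v, c)
    print(kp, wp, wp / kp)     # k and omega change (Doppler), omega'/k' stays +c or -c
```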

-

Of course. What I meant is that the definition of the standard for clocks and rulers is itself a significant degree of freedom: it does not affect any real physics itself, yet it massively reshapes the resulting model. I understood you. I am just not sure I would strictly say that the form of, e.g., the Navier-Stokes equations (in their general form) depends on location or on the observer's frame. There is also a general form of Maxwell's equations under Galilean transformations. What is nasty, though, is the transformation between frames. But I know what you mean, and you are right that in practice, when describing e.g. physics in a medium, there is always a frame in which the medium is (at least locally) at rest, which makes some terms vanish and simplifies the equations of interest. Not sure this is the case. The corrections in question may not be experimentally determinable by local means only, but by involving global information this should be possible without any knowledge of GR itself. For example, comparing the external clock signal a radio clock receives against a locally running clock of the same type allows one to extract data about the gravitational environment the signal travelled through and to use that for corrections. Therefore we should be able to construct such u-standard clocks in reality even without knowledge of GR. But since we do not want to discover the laws of physics anew experimentally and empirically, we can deduce the new laws from GR, if we can express the correction in terms of GR. Once we know the equations of motion of all fields and the geometry in the new standard, we technically won't need any knowledge of GR any longer. The enthusiasm is indeed mostly on a theoretical level, because if the standard for time and length allows us to rewrite the laws of physics with quite some degree of freedom, we could ask whether we can postulate certain laws to have a certain form and then find a standard that makes these laws comply with reality.
Applying this idea to the Maxwell equations renders them infallible by construction, and the nature of c receives a different interpretation in this concept. Consequently its perfect constancy is a logical consequence independent of physical reality. But this idea seems to work for a broad set of wave equations without frequency dispersion. So, for example, if we take an ideal medium and look at the acoustic wave equation, we can find a time standard that allows us to treat it within the same framework as SR, rendering the speed of sound a perfect constant that needs to be set, with perfect Lorentz invariance, but around the set speed of sound. Even if we include regions with a non-constant refractive index, in the new standard such local anomalies vanish from the wave equation in covariant form and instead move into the geometry, i.e. are handled by the connection.
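A one-dimensional sketch of what moving the anomaly "into the geometry" means for signal propagation, under loudly simplifying assumptions: one spatial dimension, a hypothetical refractive index profile n(x) with local signal speed \( c_0/n(x) \), and a new length standard that counts distance by the signal's travel time, \( X = \int n\,dx \). It only addresses travel times, not the full wave equation. In ordinary lengths the signal is slower across the anomaly; in the new standard its speed is \( c_0 \) everywhere, and the anomaly survives only in the relation between X and x:

```python
import math


def integrate(fn, a: float, b: float, steps: int = 10_000) -> float:
    """Trapezoidal rule."""
    h = (b - a) / steps
    total = 0.5 * (fn(a) + fn(b)) + sum(fn(a + i * h) for i in range(1, steps))
    return total * h


def refractive_index(x: float) -> float:
    # Hypothetical local anomaly: the index rises to 1.3 around x = 0.
    return 1.0 + 0.3 * math.exp(-x * x)


C0 = 343.0          # illustrative signal speed where n = 1
x0, x1 = -5.0, 5.0  # illustrative path

travel_time = integrate(lambda x: refractive_index(x) / C0, x0, x1)  # local speed C0 / n(x)
ordinary_length = x1 - x0
acoustic_length = integrate(refractive_index, x0, x1)  # new standard: X = integral of n dx

print(ordinary_length / travel_time)  # below C0: the signal is slowed in the anomaly
print(acoustic_length / travel_time)  # C0 (up to rounding) in the new standard
```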

-

I am very well aware of that. The backtracing along worldlines is, however, only relevant when translating results from the u-standard model to t-standard experimental data. That is indeed expensive. Yet when we do, for example, numerical simulations of galaxies moving over long time spans, we can stay within one standard without the need for switching. Events like collisions will not depend on whichever time standard and physical laws we use to calculate them, as long as they are consistent. This in particular would depend a bit on which specific approach makes the most sense for quantizing the fields. An isometry to either the Minkowski or the Euclidean metric would certainly make things simpler. An approach that changes the geometry merely moves real physical information between geometric objects and fields of similar nature. This is why it is guaranteed to produce an analogue of the Euler-Lagrange equations. But indeed all geometry-dependent quantities are redefined by analogues, and this of course includes energy and its conservation. I am happy that we are finally starting to understand each other. I find it very interesting, as this shows how much relativity there is in any model of reality, simply based on the standards and conventions required to map it into a mathematical description. This mapping is highly non-unique, non-trivial and very influential in terms of the model it produces. I am aware how tedious this may look at first, yet on the other hand this line of thought also adds many more degrees of freedom to model the very same reality. It also adds quite a different perspective on physics, as physics is a lot more relative than one would think. I haven't yet worked out specific corrections. The Schwarzschild example I used was merely to demonstrate that I really want to change the definition of the time standard and not just the coordinates.
For the concrete corrections, I was considering something along the lines of taking a coordinate time concept like Barycentric Coordinate Time (TCB) and uplifting it to serve as a time standard, or alternatively a clock concept that bases the corrections on radio clocks receiving a common, specially placed signal source; both ideas are somewhat related. The latter would allow us to determine the corrections experimentally, while the former gives a recipe for doing it from the model. Either one should allow us to find a relation between the regular GR geometry and the corrected one for any scenario, hence it would allow us to deduce field equations / laws for all new physical fields from the Einstein field equations. I might note that the way TCB seems to be used in practice for calculations in the solar system is actually not that far from what I intend to do. And there are in fact practical reasons for doing that. It just doesn't go all the way. Whether the laws of physics differ depending on location is a matter of interpretation. The state of physical fields and the geometry differ by location, but the overall laws won't if you keep your definition of what a force is clean. Even if you were to add some curvature purely arbitrarily to a region that is normally flat, in the resulting model it would look like there is some weird dark matter sitting there and messing up physical fields and geometry; yet apart from the concrete distribution of the dark matter in your model, the new laws of physics can retain the same form at all locations. Everything just needs some special interactions with the dark matter when passing through. Finally, there is also another case study for this idea, and it goes the other way around: where physical laws appear complicated and location-dependent (in the sense of extra fields appearing), yet could be reduced in apparent complexity if a different time standard were chosen.
Of course there are good reasons not to, but next time it might be of interest to discuss these situations to understand under which circumstances this is advantageous.
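The translation step between the two standards can be sketched as follows, assuming (hypothetically) that the u-standard differs from SI time only by a local correction factor f(x), so that \( du = f(x)\,dt \) along a clock's worldline. Converting a t-standard interval into a u-standard interval then means integrating f along the worldline, which is the costly backtracing mentioned above. The form of f is purely illustrative, not a worked-out correction:

```python
def integrate_along_worldline(f, worldline, t0: float, t1: float, steps: int = 10_000) -> float:
    """Reading accumulated by a hypothetical u-standard clock between SI readings t0 and t1.

    f(x):         hypothetical local correction factor, du = f(x) dt
    worldline(t): position of the clock as a function of its SI reading t
    Uses the trapezoidal rule on du/dt = f(worldline(t)).
    """
    h = (t1 - t0) / steps
    total = 0.5 * (f(worldline(t0)) + f(worldline(t1)))
    total += sum(f(worldline(t0 + i * h)) for i in range(1, steps))
    return total * h


# Illustrative: correction factor growing linearly with position, clock moving as x = t.
u = integrate_along_worldline(lambda x: 1.0 + 0.1 * x, lambda t: t, 0.0, 2.0)
print(u)  # 2.2 up to rounding: the u-clock gains 0.2 units over 2 SI units
```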

-

Naively I would think you should be able to, since you know how \( a_{\mu \nu} \) changes in length in any direction. I would multiply it by some scalar function f and then try to find a solution such that \( \nabla_\lambda (f a_{\mu \nu}) = 0 \). Applying the product rule, you get the covariant derivative of a as a term, which is known. So at least in the case of one dimension, this is a differential equation that can be solved. This gives you an adjustment \( f a_{\mu\nu} \) that preserves length along \( \lambda \), which reconstructs a part of the metric. Anyhow, I am dead tired and probably got something wrong. Stupid of me to check the forums just before I was going to sleep.
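Spelled out, the one-dimensional case of this idea reduces to a linear first-order ODE for f. This is a sketch under the assumption that a and its covariant derivative b can be treated as single known scalar functions along the curve, which is what "one dimension" buys us here:

```latex
% f is a scalar, so the product rule gives
\nabla_\lambda \left( f\, a_{\mu\nu} \right)
  = (\partial_\lambda f)\, a_{\mu\nu} + f\, \nabla_\lambda a_{\mu\nu} = 0 .

% One dimension, along a curve with parameter s: write a(s) for the single
% component and b(s) for its known covariant derivative. Then
f'(s)\, a(s) + f(s)\, b(s) = 0
\;\Longrightarrow\;
f(s) = f(s_0) \exp\!\left( -\int_{s_0}^{s} \frac{b(\sigma)}{a(\sigma)}\, d\sigma \right),
% valid wherever a(s) \neq 0.
```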

-

See, this is unfair. I demand access to write LaTeX formulas... no, seriously, how do you do that around here? But I get what you are highlighting. In u-standards, muon decay depends on new physical laws and fields that appear in the new standard, hence the naive approach (utilizing classical/relativistic physics only) would indeed require integrating the now location-dependent decay rate along the worldline to get an answer. On the other hand, if u is not some arbitrary time standard but is indeed obtained from a pure gravity correction, then this question becomes quite a bit more interesting. As far as I am aware, we do not have much experimental data on whether or how particle decay channels change in strong gravitational environments, nor do we have a theory to make such predictions. But we do know that particle decays change in, say, strong electric fields (think of photons converting into e+e- pairs). There is no obvious reason (for me) to exclude such a possibility for gravity. So, in the u time standard, the corrections from gravitational fields should result in some kind of physical 2-tensor field (at least) representing gravity, and it should have interactions with both the EM field and the leptonic fields; hence after quantization (which should be straightforward in the flat spacetime u produces), we will get a very different Lagrangian. Around weak gravitational fields this new u-standard G+QED will then have to explain why (u-)gravitons slow down muon decays. But around strong gravitational fields, I won't dare make any predictions. Maybe this makes my approach appear a little more reasonable? Well, in one sense there can be only one LC connection at a time, as it is unique. But as it is bound to the metric, and we have two distinct metrics, the meaning of LC becomes ambiguous. So in order to keep it clear, we need to say either g-LC or g'-LC connection. Note that for the g-LC connection \( \nabla \) we have \( \nabla g = 0 \neq \nabla g' \).
Yet \( \nabla' g' = 0 \) using the g'-LC connection \( \nabla' \).
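To make the distinction concrete, here is the standard formula for the simplest case, assuming (for illustration only) that the two metrics are conformally related. The two connections then differ by terms built from the gradient of the conformal factor, and the g-LC connection fails to annihilate g' unless that factor is constant:

```latex
% Assumption for illustration: g'_{\mu\nu} = e^{2\varphi} g_{\mu\nu}, with \varphi a scalar.
% Levi-Civita connection of g' in terms of that of g:
\Gamma'^{\lambda}{}_{\mu\nu} = \Gamma^{\lambda}{}_{\mu\nu}
  + \delta^{\lambda}_{\mu}\, \partial_\nu \varphi
  + \delta^{\lambda}_{\nu}\, \partial_\mu \varphi
  - g_{\mu\nu}\, g^{\lambda\sigma} \partial_\sigma \varphi

% The g-LC connection is compatible with g but not with g':
\nabla_\lambda g_{\mu\nu} = 0, \qquad
\nabla_\lambda g'_{\mu\nu} = 2\, (\partial_\lambda \varphi)\, g'_{\mu\nu} \neq 0
  \quad \text{unless } \partial_\lambda \varphi = 0,
% while by construction \nabla'_\lambda g'_{\mu\nu} = 0.
```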

-

Sorry for the miscommunication and the wrong wording; I didn't know how else to describe it. My main focus here is highlighting what this interdependence means and what it may lead to. The logical consequences of interdependencies are rarely trivial, in particular what they mean for the isotropy of light and the constancy of c.

-

One addendum, though: in your example a(t) is the acceleration as defined in the previous standard. But if we think of proper acceleration and proper velocity, those concepts are defined in terms of proper time. Now, as the standard definition of time changes, this is directly reflected in the proper time \( \tau \), which is exchanged for \( \tau' \) in the new standard. This in turn defines a new proper velocity in the new standard, and the same goes for the proper acceleration. So if the new standard dictates a change from \( \tau \) to \( \tau' \), it also requires switching from w to w' for the proper velocity and from a to a' for the proper acceleration. And indeed \( a(t) \neq a'(t) \). Only \( s(t) = s'(t) \) is unaffected. Note that due to the change in geometry we attribute the same physical information differently between physical quantities and the geometry. So we have \( a'(\tau') = \frac{d^2}{d\tau'^2} s'(\tau') \neq a(\tau') \). Now, where can I find out how to use LaTeX here?!?
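A numerical sketch of the last relation, with every ingredient hypothetical: a body with constant acceleration G in the old standard, \( s(\tau) = G\tau^2/2 \), and a new time standard \( \tau' = \tau + K\tau^2 \). The distance is the same physical quantity in both descriptions, but its second derivative with respect to \( \tau' \) is not the old acceleration; for this example the chain rule gives \( a' = G/(1 + 2K\tau)^3 \):

```python
import math

G = 2.0  # hypothetical constant acceleration in the old standard (tau)
K = 0.1  # hypothetical strength of the change of time standard


def s_of_tau(tau: float) -> float:
    """Distance along the path as a function of the old proper time."""
    return 0.5 * G * tau * tau


def tau_prime_of_tau(tau: float) -> float:
    """Hypothetical new standard: tau' = tau + K tau^2."""
    return tau + K * tau * tau


def tau_of_tau_prime(tp: float) -> float:
    """Inverse of the map above (valid for tau >= 0)."""
    return (-1.0 + math.sqrt(1.0 + 4.0 * K * tp)) / (2.0 * K)


def s_prime(tp: float) -> float:
    """Same physical distance, expressed as a function of tau'."""
    return s_of_tau(tau_of_tau_prime(tp))


def second_derivative(fn, x: float, h: float = 1e-4) -> float:
    """Central finite difference for the second derivative."""
    return (fn(x + h) - 2.0 * fn(x) + fn(x - h)) / (h * h)


tau = 1.0
a_old = second_derivative(s_of_tau, tau)                   # about G
a_new = second_derivative(s_prime, tau_prime_of_tau(tau))  # acceleration in the new standard
a_new_exact = G / (1.0 + 2.0 * K * tau) ** 3               # analytic value for this example
print(a_old, a_new, a_new_exact)
```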

-

Yes, this is exactly what I am doing. So far we agree on what it means for the model. Now to the experiment: as @Markus Hanke stated, changing the standard definition of time makes the laws of physics take a different form, so it is more than a mere change of units. On the other hand, it does not change the outcome of experiments directly. In that situation the original experiment cannot be directly compared with the model, at least not without expensive transformations (as you correctly stated before). So we look at how we could adjust our experimental setup so that its measurements conform to the new standard definition of time, making them comparable again. In practice this means exchanging all measuring devices for new ones that adopt the new standard, mainly clocks. Let's go through this process slowly, because it is fairly important to become aware of what it does. In a laboratory we can measure velocities by timing how long a test body needs to get from one point to another. A ruler gives us the distance between the points, a clock measures the time, and the velocity is the quotient of both measurements. Now, if we change to the new standard, where the clocks in the laboratory have a correction factor f(x) (which we assume is nearly constant across the laboratory for this example), we obtain a numerical value for the velocity that differs from the original experiment by a factor of 1/f(x). Similarly, we can measure acceleration as a change of velocity over time, and after adopting the new standard we obtain a result that differs by a factor of 1/f(x)^2. So if we were to measure the Coulomb force that a charged plate capacitor exerts on charged test bodies, we would observe a slightly modified Coulomb law, one that has an additional factor 1/f(x)^2 in it.
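The bookkeeping in this paragraph fits in a few lines. This minimal sketch assumes, as in the text, a correction factor f that is constant across each laboratory, with every interval on the new clocks reading f times the old one; the values of f for two laboratories and the test body are hypothetical. The same physical capacitor then yields inferred Coulomb forces whose ratio between the laboratories is \( (f_y/f_x)^2 \):

```python
def readings_in_new_standard(v_old: float, a_old: float, f: float) -> tuple[float, float]:
    """Velocity and acceleration a lab reports after switching to clocks of the new
    standard, assuming every time interval now reads f times its old value
    (f constant across the lab)."""
    return v_old / f, a_old / f ** 2


def inferred_force(mass_kg: float, a_old: float, f: float) -> float:
    """Force inferred from F = m a, with the acceleration read off the new clocks."""
    _, a_new = readings_in_new_standard(0.0, a_old, f)
    return mass_kg * a_new


# Hypothetical setup: identical capacitor and test body in two laboratories.
mass, a_old = 0.01, 3.0   # kg, m/s^2 (as measured with the old clocks)
f_x, f_y = 1.02, 0.98     # hypothetical correction factors in the two labs
F_x, F_y = inferred_force(mass, a_old, f_x), inferred_force(mass, a_old, f_y)
print(F_x, F_y, F_x / F_y)  # the ratio equals (f_y / f_x)**2
```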
Performing the same experiment in a different laboratory where the correction factor has another value f(y), we observe that the Coulomb force is affected by the physical field we correct for via f. However, as you stated yourself, due to the change of the standard definition of time the laws of physics take on a different form, and so this experimental outcome will be consistent with that. If our experiments use the very same standards as our model assumes, we will not be able to create any testable violation between reality and theory. This is what is important for me to highlight. I am sorry to correct you here, but that statement is false. On a smooth manifold you can have a connection, but without a metric you cannot classify it as LC, only as torsion-free. There are many torsion-free connections on a smooth manifold, and they differ in what they do to different metrics. If you wish to contest me on that, then please provide some literature to back up your statement. I think you mistake a smooth manifold for a Riemannian manifold, because then your statement would align with the fundamental theorem of Riemannian geometry https://en.wikipedia.org/wiki/Levi-Civita_connection#Fundamental_theorem_of_(pseudo-)Riemannian_geometry. Two metrics that are not isometric to each other generally produce different LC connections.

-

The meaning of constancy of the speed of light

Argh, no, that is not what I was saying at all. You misunderstand me. I am interested in exploring the definitions of the SI second and meter and how they affect the measurements made in experiments, the laws of physics those experiments perceive when using those standards, and finally how that shapes the entire physical model we use. Also, Einstein uses a lot of clocks in his gedankenexperiments, yet this object is never actually defined or meaningfully explored. Implicitly, all his considerations assume there is only one concept of time, which all real-world clocks have to measure. But there is more than one concept of time, and of course we can build other real-world devices that measure them (though maybe with less precision). If we are not willing to even consider hypothetically what would happen if we used those instead for all our current real-world measurements, we become blind to how much the SI system and its standards of measurement determine. We won't be able to see that the invariance and form of Maxwell's equations, as seen by experiments and theory, is a logical consequence of the SI standards and the postulates underlying them. And because they determine both measurement and model in the same way, there is no possibility to create a violation. They cannot be proven wrong, as they are a convention. And we get stuck using only a single interpretation of spacetime, which just isn't practical for a lot of problems, mainly in theoretical physics. Issues like the failure to establish quantum gravity, or the huge amounts of dark matter we need to postulate to make GR conform with observations, may be related to this. I am not sure whether you consider the latter two practical problems, though. Let me be clear: if we just discuss calculating satellite orbits around Earth, all the considerations discussed here are entirely useless.
Only when we look at problems like galaxy rotation anomalies, or the issue of describing quantum interactions in a black hole, do things start to look different. It is not like we can put a caesium clock inside a black hole to get 'real-world measurements' from it (that's like talking about invisible pink unicorns). Generally, I am not convinced the SI standards are well defined for that scenario. Maybe you can only describe the situation in there by extrapolating the definition of a hypothetical clock device that corrects for all gravity effects.

Ah, this is crucial information, and now I understand your prior statements. But you are using the diffeomorphism very differently than I do. A diffeomorphism can pull back (https://en.wikipedia.org/wiki/Pullback_(differential_geometry)) all objects defined on a manifold, be it points, vector fields, metrics or even a connection. If you do that, the target manifold effectively becomes the original one, as the diffeomorphism is used almost like a map itself, so this is almost a mere change of coordinates. But if we have two metrics, this doesn't help us at all, because for such a diffeomorphism phi, the pullback metric \( \phi^* g \) is not g', and the same holds for the connection. Therefore, in my case it makes no sense to apply the diffeomorphism to any features linked to the geometry. We can only apply it to the points of the manifold, but not even to the vector fields. And this is why: imagine you have clocks that correct SI atomic clocks for some effects, and you use those in your measurements. One way to measure a force is to let it act on test bodies and measure their acceleration, which by its units is space / time^2 and therefore depends directly on the spacetime geometry. Any correction made to the time measurement standard will therefore change the outcome of the measurement, as the acceleration measured using the corrected clocks is different, and hence so is the force itself.
In SI we can see the dependence of all quantities on the spacetime geometry right from their units. So no, a change of metric does not keep even vector fields invariant, since due to the change of perspective those are related yet actually different objects. So we need to look a bit more closely at what the toolset of differential geometry provides to handle this situation. First, let's look at the definition of a Riemannian manifold (M,g): https://en.wikipedia.org/wiki/Riemannian_manifold#Definition. It is always a pair of two things, and one of them is the defining metric. So if we have one smooth manifold M but two metrics on it, we formally have two Riemannian manifolds (M,g) and (M,g'). When it comes to the connection, specifically Levi-Civita, we know that it is only defined on Riemannian manifolds, as by definition it is explicitly defined via the metric: https://en.wikipedia.org/wiki/Levi-Civita_connection#Formal_definition. So: two metrics, two Riemannian manifolds, two LC connections, only one smooth manifold M. When we use diffeomorphisms to transition between Riemannian manifolds, we are no longer allowed to pull back any objects that depend explicitly on the Riemannian geometry. This situation is a part of differential geometry that I haven't seen used in physics, hence this way of thinking won't be familiar to physicists.

Only distantly related, as it discusses using an alternative geometry and maybe flatness, but everything else is based on different concepts, hence resulting in quite a different setup.
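For reference, here are the two textbook formulas this argument rests on, in the usual index notation (standard definitions, nothing specific to this thread): the pullback of a metric by a diffeomorphism \( \phi: M \to M \), and the Levi-Civita connection that each metric determines on the same smooth manifold M.

```latex
(\phi^* g)_p(X, Y) = g_{\phi(p)}\big(d\phi_p X,\; d\phi_p Y\big), \qquad X, Y \in T_pM

\Gamma^i_{kl}[g] = \tfrac{1}{2} g^{im}\left(\partial_l g_{mk} + \partial_k g_{ml} - \partial_m g_{kl}\right),
\qquad
\Gamma^i_{kl}[g'] = \tfrac{1}{2} g'^{im}\left(\partial_l g'_{mk} + \partial_k g'_{ml} - \partial_m g'_{kl}\right)
```

If no diffeomorphism satisfies \( \phi^* g = g' \), no pullback turns (M,g) into (M,g'), and the two Christoffel sets are in general different. Their difference \( \Gamma[g] - \Gamma[g'] \) is a (1,2) tensor field on M.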

-

The meaning of constancy of the speed of light

You misread my statement, or I do not follow you here. A piece of a sphere is diffeomorphic to a piece of flat R^2 (calling the former a sphere and the latter flat implies that I am talking about more than the mere differentiable manifold). The coordinate maps themselves provide such a diffeomorphism (at least for a piece of the sphere, where there is no problem with coordinate singularities at the poles). With the regular connection on the sphere you get a parallel transport like this: https://en.wikipedia.org/wiki/Parallel_transport#/media/File:Parallel_Transport.svg. On the flat R^2 you will not find a path where such a twisting occurs, because it comes with a different connection. But of course you can pull back the connection from the sphere piece via the coordinate map to get a connection on the flat space, which will also twist your parallels, and basically reinterpret the flat space as curved.

Think of a balloon painted as a globe. Also paint the coordinate grid on it. Usually a balloon is spherically shaped. But we can cut a piece out of it, stretch it into a flat surface and pin it on the wall. All points of interest like Greenwich, Cape Town and Katmandu will remain at their locations as described via coordinates. But let's try a parallel transport from Greenwich to Cape Town to Katmandu and back to Greenwich, in the original state and after the flattening. Apparently it now uses a different connection. Yet the piece of the balloon is the same smooth manifold regardless of how it is shaped: it remains the same set of points, linked with each other in the same way. Diffeomorphisms, or more generally homeomorphisms, are what we use in differential geometry to reshape a geometry without changing its topology. You know, like a coffee mug is homeomorphic to a torus (https://en.wikipedia.org/wiki/Homeomorphism#/media/File:Mug_and_Torus_morph.gif).
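The globe example can be checked numerically. This is a sketch under my own assumptions: the unit sphere in the coordinates (θ, φ), a loop along the circle of colatitude θ0 (chosen instead of the Greenwich, Cape Town, Katmandu triangle because its transport equations are simple), and, for the "flattened" piece, the connection whose Christoffel symbols vanish in these same coordinates. Only the connection changes; the points and the coordinate grid stay the same. The function name is hypothetical.

```python
import math


def transport_around_latitude(theta0, curved=True, steps=20000):
    """Parallel-transport the vector e_theta once around the loop theta = theta0,
    phi: 0 -> 2*pi, and return its components in an orthonormal frame.

    curved=True : Levi-Civita connection of the unit sphere, whose nonzero
                  Christoffel symbols are Gamma^theta_{phi phi} = -sin*cos and
                  Gamma^phi_{theta phi} = Gamma^phi_{phi theta} = cot(theta).
    curved=False: the 'flattened' chart, all Christoffel symbols zero in (theta, phi).
    """
    s, c = math.sin(theta0), math.cos(theta0)

    def rhs(v):
        v_th, v_ph = v
        if not curved:
            return (0.0, 0.0)
        # dV^i/dphi = -Gamma^i_{phi k} V^k along the curve (theta0, phi)
        return (s * c * v_ph, -(c / s) * v_th)

    v = (1.0, 0.0)
    h = 2 * math.pi / steps
    for _ in range(steps):  # classical fourth-order Runge-Kutta step
        k1 = rhs(v)
        k2 = rhs((v[0] + 0.5 * h * k1[0], v[1] + 0.5 * h * k1[1]))
        k3 = rhs((v[0] + 0.5 * h * k2[0], v[1] + 0.5 * h * k2[1]))
        k4 = rhs((v[0] + h * k3[0], v[1] + h * k3[1]))
        v = (v[0] + h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6,
             v[1] + h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6)
    # orthonormal frame: (e_theta, e_phi / sin(theta0)) on the sphere,
    # (e_theta, e_phi) for the flat metric d theta^2 + d phi^2
    return (v[0], s * v[1]) if curved else v


print(transport_around_latitude(math.pi / 3))                # vector comes back reversed
print(transport_around_latitude(math.pi / 3, curved=False))  # unchanged
```

For θ0 = π/3 the curved case returns approximately (-1, 0), consistent with the known holonomy angle 2π(1 − cos θ0) = π, while the flat case returns (1, 0): same points, same coordinates, different connection.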
The diffeomorphism additionally makes sure the reshaping does not produce any kinks or folds that would cause issues for differentials. But a diffeomorphism does not preserve the geometry; for that you need something stronger (https://en.wikipedia.org/wiki/Isometry#Manifold). In my case I have only one set of coordinates and never change it, because coordinates, and equations expressed purely in terms of them, are among the few things that remain unaffected by a reinterpretation of the geometry. Now imagine the balloon is not made of simple fabric, but its surface is a futuristic display showing live physics interactions happening on the globe (think of a live meteorological satellite stream). The physics displayed on that surface has no idea what kind of stretching and reshaping we did to it and acts entirely independently of that. All the reshaping may, however, make the same physical interactions playing out on the surface appear to be subject to different laws of physics, with things moving faster in the stretched regions and slower in the contracted ones. Also, the coordinate grid originally painted onto the surface does not notice any reshaping we do; it only looks different to someone outside that world looking at the manifold.

Yes, you are absolutely right about this. When we stretch the spacetime geometry onto the flat one, gravity must become at least similar to the electromagnetic field, which is a rank-2 tensor. So unlike Newton's gravity, there must be at least something like a gravitational analogue of the magnetic field when represented in a flat spacetime. Probably even more than that.

We have exchanged the clocks and rulers and use those for our real-world measurements instead. Think of it as if you were forbidden from even using SI-conforming clocks and rulers and were forced to rely solely on the new ones for all experiments. I want you to understand what this situation means mathematically (not practically).
In that case I don't have to do any additional mappings, because, as you said, the correction we did coupled with the mapping back (which is its inverse) yields f ∘ f^-1 = id. No additional work is needed if I simply forget the old world entirely, both in terms of the model and of the measurements in the real world.

-

The meaning of constancy of the speed of light

But what does "behave naturally as clocks" even mean? I could just as naturally interpret the situation another way. Let's say I don't know much about modern physics and naively start my exploration of the world with radio clocks instead. Those don't need any corrections, and in a sense they tick at the same rate regardless of whether they are in deep space or close to a black hole. If I compare their time with that of SI atomic clocks, I notice that the latter run inconsistently, and I can figure out a physical reason to attribute that effect to: gravity. Furthermore, I can make the atomic clocks consistent with my radio clocks if I correct them for that effect. Now if I have two clock types, one that gives me different times in different regions and another that shows consistent results, which clock seems more like the natural choice for timekeeping? If I were to run spaceports around the universe, which kind of clocks would I announce my flight schedule with? Just to throw that in: it is reminiscent of how connecting towns by rail required making clocks in different towns run consistently, i.e. introducing railway time. It really depends on your perspective which clocks you declare to behave naturally.

But yes, radio clocks have a significant drawback: they always have a preferred frame, namely that of their signal source. In empty space there is no natural choice of a preferred frame, and as you say, there is some arbitrariness in eta and h. I am not sure whether the presence of gravity may induce some kind of preference, though. If you think of Poincaré's corrected LET, but now expanded with gravity deduced from GR (which is more or less what this approach leads to), it would require adding a density and a current for the aether, along with a flow equation. The choice of h would affect its form, since it would mostly describe the aether, so you would look for what makes its time-evolution equation most natural.

Wait, we can use LaTeX here!?!
How?
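To put a number on "correcting atomic clocks for gravity", here is a sketch assuming a static, non-rotating Schwarzschild field and taking the coordinate time t as the common reference the idealized radio clocks would share (my idealization; real radio time signals are not defined this way). A static atomic clock at radius r ticks \( d\tau = \sqrt{1 - r_s/r}\, dt \), so the factor that makes it agree with t is \( 1/\sqrt{1 - r_s/r} \). The function names are hypothetical.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0   # speed of light, m/s (exact by SI definition)


def schwarzschild_radius(mass_kg):
    return 2 * G * mass_kg / C**2


def clock_correction_factor(r_m, mass_kg):
    """Factor by which a static atomic clock at radius r must be sped up to
    agree with Schwarzschild coordinate time t (static exterior field only)."""
    rs = schwarzschild_radius(mass_kg)
    if r_m <= rs:
        raise ValueError("static clocks only exist outside the horizon")
    return 1 / math.sqrt(1 - rs / r_m)


if __name__ == "__main__":
    M_EARTH, R_EARTH = 5.972e24, 6.371e6  # kg, m (mean radius)
    f = clock_correction_factor(R_EARTH, M_EARTH)
    print(f"fractional rate correction at Earth's surface: {f - 1:.3e}")
```

At Earth's surface the correction is below one part in a billion, which is why it only matters for precision timekeeping; near a compact mass the factor grows without bound as r approaches r_s.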

-

The meaning of constancy of the speed of light

Differential geometry conforms with the general definitions of metric spaces; it's just that we have better means to represent the metric. But just to be clear, a diffeomorphism (see the Wikipedia definition) without any further specification is just a bijective function which is smooth in both directions. A piece of flat space is diffeomorphic to a piece of a spherical surface, and I want to stress that such diffeomorphisms are in no way unique. These spaces will have different connections, though. Do you mean a situation where your diffeomorphism pulls back the connection, i.e. the connection of the target space can be expressed via the connection of the source space?

Hmm, it's really neither of those two, though it is much closer to 1, except that your interpretation of how this maps to reality/experiment might differ from mine. In a way you could call it a different physical situation with different laws of physics which nevertheless produces equivalent outcomes. We need to work on the interpretation of this. Let's go back to how we interpret GR and how we compare it with reality/experiments. More specifically, how does spacetime show itself in reality? If we agree that the metric is simply a tool in our model to describe spacetime, then there are a few things we can obtain from it that map directly to objects of reality: proper time maps to what clocks measure, and proper length to what rulers show. And there are also angles. Does this mapping work the other way around as well? That is, given tools like clocks, rulers and goniometers (for angles), is that enough to reconstruct spacetime with its metric? Is this mapping kind of bijective and in some way smooth? ... Can we treat this interpretation mapping between model and reality almost like a diffeomorphism itself, one that pulls back a metric? What would happen to this line of thought if we exchanged the tools we use in reality (clocks & rulers) for a different set of tools which additionally apply a correction for gravity?
Let's assume the type of correction is such that these tools fulfil all the conditions to construct a mathematically valid space and metric. More specifically, let's assume they construct exactly the g' metric from my previous example in the Schwarzschild case.

The benefits are hard to see from the tedious construction, but there are actually multiple reasons I am looking into this. Maybe I could throw in an appetizer as a potential silver lining: the quantization of gravity would greatly benefit if there existed a method to equivalently model GR physics in a flat space where gravity arises as a force. But I do not want to discuss such ideas further as long as we don't have a common understanding.

Let's set aside that each new iteration of the definitions of the second and meter was made consistent with the previous ones, so that the original definitions weren't chosen with such a purpose in mind. Instead, let's go to Einstein. He postulated c to be invariant, and with it Maxwell's equations. A postulate is just as much a definition, and so c was set as invariant. The definition of the meter was derived in part from this idea. Let's not focus on how this postulate was obtained, but rather on what the direct consequence of such a definition is. Let's just consider what would happen if light somehow tried to travel at different speeds (and for some reason no one catches it). Even the first part of that sentence already causes a lot of contradictions with the meter, because we measure distance in units of light travel time in vacuum. So we would effectively be trying to measure the speed of light in vacuum in units of the speed of light, and so we measure that it moves at 1 c. It is by all means a tautology! It can neither logically deviate nor create any contradictions with reality; it's just how we measure stuff.
If light traveled slower in some region, it would cause Maxwell's equations to look different, but since the lengths that we use become shorter, plugging in the effect restores Maxwell's equations to their well-known invariant form. The only effect you will see instead is that space gets a dent around that region, which is no contradiction with GR, since even in the worst case of a missing explanation we have dark matter. What this means is that, whatever reality is, the definitions of the meter and second guarantee that Maxwell's equations in vacuum are invariant. At the same time there is nothing in this statement capable of producing a conflict with reality. For the meter, this tautological construction with SR is easy to see, but for the second it is far less obvious.
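The circularity for the meter can be made explicit with exact arithmetic. In this sketch the "true" local light speed `c_local` is a hypothetical input expressed in some external length unit per second; the meter is realized, as the SI prescribes, as the distance light covers in 1/299 792 458 s, and the second is simply taken as given (so the sketch says nothing about the harder case of the second).

```python
from fractions import Fraction

C_SI = 299_792_458  # defined numerical value of c in m/s


def measured_speed_of_light(c_local):
    """Speed of light reported in locally realized SI meters per second.

    c_local: hypothetical 'true' propagation speed in external units per second.
    """
    meter_local = c_local / C_SI     # external units per locally realized meter
    distance_per_second = c_local    # light's travel in one second, external units
    return distance_per_second / meter_local  # same distance, counted in local meters


for c_local in (Fraction(3), Fraction(299_792_458), Fraction(7, 5)):
    print(c_local, measured_speed_of_light(c_local))
```

Whatever value `c_local` takes, the result is exactly 299 792 458, which is the tautology described above.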

-

The meaning of constancy of the speed of light

I think we are getting close to an understanding, yet I do not entirely follow how you use the term metric and what having two of them means to you. We know that the Christoffel symbols of a Levi-Civita connection can be expressed via the metric, i.e. the metric fully specifies all components of this special connection. That means that if we have two metrics, each produces its own LC connection, and those will not agree with each other in the general case. As for equivalent metrics, I used the term the way I know it from metric spaces: https://en.wikipedia.org/wiki/Equivalence_of_metrics. But actually, I should have said the metrics are not isometric, that is, the identity id: (M,g) -> (M,g') is a diffeomorphism but not an isometry.

I think it's best to try an example to make things concrete. So let's start with the Schwarzschild situation. Let \( (x^0, x^1, x^2, x^3) = (t, r, \theta, \phi) \) be the usual choice of spherical coordinates, and let g be our regular metric associated with the standard clocks and rulers (SI second and meter). In these coordinates g is diagonal, with
\( g_{00} = -(1 - r_s/r), \quad g_{11} = 1/(1 - r_s/r), \quad g_{22} = r^2, \quad g_{33} = r^2 \sin^2\theta \).
The LC connection is given by
\( \Gamma^i_{kl} = \tfrac{1}{2} g^{im} (g_{mk,l} + g_{ml,k} - g_{kl,m}) \)
(I use the comma notation for the derivatives).

Let's assume the proposition of alternative clocks and rulers that correct for all gravitational effects. Let g' be the metric associated with these; written in the same coordinates it is
\( g'_{00} = g'_{11} = 1, \quad g'_{22} = r^2, \quad g'_{33} = r^2 \sin^2\theta \)
(why not make it Euclidean if c is not preserved here anyway). The Christoffel symbols \( \Gamma' \) of its connection are obtained from g' via the analogous formula. They are well known, since these are the spherical coordinates of flat space. Obviously those two connections are different and disagree. However, each is the Levi-Civita connection of its associated metric. And if we leave it at that, it will break physics, so we have to do the real work.
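The two connections in this example can be compared directly. The sketch below evaluates the Christoffel formula from the post by central finite differences in pure Python, assuming diagonal metrics (true for both g and g' here), units with c = 1, and arbitrary sample values r_s = 1, r = 3; it is a numerical check, not a symbolic derivation, and the function names are hypothetical.

```python
import math

RS = 1.0  # Schwarzschild radius (sample value, units with c = 1)


def g_schwarzschild(x):
    t, r, th, ph = x
    return [-(1 - RS / r), 1 / (1 - RS / r), r**2, (r * math.sin(th)) ** 2]


def g_prime(x):
    t, r, th, ph = x
    # Euclidean g' from the example: g'_00 = g'_11 = 1
    return [1.0, 1.0, r**2, (r * math.sin(th)) ** 2]


def christoffel(metric, x, h=1e-5):
    """Gamma[i][k][l] for a *diagonal* metric given by its diagonal entries."""
    n = 4
    diag = metric(x)
    # dg[m][a] = partial_m g_aa, via central differences
    dg = []
    for m in range(n):
        xp, xm = list(x), list(x)
        xp[m] += h
        xm[m] -= h
        gp, gm = metric(xp), metric(xm)
        dg.append([(gp[a] - gm[a]) / (2 * h) for a in range(n)])

    def d(m, a, b):  # partial_m g_ab, zero off the diagonal
        return dg[m][a] if a == b else 0.0

    gamma = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for k in range(n):
            for l in range(n):
                # only m = i contributes because g^{im} is diagonal
                gamma[i][k][l] = 0.5 / diag[i] * (d(l, i, k) + d(k, i, l) - d(i, k, l))
    return gamma


x = (0.0, 3.0, 1.0, 0.0)  # (t, r, theta, phi) with r = 3 r_s
G, Gp = christoffel(g_schwarzschild, x), christoffel(g_prime, x)
print(G[1][0][0], Gp[1][0][0])  # ~1/27 versus 0
print(G[1][2][2], Gp[1][2][2])  # ~-2 versus -3
```

The angular components such as \( \Gamma^2_{33} = -\sin\theta\cos\theta \) coincide for both metrics, while the components involving t and r disagree; those disagreeing components are exactly what has to be reinterpreted in the next step.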
This is where we apply the scheme I quoted here: https://www.scienceforums.net/topic/135672-the-meaning-of-constancy-of-the-speed-of-light/#comment-1286826. We start by looking at the laws of physics. In the original space no extra forces were needed, as curvature did everything. The new space with metric g' is, however, Euclidean, so it cannot work the same way. We look at the geodesic equation of a particle's world line in free fall and try to express it with the new connection, and we find that not all terms can be expressed with the new symbols. The left-over terms have to be interpreted as a newly appearing force, resembling something like a Lorentz force in structure, and thus quite a bit more complex than Newton's gravity. Yet any solutions expressed in terms of coordinates will by construction be the same as the original ones: we more or less just renamed/reinterpreted the terms, what is a force, what is the geometry/connection and all that. On that basis predictions stay the same, though doing all the interpretation based on coordinates alone is nasty. When we want to fully compare the results with experiments, these must now be performed with clocks and rulers corrected for gravitational effects (conforming to g'), because the laws of physics are now rewritten to have different invariances, which are based on the alternative second' and meter'. So we get an entirely different physical description of the situation, alternative laws of physics and a different interpretation, yet all combined together they still produce the same predictions.

Think of it this way: the definition of the SI second defines the caesium transition frequency as invariant (which is entirely electromagnetic in origin). Everything that behaves the same way will become invariant along with it. The SI meter defines c as invariant. With both the temporal and spatial parts of EM waves set as invariant, Maxwell's equations obtain their invariant nature.
In this situation, experiments cannot even logically violate this part of the model, as any disagreement with the prediction can be interpreted as a failure to correctly implement the SI standards for time and distance measurement rather than a flaw in the laws.

I suspect the isotropy of space is purely a convention. Experiments cannot verify or disprove conventions. Conventions can be assessed in terms of practicality, but not in terms of whether they are right or wrong. What would an experiment be looking for to disprove the isotropic nature of space? The issue I raised with this thread about c is that a counter-hypothesis cannot be properly formulated for experiments to check.
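Returning to the geodesic step in the Schwarzschild example: the split into "geometry" and "force" can be written in one line. This is a sketch assuming the same parameter λ is kept on both sides (the parametrization question is a separate issue); it only uses the standard fact that the difference of two connections is a tensor.

```latex
\frac{d^2x^i}{d\lambda^2} + \Gamma^i_{kl}\,\frac{dx^k}{d\lambda}\frac{dx^l}{d\lambda} = 0
\quad\Longleftrightarrow\quad
\frac{d^2x^i}{d\lambda^2} + \Gamma'^i_{kl}\,\frac{dx^k}{d\lambda}\frac{dx^l}{d\lambda}
  = -\,D^i_{kl}\,\frac{dx^k}{d\lambda}\frac{dx^l}{d\lambda},
\qquad D^i_{kl} := \Gamma^i_{kl} - \Gamma'^i_{kl}
```

The left-hand side is the geodesic operator of g', and the right-hand side is the left-over term read as a force. It depends quadratically on the velocity, so it carries more structure than a Newtonian force. In the Schwarzschild example, \( D^1_{00} = \Gamma^1_{00} = \frac{r_s}{2r^2}\left(1 - \frac{r_s}{r}\right) \), because \( \Gamma'^1_{00} = 0 \).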

-

The meaning of constancy of the speed of light

Nooo... I lost my post when I accidentally clicked the back button on my mouse.... I suppose there is no cache I could get my post back from? Ugh..

Sorry, I missed a crucial word. We have two Riemannian manifolds built on the same smooth manifold. A smooth manifold gives you differentiable maps; that is enough to define affine connections and play with coordinates, but not much else. A Riemannian manifold extends this by defining a metric. This allows us to define things like curvature, and Levi-Civita connections only exist in this context, as they are defined relative to a metric which they preserve. Every Levi-Civita connection is also an affine connection, and in this sense we can look at it from the perspective of both Riemannian manifolds and their metrics. If the metrics are not equivalent, then the LC connection of one metric cannot simultaneously preserve the other metric.

Now as for time-like and space-like, these words are defined in terms of the metric, i.e. they are specific to the geometry. Remember that X is defined as time-like if g(X,X) < 0. But if we have two non-isometric Riemannian manifolds, then there can be an X such that g(X,X) < 0 < g'(X,X). So if c changes, it is not because it changed, but rather because c' is not the same thing. And you are right in your suspicion that I use a different parametrization of world lines. However, this is how the situation looks when you stay on the original Riemannian manifold. I do intend to treat the parametrization as a proper time, and for that I need a metric g' which produces this parametrization as proper time via the analogous formula.

I think faster than I can type, so I often skip crucial details. I am sorry for that. Thank you for bearing with me so far.

Indeed, losing the isotropy of space is what is required, and yes, this has to end up in an aether-like physical model. The math then reflects this when we try to rewrite the Maxwell equations, as given with the usual connection of GR, in terms of another connection.
If the new connection no longer preserves the original metric (but some other one), then it becomes a lot harder to express the Christoffel symbols of one connection in terms of those of the other. In fact, we need to introduce additional fields to do that; one such field is c(t,x). But the geometry has a lot more degrees of freedom (10 come from the metric tensor), so many more fields come out of such a transformation. A general formula cannot be written down without specifying how the two metrics and their Levi-Civita connections relate to each other. That would need a concrete proposal for how to adjust the definition of the second (i.e. what clocks to use), and I am not sure of that yet.
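The claim that the causal character of a vector depends on the metric can be checked with the two metrics from the Schwarzschild example. This is a sketch with sample values of my own choosing (r_s = 1, r = 1.5, θ = π/2, c = 1) and a hypothetical helper name.

```python
def causal_character(diag, X):
    """Classify X using a diagonal metric given by its diagonal entries."""
    q = sum(gi * xi * xi for gi, xi in zip(diag, X))
    if q < 0:
        return "time-like", q
    if q > 0:
        return "space-like", q
    return "null", q


RS, r = 1.0, 1.5
g = [-(1 - RS / r), 1 / (1 - RS / r), r**2, r**2]  # Schwarzschild g at theta = pi/2
g_prime = [1.0, 1.0, r**2, r**2]                   # Euclidean g' from the example
X = (1.0, 0.1, 0.0, 0.0)                           # mostly along t, small radial part

print(causal_character(g, X))        # g(X,X) < 0
print(causal_character(g_prime, X))  # g'(X,X) > 0
```

With the Euclidean g', every nonzero vector is space-like, so the disagreement here is maximal. A Lorentzian g' with a differently shaped light cone would show a subtler version of the same effect.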