Everything posted by Cap'n Refsmmat

-

This is true. We have, however, given explanations of what behavior is prohibited: http://www.scienceforums.net/topic/52262-uhhm-wheres-the-politics-forum/page__view__findpost__p__568200

In private messages and warnings, including the last time he was suspended, I have given iNow long and detailed explanations of what behavior is unacceptable. iNow made it clear that he does not wish to change his posting behavior and does not believe he can change, regardless of what we say.

One cannot consider this suspension in isolation. We have certainly missed other members who have posted insults and personal attacks, but when I received a report about this particular post, it was clear that a simple note in-thread would not suffice.

You do not fall into the category of tactful incivility. You fall into the category of gleeful incivility.

Our moderation policy is based on gradual escalation. First we post notes in-thread to request civility. If that fails, we warn the member by PM, or suspend them for a few days with a note explaining their rule violations. If that fails, we suspend for longer. Eventually we give up and ban the member. Most SFN members have reached the first step. You have regular step aerobics workouts. Hence, we crack down harder.

Also, not all moderation is visible. It's incorrect to assume that because you see no moderation note, we never talked to a member about their behavior. Recently I have discussed attitude problems with members in the chatroom, or through private messages. People may appear to get away scot-free in public, but we may have a chat and ensure they do not re-offend.

-

It is entirely true that we could be more effective in finding and punishing rule violations. There were 284 posts made on SFN yesterday, and I did not read all of them; I am limited to what people report as a rule violation. (Hint, hint.) However, when I come across a report citing a post from a member who has been reprimanded numerous times in the past, lectured about civility, and even been threatened with suspension, I move quickly.

zapatos is right. Tact is important. I'd like to crack down on tactful incivility, but I fear there wouldn't be many posts left.

-

How do astronauts survive for six months in the International Space Station, then? They seem to digest food just fine.

-

That's beside the point. Saying "There is no evidence that they don't exist" is not evidence of their existence. How do you accurately determine whether an event is miraculous, or merely unlikely? To prove it is miraculous you must demonstrate that no possible natural cause could exist.

-

Yes, really. There is a difference between correcting minor errors and assuming that perceived minor errors are a sign of total incompetence. There is a difference between helping neophytes better understand physics and convincing them that we're all unapproachable academic snobs. I say this as the foremost expert on attitudes appropriate for SFN. Please choose tact in the future.

-

Possible sources of error in fatigue testing?

Cap'n Refsmmat replied to VelocityGirl's topic in Engineering

That all depends on how you performed the fatigue testing, and what instruments you used.

-

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

It's worth noting that the paradoxical results I mentioned are essentially statistical examples of the saying "extraordinary claims require extraordinary evidence." If you test 1000 hypotheses, only 1 of which is actually true, you will be overwhelmed with statistically significant false positives. Bayesian statistics takes into account the "prior probability" of a hypothesis being true, and estimates how likely a hypothesis is to be true given the new evidence collected.

Here's an account of how statistical problems affected another study searching for psychic powers: http://commonsenseatheism.com/wp-content/uploads/2010/11/Wagenmakers-Why-Psychologists-Must-Change-the-Way-They-Analyze-Their-Data.pdf
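The role of the prior probability can be made concrete with Bayes' theorem. This is only a minimal sketch: the 0.05 threshold and the assumption of a perfectly powered test are illustrative choices not stated in the post, and the helper name is hypothetical.

```python
def chance_true_given_significant(prior, alpha=0.05, power=1.0):
    """Bayes' theorem: P(hypothesis is true | the result was statistically significant).

    prior -- fraction of tested hypotheses that are actually true
    alpha -- significance threshold (assumed value, not from the post)
    power -- chance a true effect yields a significant result (assumed perfect)
    """
    true_positive = power * prior
    false_positive = alpha * (1.0 - prior)
    return true_positive / (true_positive + false_positive)


# 1 true hypothesis among 1000 tested, as in the post; the other priors are illustrative.
for prior in (0.001, 0.1, 0.5):
    posterior = chance_true_given_significant(prior)
    print(f"prior {prior:.3f} -> chance a significant result is real: {posterior:.3f}")
```

With a prior of 1 in 1000, even a perfectly powered test leaves a significant result far more likely to be a false positive than a real effect.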

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

Here is the study: http://annals.ba0.biz/content/132/11/903.short

Please do not misrepresent me. My claim is not that statisticians are incompetent, or that their computations of p-values are inaccurate. My claim is that one should not interpret p < 0.01 as meaning "we're 99% sure of our hypothesis", because that is false; the odds of a false positive are generally large.

-

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

No statistical significance test can tell you there is a 5% chance that the null hypothesis is true. No study can report that information, because saying "p < 0.05" does not tell you that. p < 0.05 means that, if the null hypothesis were true, there'd be less than a 5% chance of obtaining results that look like the data you collected. The p-value is computed under the assumption that the null hypothesis is true. It cannot be used to determine the odds that the null hypothesis is, in fact, true.

My computations are a result of the definition of p-values. The results are unavoidable. You can, however, collect more data and set the bar higher, requiring significance of, say, p < 0.01, or p < 0.001. This cuts down on false positives significantly, but also means that psychics with weak powers will be regarded as frauds, rather than merely unskilled psychics.

You should receive a copy shortly.
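The trade-off from raising the bar can be sketched with an exact one-sided binomial test. Everything specific here is an assumption made for illustration, not something from the post: a guessing task with a 25% chance hit rate, 100 trials per psychic, and a "weak" psychic who hits 35% of the time. The function names are hypothetical.

```python
from math import comb


def binom_tail(n, k, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def critical_hits(n, p_null, alpha):
    """Smallest hit count whose one-sided p-value under the null is below alpha."""
    for k in range(n + 2):  # k = n + 1 has an empty tail (p-value 0), so this always returns
        if binom_tail(n, k, p_null) < alpha:
            return k


def threshold_tradeoff(n, p_null, p_real, alpha):
    """False-positive rate and power of the test at a given significance threshold."""
    k = critical_hits(n, p_null, alpha)
    return {
        "critical_hits": k,
        "false_positive_rate": binom_tail(n, k, p_null),  # frauds who pass anyway
        "power": binom_tail(n, k, p_real),  # weak psychics who are detected
    }


# Assumed setup: 100 guesses, 25% hit rate by chance, 35% for a weak psychic.
for alpha in (0.05, 0.01, 0.001):
    row = threshold_tradeoff(100, 0.25, 0.35, alpha)
    print(
        f"p < {alpha}: need {row['critical_hits']} hits, "
        f"false positives {row['false_positive_rate']:.4f}, power {row['power']:.3f}"
    )
```

Under these assumptions, each stricter threshold lets fewer frauds through but also detects fewer of the weak psychics, which is the trade-off described above.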

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

I'm not sure how this relates to my point. You stated earlier that a high statistical significance value (say, p < 0.05) represents a small chance that the null hypothesis is true. This is not true. Statistical significance is calculated under the assumption that the null hypothesis is true, and tells you the likelihood of obtaining this particular data.

Suppose I design a trial which is to test 1000 purported psychics. Of these psychics, only 100 are truly "gifted", and the rest are merely fraudulent or deluded. I test their abilities in some standard psychic test, and compare them against a control group of individuals with no known psychic powers. I use a statistical significance test to determine if the psychics perform any better than the control group.

My test has very good statistical power, so I detect all 100 "gifted" psychics and accurately determine that they have psychic powers. However, there are 900 frauds in the group; the chance of any given fraud (for whom the null hypothesis of no powers is in fact true) producing a statistically significant result with p < 0.05 is 5%, so about 45 frauds will be determined to have true psychic powers. Hence I have detected 145 psychics, only about two-thirds of whom are actually psychic. Despite looking for p < 0.05, any individual "confirmed psychic" only has a 69% chance of being psychic, and a 31% chance of being a fraud.

Similarly, if none of the 1000 evaluated psychics had any powers at all, I would still detect 50 false positives. This is why interpreting p < 0.05 as "less than 5% chance of this being a fluke" is incorrect.

edit: I should also point out that this example is almost directly lifted from this article: Sterne, J., Smith, G., & Cox, D. (2001). "Sifting the evidence—what's wrong with significance tests? Another comment on the role of statistical methods." British Medical Journal, 322(7280), 226. I can send you a copy if you're interested.
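The arithmetic in this example can be checked with a few lines of code. This is a rough sketch using expected counts; the function and parameter names are illustrative and do not come from the cited article.

```python
def confirmed_psychic_breakdown(n_total, n_gifted, alpha=0.05, power=1.0):
    """Expected outcome of testing n_total purported psychics.

    n_gifted -- how many truly have powers (the null hypothesis is false for them)
    alpha    -- significance threshold, i.e. the chance a fraud passes the test
    power    -- chance a truly gifted psychic passes the test
    """
    n_frauds = n_total - n_gifted
    true_positives = n_gifted * power
    false_positives = n_frauds * alpha
    detected = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "detected": detected,
        "share_real": true_positives / detected if detected else 0.0,
    }


# The trial from the post: 1000 tested, 100 gifted, p < 0.05, every gifted psychic detected.
trial = confirmed_psychic_breakdown(1000, 100)
print(trial)  # share_real is 100 / 145

# The second scenario from the post: nobody has any powers at all.
no_powers = confirmed_psychic_breakdown(1000, 0)
print(no_powers)  # every detection is a false positive
```

Lowering `power` below 1.0 makes the picture worse, since fewer real psychics are detected while the number of frauds who pass stays the same.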

The Official "Introduce Yourself" Thread

Cap'n Refsmmat replied to Radical Edward's topic in The Lounge

I joined this site when I was age 12. (Not grade 12, but 12 years old.) It is an excellent place to ask questions and learn. I hope you enjoy it.

-

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

That is most emphatically not what statistical significance tests mean. I have an overview of this common misconception on my blog: http://blogs.scienceforums.net/capn/2011/02/21/statistical-significance-of-doom/

Statistical significance by itself cannot tell you the odds of the data occurring "by chance". Getting a result with p < 0.05 does not mean a less than 5% chance of your result being a fluke; in many cases, with small sample sizes and poor statistical methods, the odds of your result being a fluke can be over 50%.

-

A Quick Glance at "Brian Cox is Full of **it"

Cap'n Refsmmat replied to Xittenn's topic in Quantum Theory

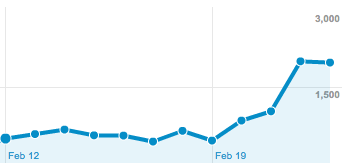

Nicely done, swansont: That's the chart of pageviews per day for the entire SFN Blogs network. Picking fights with famous people is a good idea, apparently.

-

I admin this site. Personally deleting you is a remarkably good suggestion. Consider it done.

-

A Quick Glance at "Brian Cox is Full of **it"

Cap'n Refsmmat replied to Xittenn's topic in Quantum Theory

He doesn't claim to be proposing new science; he claims to represent what already-accepted science says, and apparently instead misrepresented it.

-

A Quick Glance at "Brian Cox is Full of **it"

Cap'n Refsmmat replied to Xittenn's topic in Quantum Theory

Sean Carroll posted another interpretation of Cox's words on his blog: http://blogs.discovermagazine.com/cosmicvariance/2012/02/23/everything-is-connected/ Seems like Cox isn't going to get out of this easily.

-

A Quick Glance at "Brian Cox is Full of **it"

Cap'n Refsmmat replied to Xittenn's topic in Quantum Theory

Cox's idea is explained in some detail here: http://www.hep.manchester.ac.uk/u/forshaw/BoseFermi/Double%20Well.html

-

"Consciousness," the missing 'unified theory' factor?

Cap'n Refsmmat replied to owl's topic in Speculations

This is getting tiresome. Can we just admit it doesn't really matter and move on? If you don't believe owl, then ignore that part of the argument and move on. There's an actual topic to discuss.

-

Er, no, perhaps you are. Certainly nobody should expect unjustified assertions to be accepted on SFN, but you should also know better than to think that blatant flaming belongs on SFN. We've been over this many times. Enjoy your week's vacation.

-

At some point (the critical field), the high magnetic field causes the superconductor to no longer be superconducting. The other practical concern is quenching; if the magnet warms up and develops resistance, the huge current starts melting things.

-

Big Bang and only 14 billion years?

Cap'n Refsmmat replied to Jiggerj's topic in Astronomy and Cosmology

Cut it out. Civility is part of the rules; you will not improve the behavior of others by providing worse behavior yourself.

-

Please stop. We don't exist to advertise your blog.

-

No, it should be immediate. I'm not sure what could cause a delay.