Duda Jarek

Everything posted by Duda Jarek

-

Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

Arnold Neumaier has responded on Stack Exchange ( https://physics.stackexchange.com/questions/397022/experimental-boundaries-for-size-of-electron ) - he has gathered a lot of material on this topic: https://www.mat.univie.ac.at/~neum/physfaq/topics/pointlike.html But there is still no clear argument that the electron is much smaller than a femtometer (?)

Anyway, to better specify the problem, define E(r) as the energy contained in a ball of radius r around the electron. We know that E(r) ~ 511 keV for large r; for smaller r it is reduced, e.g. by the energy of the electric field outside the ball. Assuming a perfect point charge, we would get E(r) -> -infinity for r -> 0 this way. Where does the deviation from this assumption start?

More specifically: for example, where is the maximum of E'(r) - at which distance is the deposition of the 511 keV energy maximal? Or the median range: such that E(r) = 511/2 keV. It is not a question about the exact values, only their scale: ~femtometer or much lower?
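To make the scale question concrete, here is a rough worked version under the perfect-point-charge assumption only (an illustration, not a claim about the real electron). It assumes that the only energy outside the ball is that of the Coulomb field, and it writes the field as \(\mathcal{E}\) to avoid a clash with E(r):

```latex
\[
E(r) \approx m_e c^2 - \int_r^\infty \frac{\varepsilon_0}{2}\,|\mathcal{E}(r')|^2\, 4\pi r'^2\, dr'
     = m_e c^2 - \frac{\alpha \hbar c}{2r}
\]
\[
E(r_0) = 0 \;\Rightarrow\; r_0 = \frac{\alpha\hbar c}{2 m_e c^2} \approx 1.4\ \mathrm{fm},
\qquad
E(r_{1/2}) = \frac{m_e c^2}{2} \;\Rightarrow\; r_{1/2} = \frac{\alpha\hbar c}{m_e c^2} \approx 2.8\ \mathrm{fm}
\]
```

So under this naive assumption both the zero crossing and the median range land at the femtometer scale; any real deviation from the point-charge picture would change these numbers.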

How quantum is wave-particle duality of Couder's walking droplets?

Duda Jarek replied to Duda Jarek's topic in Physics

Sure, it misses a lot of real physics: for example, it seems impossible to model 3D this way, and the clock here is external, while in physics it is rather internal to particles (de Broglie's clock, zitterbewegung): https://physics.stackexchange.com/questions/386715/does-electron-have-some-intrinsic-1021-hz-oscillations-de-broglies-clock But these hydrodynamical analogues provide very valuable intuitions about the real physics ...

How quantum is wave-particle duality of Couder's walking droplets?

Duda Jarek replied to Duda Jarek's topic in Physics

Oh, muuuch more has happened - see my slides with links to materials: https://www.dropbox.com/s/kxvvhj0cnl1iqxr/Couder.pdf
- Interference in particle statistics of the double-slit experiment (PRL 2006) - the corpuscle travels one path, but its "pilot wave" travels all paths, affecting the trajectory of the corpuscle (measured by detectors).
- Unpredictable tunneling (PRL 2009) due to the complicated state of the field ("memory"), depending on the history - they observe an exponential drop of the probability of crossing a barrier with its width.
- Landau orbit quantization (PNAS 2010) - using rotation and the Coriolis force as an analogue of magnetic field and the Lorentz force (Michael Berry 1980). The intuition is that the clock has to find a resonance with the field to make it a standing wave (e.g. described by Schrödinger's equation).
- Zeeman-like level splitting (PRL 2012) - quantized orbits split proportionally to the applied rotation speed (with sign).
- Double quantization in a harmonic potential (Nature 2014) - separately of both radius (instead of the standard: energy) and angular momentum. E.g. the n=2 state switches between the m=2 oval and the m=0 lemniscate of 0 angular momentum.
- Recreating an eigenstate from statistics of a walker's trajectories (PRE 2013).
In the slides there are also hydrodynamical analogues of the Casimir and Aharonov-Bohm effects.

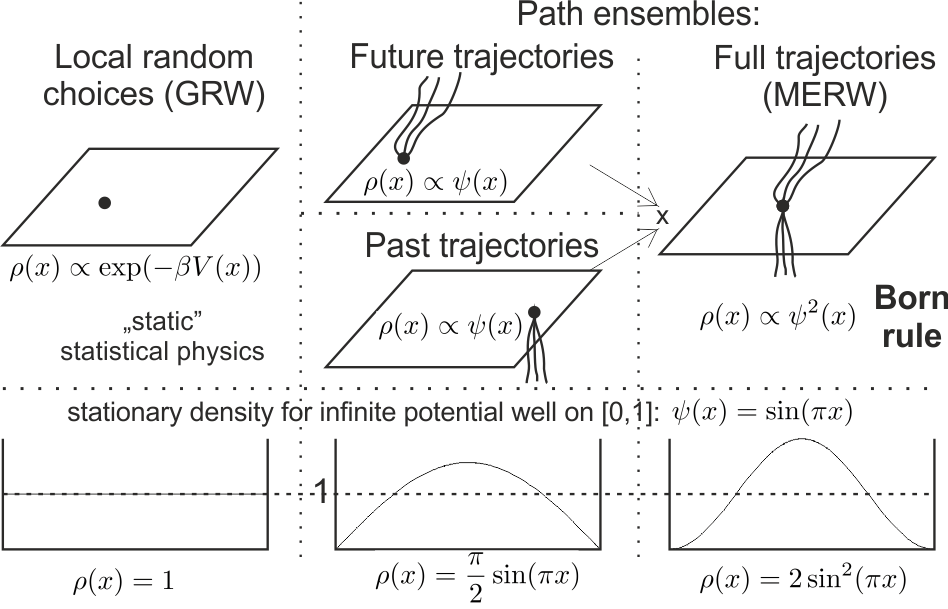

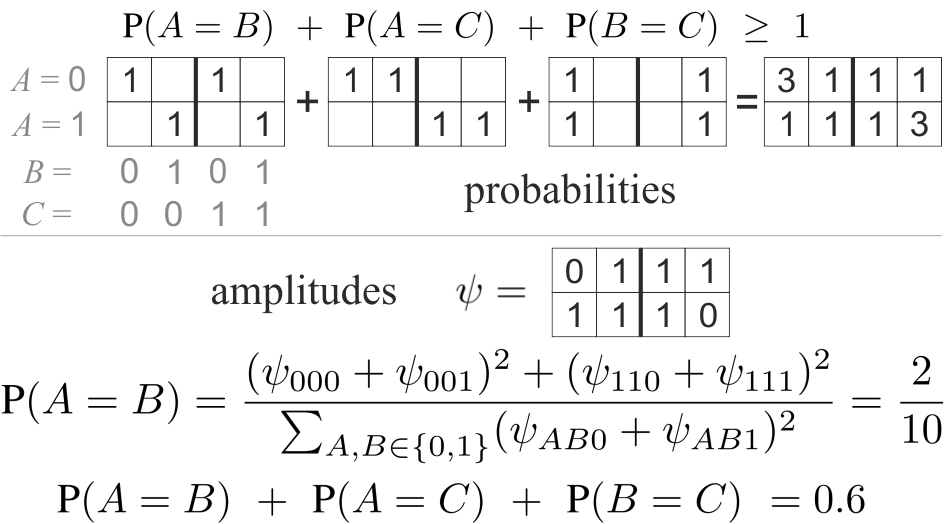

While the original Bell inequality might leave some hope for violation, here is one which seems completely impossible to violate - for three binary variables A, B, C:

Pr(A=B) + Pr(A=C) + Pr(B=C) >= 1

It has an obvious intuitive proof: tossing three coins, at least two of them have to give the same value. Alternatively, choosing any probability distribution pABC among these 2^3=8 possibilities, we have:

Pr(A=B) = p000 + p001 + p110 + p111 ...
Pr(A=B) + Pr(A=C) + Pr(B=C) = 1 + 2 p000 + 2 p111

... however, it is violated in QM, see e.g. page 9 here: http://www.theory.caltech.edu/people/preskill/ph229/notes/chap4.pdf

If we want to understand why our physics violates Bell inequalities, the above one seems the best to work on, as it is the simplest and has an absolutely obvious proof. QM uses the Born rule for this violation:
1) Intuitively: the probability of a union of disjoint events is the sum of their probabilities: pAB? = pAB0 + pAB1, leading to the above inequality.
2) Born rule: the probability of a union of disjoint events is proportional to the square of the sum of their amplitudes: pAB? ~ (psiAB0 + psiAB1)^2

Such a Born rule allows violating this inequality, reaching 3/5 < 1, by using psi000=psi111=0, psi001=psi010=psi011=psi100=psi101=psi110 > 0.

We get such a Born rule when considering an ensemble of trajectories: proper statistical physics shouldn't see particles as just points, but rather as their trajectories, and consider e.g. a Boltzmann ensemble of them - as in Feynman's Euclidean path integrals or their thermodynamical analogue: MERW (Maximal Entropy Random Walk: https://en.wikipedia.org/wiki/Maximal_entropy_random_walk ). For example, looking at the [0,1] infinite potential well, the standard random walk predicts a uniform rho=1 probability density, while QM and the uniform ensemble of trajectories predict a different rho~sin^2 with localization, and the square, as in the Born rule, has a clear interpretation:

Is the ensemble of trajectories the proper way to understand the violation of this obvious inequality?
Compared with the local realism of the Bell theorem, the path ensemble has realism and is non-local in the standard "evolving 3D" way of thinking ... however, it is local in the 4D view: spacetime, Einstein's block universe - where particles are their trajectories. What other models with realism allow violating this inequality?
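Both claims above can be checked numerically. The following is a minimal sketch: the function names are made up for illustration, and the "Born rule" here is exactly the rule 2) from the post (sum amplitudes over the unmeasured variable, square, normalize), not a full quantum-mechanical simulation.

```python
import itertools
import random

def equality_sum(p):
    """Pr(A=B) + Pr(A=C) + Pr(B=C) for a dict p[(a, b, c)] of probabilities."""
    total = 0.0
    for (a, b, c), prob in p.items():
        total += prob * ((a == b) + (a == c) + (b == c))
    return total

def random_distribution(rng):
    """A random probability distribution over the 8 outcomes of (A, B, C)."""
    weights = [rng.random() for _ in range(8)]
    s = sum(weights)
    outcomes = itertools.product((0, 1), repeat=3)
    return {abc: w / s for abc, w in zip(outcomes, weights)}

def born_pair_equal(psi, i, j):
    """Probability that variables i and j agree when the third is unmeasured:
    amplitudes are summed over the unmeasured variable, then squared."""
    k = 3 - i - j  # index of the unmeasured variable
    weight = {}
    for vi in (0, 1):
        for vj in (0, 1):
            amp = 0.0
            for vk in (0, 1):
                abc = [0, 0, 0]
                abc[i], abc[j], abc[k] = vi, vj, vk
                amp += psi[tuple(abc)]
            weight[(vi, vj)] = amp ** 2
    return (weight[(0, 0)] + weight[(1, 1)]) / sum(weight.values())

# Classical case: the sum never drops below 1 for any distribution.
rng = random.Random(0)
classical_min = min(equality_sum(random_distribution(rng)) for _ in range(10000))

# Amplitude example from the post: psi000 = psi111 = 0, all others equal.
psi = {abc: 1.0 for abc in itertools.product((0, 1), repeat=3)}
psi[(0, 0, 0)] = psi[(1, 1, 1)] = 0.0
born_sum = sum(born_pair_equal(psi, i, j) for i, j in ((0, 1), (0, 2), (1, 2)))

print(classical_min, born_sum)
```

Each pair contributes 2/10 under the amplitude rule, which is where the 3/5 comes from.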


Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

Sure, it isn't - fm size is only a suggestion, but a general conclusion here is that the cross-section does not offer a sub-femtometer bound on electron size (?) Dehmelt's argument of fitting a parabola to 2 points, so that the third point is 0 for g=2 ... is a "proof" of tiny electron radius that assumes the thesis ... and at most argues against an electron built of 3 smaller fermions. So what experimental evidence bounding the size of the electron do we have?

Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

Sure, so here is the original Cabibbo 1961 paper on electron-positron collisions: https://journals.aps.org/pr/abstract/10.1103/PhysRev.124.1577 Its formula (10) says sigma ~ \beta/E^2 ... whose extrapolation to a resting electron gives ~2 fm radius. Indeed, it would be great to understand corrections to the potential used in Schrödinger/Dirac equations, especially for r~0 situations like electron capture (by a nucleus), internal conversion or positronium. The standard potential V ~ 1/r goes to infinity there; to get a finite electric field energy we need to deform it at the femtometer scale.

Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

Could you give some number? An article? We can naively interpret the cross-section as the area of the particle, but the question is: the cross-section for which energy should we use for this purpose? Naive extrapolation to a resting electron (not Lorentz contracted) suggests ~2 fm electron radius this way (which agrees with the size of the deformation of the electric field needed not to exceed 511 keV energy). Could you propose some different extrapolation?

Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

So can you say something about electron size based on electron-positron cross section alone? -

Experimental boundaries for size of electron?

Duda Jarek replied to Duda Jarek's topic in Modern and Theoretical Physics

No matter the interpretation, if we want bounds on the size of the electron, they shouldn't be calculated for a Lorentz contracted electron, but for a resting electron - extrapolate the above plot to gamma=1 ... or take direct values: So what bound on the size of a (resting) electron can you calculate from cross-sections of electron-positron scattering?

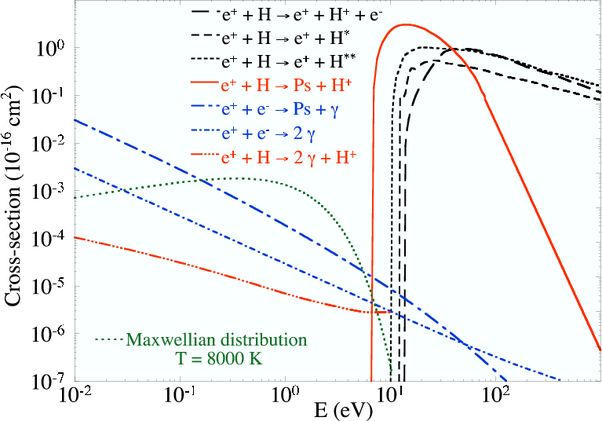

There is some confidence that the electron is a perfect point, e.g. to simplify QFT calculations. However, searching for experimental evidence (stack), the Wikipedia article only points to an argument based on the g-factor being close to 2: Dehmelt's 1988 paper extrapolating from proton and triton behavior that the RMS (root mean square) radius for particles composed of 3 fermions should be ~g-2:

Using more than two points for fitting this parabola, it wouldn't look so great, e.g. the neutron (udd) has g ~ -3.8, \(\langle r^2_n\rangle \approx -0.1\ \mathrm{fm}^2\).

And while classically the g-factor is said to be 1 for a rotating object, this assumes equal mass and charge density. Generally we can classically get any g-factor by modifying the charge-mass distribution:
\[ g=\frac{2m}{q} \frac{\mu}{L}=\frac{2m}{q} \frac{\int A\,dI}{\omega I}=\frac{2m}{q} \frac{\int \pi r^2 \rho_q(r)\frac{\omega}{2\pi}\, dr}{\omega I}= \frac{m}{q}\frac{\int \rho_q(r)\, r^2\, dr}{\int \rho_m(r)\, r^2\, dr} \]

Another argument for the point nature of the electron is its tiny cross-section, so let's look at it for electron-positron collisions:

Besides some bumps corresponding to resonances, we see a linear trend in this log-log plot: 1 nb for 10 GeV (5 GeV per lepton), 100 nb for 1 GeV. The 1 GeV case means \(\gamma\approx1000\), which is also the Lorentz contraction factor: geometrically it means a gamma times reduction of size, hence a \(\gamma^2\) times reduction of cross-section - exactly as in this line on the log-log scale plot. A more proper explanation is that it is for a collision - transforming to the frame of reference where one particle rests, we get \(\gamma \to \approx \gamma^2\). This asymptotic \(\sigma \propto 1/E^2\) behavior in colliders is well known (e.g. (10) here) - wanting the size of a resting electron, we need to take it from GeVs down to E = 511 keV. Extrapolating this line (no resonances) to the resting electron (\(\gamma=1\)), we get 100 mb, corresponding to ~2 fm radius.

On the other hand, we know that two EM photons having 2 x 511 keV energy can create an electron-positron pair, hence energy conservation doesn't allow the electric field of the electron to exceed 511 keV energy, which requires some deformation of it at the femtometer scale away from \(E\propto1/r^2 \):
\[ \int_{1.4\,\mathrm{fm}}^\infty \frac{1}{2} |E|^2\, 4\pi r^2\, dr\approx 511\ \mathrm{keV} \]

Could anybody elaborate on concluding an upper bound for the electron radius from the g-factor itself, or point to a different experimental bound? Does it forbid the electron's parton structure: being "composed of three smaller fermions" as Dehmelt writes? Does it also forbid some deformation/regularization of the electric field to a finite energy?
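The two femtometer-scale numbers in this post can be reproduced with a few lines of arithmetic. This is only a sketch of the naive extrapolation described above: it assumes the 100 nb at 1 GeV reference point read from the plot, takes the "resting" energy as the total 2 x 511 keV (an assumption about which E the line should be extended to), and treats the cross-section as a disc area. The function names are made up for illustration.

```python
import math

HBAR_C_MEV_FM = 197.327      # hbar*c in MeV*fm
ALPHA = 1 / 137.036          # fine-structure constant
ELECTRON_MEV = 0.511         # electron rest energy in MeV
FM2_PER_MB = 0.1             # 1 barn = 100 fm^2, so 1 mb = 0.1 fm^2

def extrapolated_cross_section_mb(e_total_mev, ref_nb=100.0, ref_mev=1000.0):
    """Extend sigma ~ 1/E^2 from the reference point (100 nb at 1 GeV)."""
    sigma_nb = ref_nb * (ref_mev / e_total_mev) ** 2
    return sigma_nb * 1e-6   # nb -> mb

def disc_radius_fm(sigma_mb):
    """Radius of a disc whose area equals the cross-section."""
    return math.sqrt(sigma_mb * FM2_PER_MB / math.pi)

def field_cutoff_radius_fm(energy_mev=ELECTRON_MEV):
    """Radius r0 at which the Coulomb field energy outside r0,
    alpha*hbar*c / (2*r0), equals the given energy."""
    return ALPHA * HBAR_C_MEV_FM / (2 * energy_mev)

sigma_rest = extrapolated_cross_section_mb(2 * ELECTRON_MEV)
radius_from_sigma = disc_radius_fm(sigma_rest)
cutoff = field_cutoff_radius_fm()

print(f"extrapolated sigma ~ {sigma_rest:.0f} mb")
print(f"disc radius        ~ {radius_from_sigma:.2f} fm")
print(f"field cutoff r0    ~ {cutoff:.2f} fm")
```

The extrapolated value comes out slightly under 100 mb, and both radii fall between 1 and 2 fm, consistent with the rounded numbers quoted in the post.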

-

Four-dimensional understanding of quantum computers

Duda Jarek replied to Duda Jarek's topic in Quantum Theory

Thanks, I would gladly discuss. The main part of the paper is MERW ( https://en.wikipedia.org/wiki/Maximal_Entropy_Random_Walk ), showing why standard diffusion has failed (e.g. predicting that a semiconductor is a conductor) - because it has used only an approximation of the (Jaynes) principle of maximum entropy (required by statistical physics), and if using the real entropy maximum (MERW), there is no longer a discrepancy - e.g. its stationary probability distribution is exactly as in the quantum ground state.

In fact, MERW turns out to just assume a uniform or Boltzmann distribution among possible paths - exactly like in Feynman's Euclidean path integrals (there are some differences), hence the agreement with quantum predictions is not a surprise (while MERW is still just a (repaired) diffusion).

This includes the Born rule: probabilities being (normalized) squares of amplitudes - the amplitude describes the probability distribution at the end of half-paths toward the past or the future in the Boltzmann ensemble among paths; to randomly get some value at a given moment we need to "draw it" from both time directions - hence the probability is the square of the amplitude:

Which leads to violation of Bell inequalities: at the top below there is a derivation of a simple Bell inequality (true for any probability distribution among 8 possibilities for 3 binary variables ABC), and at the bottom there is an example of its violation assuming the Born rule:
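The claim that the MERW stationary distribution matches the quantum ground state can be illustrated on the simplest case, a path graph as a discretized infinite well. This is a minimal sketch, not code from the paper: it uses the known MERW property that the stationary probability is the square of the dominant eigenvector of the adjacency matrix, and finds that eigenvector by power iteration on A + I (the shift is my choice, to avoid the oscillation that the bipartite path graph would cause for plain A).

```python
import math

def merw_stationary_path_graph(n, iterations=5000):
    """MERW stationary distribution on a path graph with n nodes:
    rho_i = psi_i^2, psi = dominant eigenvector of the adjacency matrix."""
    psi = [1.0] * n
    for _ in range(iterations):
        new = []
        for i in range(n):
            left = psi[i - 1] if i > 0 else 0.0
            right = psi[i + 1] if i < n - 1 else 0.0
            new.append(psi[i] + left + right)   # one step of (A + I) psi
        norm = math.sqrt(sum(x * x for x in new))
        psi = [x / norm for x in new]
    return [x * x for x in psi]                 # sums to 1 since |psi| = 1

def sin_squared_profile(n):
    """Normalized sin^2 profile, the discrete ground-state density of the well."""
    values = [math.sin(math.pi * (i + 1) / (n + 1)) ** 2 for i in range(n)]
    total = sum(values)
    return [v / total for v in values]

def standard_walk_stationary(n):
    """Standard random walk: stationary probability proportional to node degree."""
    degrees = [1 if i in (0, n - 1) else 2 for i in range(n)]
    total = sum(degrees)
    return [d / total for d in degrees]

n = 10
rho_merw = merw_stationary_path_graph(n)
rho_sin2 = sin_squared_profile(n)
rho_grw = standard_walk_stationary(n)
max_diff = max(abs(a - b) for a, b in zip(rho_merw, rho_sin2))

print("MERW:         ", [round(x, 3) for x in rho_merw])
print("standard walk:", [round(x, 3) for x in rho_grw])
```

The standard walk is nearly uniform, while the MERW density is localized in the middle of the well and agrees with the sin^2 profile.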

Four-dimensional understanding of quantum computers

Duda Jarek replied to Duda Jarek's topic in Quantum Theory

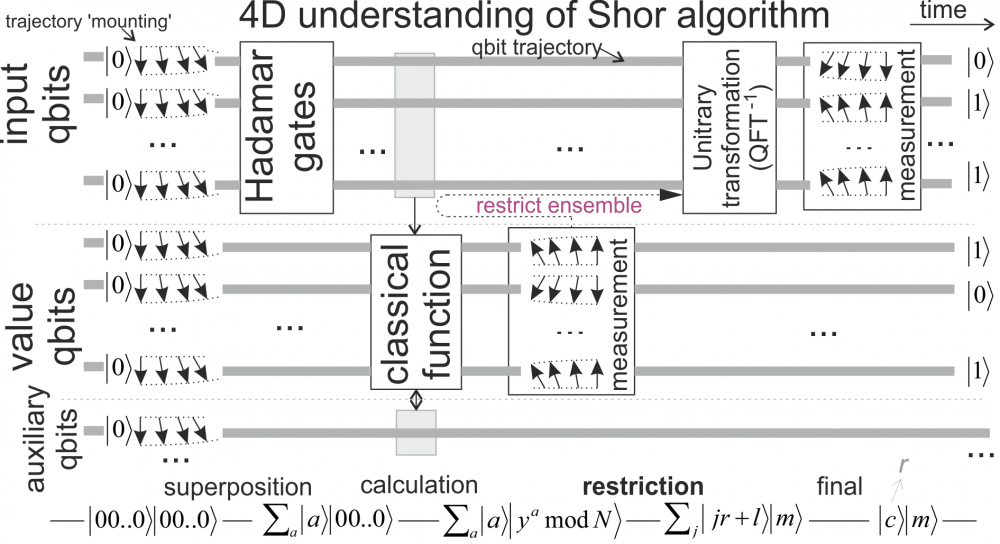

After 7 years I have finally written it down: https://arxiv.org/pdf/0910.2724v2.pdf It also covers other consequences of living in spacetime, like violation of Bell inequalities.

Schematic diagram of the quantum subroutine of Shor's algorithm for finding prime factors of a natural number N. For a random natural number y<N, it searches for the period r of f(a)=y^a mod N; this period can be concluded from a measurement of the value c after the Quantum Fourier Transform (QFT), and with some large probability (O(1)) it allows finding a nontrivial factor of N. The Hadamard gates produce a state being a superposition of all possible values of a. Then the classical function f(a) is applied, giving a superposition of |a> |f(a)>. Due to the necessary reversibility of the applied operations, this calculation of f(a) requires the use of auxiliary qubits, initially prepared as |0>. Now measuring the value of f(a) returns some random value m, and restricts the original superposition to only those a fulfilling f(a)=m. Mathematics ensures that the {a:f(a)=m} set has to be periodic here (y^r \equiv 1 mod N); this period r is concluded from the value of the Fourier Transform (QFT).

Seeing the above process as a situation in 4D spacetime, qubits become trajectories, state preparation fixes their values (chosen) in the past direction, and measurement fixes their values (random) in the future direction. The superiority of this quantum subroutine comes from future-past propagation of information (tension) by restricting the original ensemble in the first measurement.
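The classical part around this subroutine is easy to sketch. In the following illustration the period is found by brute force, which stands in for the quantum step and is exponentially slow in general; the function names are made up, and the post-processing is the standard textbook one (gcd of y^(r/2) - 1 with N).

```python
import math

def find_period(y, n):
    """Smallest r > 0 with y**r = 1 (mod n). Assumes gcd(y, n) == 1.
    Brute force here; this is the step the QFT is meant to speed up."""
    value, r = y % n, 1
    while value != 1:
        value = (value * y) % n
        r += 1
    return r

def factor_from_period(y, n):
    """Try to split n using the period of f(a) = y**a mod n.
    Returns a pair of nontrivial factors, or None if this y is unlucky."""
    shared = math.gcd(y, n)
    if shared != 1:
        return shared, n // shared      # y already shares a factor with n
    r = find_period(y, n)
    if r % 2 == 1:
        return None                     # odd period: pick another y
    half = pow(y, r // 2, n)            # y^(r/2) mod n, never 1 for minimal r
    if half == n - 1:
        return None                     # y^(r/2) = -1 (mod n): pick another y
    p = math.gcd(half - 1, n)
    return p, n // p

print(find_period(7, 15))          # f(a) = 7^a mod 15 cycles through 7, 4, 13, 1
print(factor_from_period(7, 15))
```

For N = 15 and y = 7 the values of f(a) are 7, 4, 13, 1, so r = 4 and gcd(7^2 - 1, 15) gives the factor 3.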

Immunity by incompatibility – hope in chiral life

Duda Jarek replied to Duda Jarek's topic in Biology

A decade has passed, moving this topic from SF to synthetic life: https://en.wikipedia.org/wiki/Chiral_life_concept One of the most difficult tasks seemed to be synthesizing working proteins ... and last year Chinese researchers synthesized a mirror polymerase: Nature News 2016: "Mirror-image enzyme copies looking-glass DNA. Synthetic polymerase is a small step along the way to mirrored life forms", http://www.nature.com/news/mirror-image-enzyme-copies-looking-glass-dna-1.19918 There are also lots of direct economic motivations to continue toward synthesizing mirror bacteria, like mass production of mirror proteins (e.g. aptamers) or L-glucose (a perfect sweetener). So in another decade we might find out that a colony of mirror bacteria is already living e.g. in some lab in China ... taking us closer to a possibility nicely expressed in the title of a WIRED 2010 article: "Mirror-image cells could transform science - or kill us all" ( https://www.wired.com/2010/11/ff_mirrorlife/ ) - estimating that it would take mirror cyanobacteria (photosynthesizing) a few centuries to dominate our planet ... eradicating our life ...

There is a recent article in a good journal (Optica, July 2015) showing violation of Bell inequalities for classical fields: "Shifting the quantum-classical boundary: theory and experiment for statistically classical optical fields" https://www.osapublishing.org/optica/abstract.cfm?URI=optica-2-7-611 Hence, while Bell inequalities are fulfilled in classical mechanics, they are violated not only in QM, but also in classical field theories - so asking for field configurations of particles (soliton particle models) makes sense. The violation is obtained by superposition/entanglement of the electric field in two directions ... analogously, we can see a crystal through classical oscillations, or equivalently through a superposition of their normal modes: phonons, described by quantum mechanics, violating Bell inequalities.

-

Regarding Bell - we know that nature violates his inequalities, so we need to find an erroneous assumption in his way of thinking. Let's look at a simple proof from http://www.johnboccio.com/research/quantum/notes/paper.pdf

So let us assume that there are 3 binary hidden variables describing our system: A, B, C. We can assume that the total probability of being in one of these 8 possibilities is 1:

Pr(000)+Pr(001)+Pr(010)+Pr(011)+Pr(100)+Pr(101)+Pr(110)+Pr(111)=1

Denote by Pe the probability that the given two variables have equal values:

Pe(A,B) = Pr(000) + Pr(001) + Pr(110) + Pr(111)
Pe(A,C) = Pr(000) + Pr(010) + Pr(101) + Pr(111)
Pe(B,C) = Pr(000) + Pr(100) + Pr(011) + Pr(111)

Summing these 3 we get the Bell inequality:

Pe(A,B) + Pe(A,C) + Pe(B,C) = 1 + 2 Pr(000) + 2 Pr(111) >= 1

Now denote by ABC the outcomes of measurement in 3 directions (differing by 120 deg) - taking two identical (entangled) particles and asking about the frequencies of their ABC outcomes, we can get

Pe(A,B) + Pe(A,C) + Pe(B,C) < 1

which agrees with experiment ... so something is wrong with the above line of thinking ...

The problem is that we cannot think of particles as having fixed ABC binary values describing the direction of spin. We can ask about these values independently by using measurements - which are extremely complex phenomena, like Stern-Gerlach. Such a measurement doesn't just return a fixed internal variable. Instead, in every measurement this variable is chosen at random - and this process changes the state of the system. Here is a schematic picture of Bell's misconception:

The squares leading to violation of Bell inequalities come e.g. from the completely classical Malus law: the polarizer reduces the electric field like cos(theta), and light intensity is E^2: cos^2(theta). http://www.physics.utoronto.ca/~phy225h/experiments/polarization-of-light/polar.pdf

To summarize, as I have sketched a proof, the following statement is true:
(*): "Assuming the system has some 3 fixed binary descriptors (ABC), then the frequencies of their occurrences fulfill the Pe(A,B) + Pe(A,C) + Pe(B,C) >= 1 (Bell) inequality"

Bell's misconception was applying it to the situation with spins: assuming that the internal state uniquely defines a few applied binary values. In contrast, this is a probabilistic translation (measurement) and it changes the system. Besides the probabilistic nature, when asking about all 3, their values would depend on the order of questioning - ABC are definitely not fixed in the initial system, which is required to apply (*).
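For the three directions differing by 120 degrees, the quantum value of the sum can be written down in a few lines. This sketch assumes the standard spin-1/2 rule that two identically prepared (entangled) particles measured along axes separated by an angle theta give the same outcome with probability cos^2(theta/2); that rule is taken from textbook QM, not derived here, and the names are illustrative.

```python
import math

def prob_equal(angle_deg):
    """Assumed spin-1/2 rule: P(same outcome) = cos^2(angle / 2) for
    measurement axes separated by angle_deg."""
    return math.cos(math.radians(angle_deg) / 2) ** 2

# Three measurement directions in a plane, 120 degrees apart.
directions = {"A": 0.0, "B": 120.0, "C": 240.0}
pairs = [("A", "B"), ("A", "C"), ("B", "C")]

quantum_sum = sum(prob_equal(directions[x] - directions[y]) for x, y in pairs)
print(quantum_sum)   # each pair contributes cos^2(60 deg) = 1/4
```

Each pair agrees with probability 1/4, so the sum is 3/4, below the bound of 1 that holds for any fixed ABC assignment. The cos^2 has the same origin as in the Malus law mentioned above.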

-

I don't know. The discussion about Bell inequalities for solitons has evolved a bit here: http://www.sciforums.com/threads/do-nonlocal-entities-fulfill-assumptions-of-bell-theorem.153000/


While the dynamics of (classical) field theories is defined by (local) PDEs like the wave equation (finite propagation speed), some fields allow for stable localized configurations: solitons. For example the simplest one: the sine-Gordon model, which can be realized by pendula on a rod which are connected by springs. While gravity prefers that the pendula are "down", increasing the angle by 2pi also means "down" - if these two different stable configurations (minima of the potential) meet each other, a soliton (called a kink) corresponding to a 2pi rotation is required, like here (the right one is moving - Lorentz contracted):

Kinks are narrow, but there are also solitons filling the entire universe, like in a 2D vector field with the (|v|^2-1)^2 potential - a hedgehog configuration is a soliton: all vectors point outward - these solitons are highly nonlocal entities. A similar example of nonlocal entities in a "local" field theory are Couder's walking droplets: a corpuscle coupled with a (nonlocal) wave - giving quantum-like effects: interference, tunneling, orbit quantization (thread http://www.scienceforums.net/topic/65504-how-quantum-is-wave-particle-duality-of-couders-walking-droplets/ ). The field depends on the entire history and affects the behavior of the soliton or droplet. For example, the Noether theorem says that the entire field guards (among others) angular momentum conservation - in the EPR experiment the momentum conservation is kind of encoded in the entire field - in a very nonlocal way.

So can we see real particles this way? The only counter-argument I have heard is the Bell theorem (?) But while solitons happen in local field theories (information propagates with finite speed), these models of particles: solitons/droplets are extremely nonlocal entities. In contrast, the Bell theorem assumes local entities - so does it apply to solitons?
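The kink and its Lorentz contraction can be made explicit with the standard closed-form solution of the dimensionless sine-Gordon equation phi_tt - phi_xx + sin(phi) = 0 (units with propagation speed c = 1, which is an assumption of this sketch; the function names are illustrative).

```python
import math

def kink(x, t=0.0, v=0.0):
    """Moving sine-Gordon kink: phi = 4*atan(exp(gamma*(x - v*t))), |v| < 1."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return 4.0 * math.atan(math.exp(gamma * (x - v * t)))

def kink_width(v):
    """Distance over which phi rises from pi/2 to 3*pi/2 (the middle half of
    the 2*pi rotation), obtained by inverting the formula above at t = 0."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    x_low = math.log(math.tan(math.pi / 8)) / gamma
    x_high = math.log(math.tan(3 * math.pi / 8)) / gamma
    return x_high - x_low

print(kink(-30.0), kink(0.0), kink(30.0))     # ~0, pi, ~2*pi: both ends are "down"
print(kink_width(0.6) / kink_width(0.0))      # contraction factor sqrt(1 - v^2)
```

The field interpolates between the two "down" configurations 0 and 2pi, and a kink moving at v = 0.6 is narrower by the factor sqrt(1 - 0.36) = 0.8.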

-

Molecular shape descriptors for virtual screening of ligands?

Duda Jarek posted a topic in Chemistry

I was thinking about designing molecular descriptors for the virtual screening purpose: such that two molecules have similar shape if and only if their descriptors are similar. They could be used separately, or to complement e.g. some pharmacophore descriptors. They should be optimized for ligands - which are usually elongated and flat. Hence I thought to use the following approach:
- normalize rotation (using principal component analysis),
- describe bending - usually one coefficient is sufficient,
- describe evolution of the cross-section, for example as an evolving ellipse.

Finally, the shape below is described by 8 real coefficients: length (1), bending (1) and 6 for the evolution of the ellipse in cross-section. It expresses the bending and that this molecule is approximately circular on the left, and flat on the right:

preprint: http://arxiv.org/pdf/1509.09211
slides: https://dl.dropboxusercontent.com/u/.../shape_sem.pdf
Mathematica implementation: https://dl.dropboxusercontent.com/u/12405967/shape.nb

Have you met something like that? Is it a reasonable approach? I am comparing it with USR (ultrafast shape recognition) and (rotationally invariant) spherical harmonics - have you seen other approaches of this type?
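The first step of the list above (rotation normalization by PCA) and the length coefficient can be sketched in plain Python. This is only an illustration and not the linked Mathematica implementation: it treats atoms as unweighted points, finds the main axis by power iteration on the 3x3 covariance matrix, and takes the length as the extent of the projections onto that axis. All names are hypothetical.

```python
import math

def principal_axis(points, iterations=200):
    """Centroid and dominant PCA direction of a list of (x, y, z) points,
    found by power iteration on the covariance matrix."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    centered = [[p[i] - centroid[i] for i in range(3)] for p in points]
    cov = [[sum(q[i] * q[j] for q in centered) / n for j in range(3)]
           for i in range(3)]
    v = [1.0, 0.7, 0.3]                  # arbitrary starting direction
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:                  # degenerate input: all points coincide
            break
        v = [x / norm for x in w]
    return centroid, v

def length_along_main_axis(points):
    """Extent of the point cloud along its principal axis."""
    centroid, axis = principal_axis(points)
    projections = [sum((p[i] - centroid[i]) * axis[i] for i in range(3))
                   for p in points]
    return max(projections) - min(projections)

# Toy "molecule": four points on a straight line along (1, 1, 0).
toy = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0), (3.0, 3.0, 0.0)]
print(length_along_main_axis(toy))       # distance between the two end points
```

Bending and the cross-section ellipse would then be described in the coordinate frame given by this axis and the remaining two principal directions.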

Radiogenic heat is significant in Earth's internal heat budget ( http://en.wikipedia.org/wiki/Earth%27s_internal_heat_budget ) and its effect can be observed e.g. as high He3/He4 ratio from volcanoes and geysers: http://www.nature.com/nature/journal/v506/n7488/full/nature12992.html http://www.wired.com/2014/04/what-helium-can-tell-us-about-volcanoes/

-

From the NASA article: "Until now, all ULXs were thought to be black holes. The new data from NuSTAR show at least one ULX, about 12 million light-years away in the galaxy Messier 82 (M82), is actually a pulsar. (...) Black holes do not pulse, but pulsars do." If by "a gravitational sink" you mean massive and small - sure. However, as I understand the NASA article, it is considered to be a star: a macroscopic object made of matter, instead of a black hole: all matter being gathered in the central singularity. The assumption is that there is a rotating object made of matter, producing much more energy than we could explain (assuming baryon number conservation) - what is the source of this energy?

-

Indeed, the main question here is whether the baryon number is ultimately conserved. Violation of this number is required by:
- hypothetical baryogenesis producing more matter than anti-matter,
- many particle models, like supersymmetric ones,
- massless Hawking radiation - black holes would have to evaporate with baryons to conserve the baryon number.

On the other hand, there is a fundamental reason to conserve e.g. electric charge: the Gauss law says that the electric field of the whole Universe guards charge conservation. In other words, adding a single charge would mean changing the electric field of the whole Universe proportionally to 1/r^2. We don't have anything like that for the baryon number (?) - a fundamental reason for conserving this number.

Indeed, the search for such violation (by proton decay) has failed, but this search was performed in room temperature water tanks. One of the questions is whether the required conditions can be reached in such a setting: whether the energy required to cross the energy barrier holding the baryon together can be spontaneously generated in room-temperature water. In other words: whether the Boltzmann distribution of the size of random fluctuations still behaves well for such huge energies.

If the baryon number is not ultimately conserved, violating it would rather require extreme conditions, like during the Big Bang (baryogenesis) ... or in the center of a neutron star, which will exceed all finite limits before getting to the infinite density required to start forming the black hole horizon and the central singularity. Such a "baryon burning phase" would result in enormous energy (nearly complete matter -> energy conversion) - and we observe this kind of sources, like gamma ray bursts, for which: "The means by which gamma-ray bursts convert energy into radiation remains poorly understood, and as of 2010 there was still no generally accepted model for how this process occurs (...) Particularly challenging is the need to explain the very high efficiencies that are inferred from some explosions: some gamma-ray bursts may convert as much as half (or more) of the explosion energy into gamma-rays." ( http://en.wikipedia.org/wiki/Gamma-ray_burst )

So we have something like a supernova explosion, but instead of exploding due to neutrinos (from e+p -> n), this time using gammas - can you think of mechanisms other than baryon decay for releasing such huge energy?

NASA news from 2 days ago: http://www.nasa.gov/press/2014/october/nasa-s-nustar-telescope-discovers-shockingly-bright-dead-star/ about a 1-2 solar mass star, with more than 10 million times larger power than the Sun ... which is no longer considered to be a black hole! Where does this enormous energy come from? While fusion or p+e->n converts less than 0.01 of matter into energy, baryon decay converts more than 0.99 - are there some intermediate possibilities?
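To put numbers on the "less than 0.01" for fusion and on the quoted power, here is a small arithmetic sketch. It uses standard values that are not in the post (about 26.73 MeV released per helium-4 nucleus formed from four protons, and the nominal solar luminosity 3.828e26 W), and the complete-conversion case is purely the hypothetical discussed above.

```python
PROTON_MEV = 938.272                # proton rest energy in MeV
HELIUM_FUSION_RELEASE_MEV = 26.73   # 4p -> He-4, total release incl. neutrinos
SOLAR_LUMINOSITY_W = 3.828e26       # nominal solar luminosity
SPEED_OF_LIGHT = 299792458.0        # m/s

def converted_fraction(released_mev, initial_rest_mev):
    """Fraction of the initial rest energy that is released."""
    return released_mev / initial_rest_mev

def mass_rate_kg_per_s(power_w, efficiency):
    """Fuel mass consumed per second to sustain power_w at the given
    matter -> energy conversion efficiency."""
    return power_w / (efficiency * SPEED_OF_LIGHT ** 2)

fusion_fraction = converted_fraction(HELIUM_FUSION_RELEASE_MEV, 4 * PROTON_MEV)
power = 1e7 * SOLAR_LUMINOSITY_W    # "10 million times" the Sun, as quoted

rate_full = mass_rate_kg_per_s(power, 1.0)               # hypothetical full conversion
rate_fusion = mass_rate_kg_per_s(power, fusion_fraction)

print(f"fusion converts ~{fusion_fraction:.4f} of rest mass")
print(f"fuel needed: {rate_full:.2e} kg/s (full) vs {rate_fusion:.2e} kg/s (fusion)")
```

Hydrogen fusion comes out at roughly 0.7% of the rest mass, so sustaining the same power by fusion would need about 140 times more fuel per second than complete conversion.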

-

Have you forgotten to add "in contrast to forming infinite density singularity in large matter concentrations" ?

-

Not me, Hawking radiation means: gather lots of baryons into a black hole, wait until it evaporates (massless Hawking radiation) - and there are that many fewer baryons in the universe. Also, if we believe in baryogenesis, which creates more matter than anti-matter ... it also violates baryon number conservation.

-

After Stephen Hawking's "There are no black holes": http://www.nature.com/news/stephen-hawking-there-are-no-black-holes-1.14583 now from http://phys.org/news/2014-09-black-holes.html : "But now Mersini-Houghton describes an entirely new scenario. She and Hawking both agree that as a star collapses under its own gravity, it produces Hawking radiation. However, in her new work, Mersini-Houghton shows that by giving off this radiation, the star also sheds mass. So much so that as it shrinks it no longer has the density to become a black hole." Which is nearly exactly what I was saying: instead of growing a singularity in the center of a neutron star, it should rather immediately go through some matter->energy conversion (like evaporation through Hawking radiation, or in other words: some proton decay) - releasing a huge amount of energy (finally released as gamma ray bursts), and preventing the collapse.

-

The determinant is just a sum over all permutations of products - I don't see a problem here? Cramer's formula allows one to write the inverse matrix as a rational expression of determinants - which seems sufficient ... Anyway, an exponential number of terms still seems to be required to find the determinant ... But maybe there is a better way to just find the n-th power of a (Grassmann) matrix ... ?
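The "sum over all permutations of products" can be written out directly. This sketch is for ordinary commuting numbers only; for Grassmann (anticommuting) entries the order of factors in each product would matter, which this code does not model. The function names are illustrative.

```python
import itertools
import math

def permutation_sign(perm):
    """+1 for an even permutation, -1 for an odd one (by counting inversions)."""
    inversions = sum(1
                     for i in range(len(perm))
                     for j in range(i + 1, len(perm))
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def leibniz_determinant(matrix):
    """Determinant as a signed sum of n! products, one per permutation."""
    n = len(matrix)
    total = 0
    for perm in itertools.permutations(range(n)):
        product = permutation_sign(perm)
        for row, col in enumerate(perm):
            product *= matrix[row][col]
        total += product
    return total

example = [[2, 0, 1],
           [1, 3, 2],
           [1, 1, 4]]
print(leibniz_determinant(example), "from", math.factorial(len(example)), "terms")
```

The number of terms grows as n!, which is the exponential cost mentioned above; for commuting entries Gaussian elimination avoids it, but that shortcut relies on division and commutativity.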