
Everything posted by Enthalpy

-

Yes, please describe the situation. If leakage results from micro-cracks, I tend to answer "replace or repair the vessel rather than model the problem". But sometimes (a nuclear reactor vessel, for instance) that's impossible. At such micro-cracks, the first factor I think of is the fluid's viscosity, not its density, though Studiot made a good point about capillary action and surface tension. If the fluid diffuses through the metal, the throughput is essentially an experimental value. Each fluid through each metal has a measured diffusion coefficient. The volume throughput is proportional to this coefficient and can be scaled accordingly between different fluids if everything else is the same. The difference of partial pressures usually acts proportionally, within reasonable limits and once an equilibrium exists. The temperature tends to act through some activation energy.
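A minimal sketch of the scaling described above. It assumes the same vessel and crack geometry, a throughput proportional to the diffusion coefficient and to the partial-pressure difference, and an Arrhenius form $D = D_0\exp(-E_a/RT)$ as one common reading of "activation energy". The names `arrhenius` and `scaled_throughput` are hypothetical, and $D_0$ and $E_a$ must come from measurements. For hydrogen dissolving in metals the pressure dependence often follows Sieverts' law (square root of pressure), so the linear pressure term is itself an assumption.

```python
import math

R = 8.314462618  # gas constant, J/(mol K)


def arrhenius(d0, activation_energy, temperature):
    """Diffusion coefficient D = D0 * exp(-Ea / (R T)); D0 and Ea are measured per fluid/metal pair."""
    return d0 * math.exp(-activation_energy / (R * temperature))


def scaled_throughput(q_ref, d_ref, d_new, dp_ref, dp_new):
    """Rescale a measured throughput to another fluid or condition, geometry unchanged:
    proportional to the diffusion coefficient and to the partial-pressure difference (assumed linear)."""
    return q_ref * (d_new / d_ref) * (dp_new / dp_ref)


# Hypothetical numbers, only to show the call pattern: same fluid, 300 K -> 350 K.
d_300 = arrhenius(d0=1e-6, activation_energy=40e3, temperature=300.0)
d_350 = arrhenius(d0=1e-6, activation_energy=40e3, temperature=350.0)
print(scaled_throughput(q_ref=1.0, d_ref=d_300, d_new=d_350, dp_ref=1.0, dp_new=1.0))
```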

-

About computer randomness mentioned in the first post: software random functions were historically (and mostly still are) pseudo-random. They are fully deterministic, in that the same seed produces the same output sequence. Their "randomness" only means some statistical properties and, for cryptography applications, which are much more demanding, some difficulty in deducing future or past values from a known subset of the output sequence. Finding a seed that is varied enough and hard enough to guess is very difficult to program. This is a weak point in many crypto applications, for instance if the clock serves this purpose, and an attack angle for cryptanalysts. Recent CPUs offer a "true random" generator. I haven't seen an accurate description of these generators. They could be built from thermal noise, shot noise... Physical randomness looks attractive, but tends to be very weak. First, physical biases like 0.1% are totally unacceptable in cryptography. Software post-processing eliminates the biases that the programmer has thought of (probability of 1 vs 0, correlation over successive bits...), but are there others? Then, is the physical state of the generator observable by other means, or can it be influenced? On a chip where the supply voltages vary abruptly over time, and the temperature too, isolating a generator must be difficult.
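The determinism described above, and one classic software post-processing step against bias, fit in a few lines. This is a sketch, not a cryptographic design: Python's `random` module is a Mersenne Twister, which is explicitly not suitable for cryptography (the standard library offers `secrets` for that), and the von Neumann extractor used here is one well-known debiasing technique swapped in for illustration. It removes a constant 0/1 bias only if successive bits are independent, which is exactly the kind of hidden assumption questioned above.

```python
import random


def seeded_sequence(seed, n):
    """n 32-bit outputs of Python's Mersenne Twister PRNG started from `seed`."""
    rng = random.Random(seed)
    return [rng.getrandbits(32) for _ in range(n)]


def von_neumann_debias(bits):
    """Von Neumann extractor: take non-overlapping pairs, output 0 for (0, 1) and 1 for (1, 0),
    discard (0, 0) and (1, 1). Removes a constant bias only if the bits are independent."""
    return [a for a, b in zip(bits[0::2], bits[1::2]) if a != b]


# Same seed, same "random" sequence: fully deterministic.
print(seeded_sequence(2024, 3) == seeded_sequence(2024, 3))  # True

# Simulated physical source with a strong bias towards 1 (80 %).
source = random.Random(123)
raw = [1 if source.random() < 0.8 else 0 for _ in range(200_000)]
clean = von_neumann_debias(raw)
print(sum(raw) / len(raw), sum(clean) / len(clean), len(clean))
```

With an 80 % bias only about 2 × 0.8 × 0.2 = 32 % of the pairs survive, and any correlation between successive bits would pass straight through the extractor.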

-

Particle in a box; 'localized' integrals

Enthalpy replied to Danijel Gorupec's topic in Quantum Theory

Hi Danijel and the others, you wrote the dependence of Psi on the position but not the dependence on time. When the wavefunction is stationary, that is if $|\Psi|^2$ is independent of time, it still has a multiplicative term $\exp(j2\pi Et/h)$. On the probability density of a stationary wave the term disappears, but on the momentum it doesn't. With this time term, $\langle p\rangle$ is not just a constant number, imaginary or not. Its phase rotates over time with frequency E/h. Whether $\langle p\rangle$ is imaginary at t=0 depends on your arbitrary choice of time origin. Shift the time origin by a quarter period, h/(4E), and an imaginary value at t=0 becomes real. So the imaginary value you got is no reason to discard the result. More generally, complex numbers are excellent to describe sine amplitudes: their two components just describe the in-phase and quadrature components of a sine, both being very tangible when the wave is.

==========

By the way, if you write this time-dependence term in a p orbital, you can compute how quickly a plane of constant phase rotates around the nucleus, and relate this simply to the orbital angular momentum of the electron. Then, the orbital angular momentum is nicely concrete and similar to the macroscopic understanding, except that the electron shows no bulge moving around the nucleus. Through the phase of the complex wavefunction, QM explains why the electron has an orbital angular momentum but the probability distribution has no movement that would radiate light. Some attributes of a movement, but not all. You can also compute the movement of equiphase planes for the plane wavefunction of a massive particle, and compare with the particle's momentum and speed. This will tell you why the founders of QM defined the momentum operator that way. And do the same with the kinetic energy.

==========

Computing the kinetic energy over a part of the spatial extent does have uses and is legitimate. For instance, to compute how the relativistic mass changes the electron's energy levels in a hydrogen or hydrogen-like (one electron, several protons) atom, the mass correction must be done locally, not once over the whole extension, to get the experimental result. Or if another particle interacts with the electron, and that other particle is concentrated over a portion of the electron's extension, it will feel the attributes the electron has in that region.

-
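As an illustration of the localized integrals discussed in the particle-in-a-box reply, the sketch below integrates the kinetic-energy density $\psi^*(-\hbar^2/2m)\,\psi''$ over part of the box for the real spatial part of an eigenfunction, in units where $\hbar = m = L = 1$. Choosing this density is an assumption: $\hbar^2|\psi'|^2/2m$ is another common local definition, and the two differ point by point while agreeing over the whole box. For the ground state, half the box holds half of $E_1 = \pi^2/2$ by symmetry with either definition. The names `psi` and `local_kinetic` are hypothetical.

```python
import math


def psi(x, n=1, length=1.0):
    """Spatial part of the particle-in-a-box eigenfunction n (real-valued)."""
    return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)


def local_kinetic(a, b, n=1, length=1.0, hbar=1.0, mass=1.0, steps=20000):
    """Integrate psi * (-hbar^2 / 2m) * psi'' from a to b.

    Midpoint rule for the integral; central finite difference for psi''.
    """
    h = 1e-4  # finite-difference step for the second derivative
    dx = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * dx
        p0 = psi(x, n, length)
        d2 = (psi(x + h, n, length) - 2.0 * p0 + psi(x - h, n, length)) / h**2
        total += p0 * (-hbar**2 / (2.0 * mass)) * d2 * dx
    return total


print(local_kinetic(0.0, 1.0))   # whole box: close to pi**2 / 2
print(local_kinetic(0.0, 0.5))   # left half: close to pi**2 / 4
print(local_kinetic(0.0, 0.25))  # quarter next to the wall: less than a quarter of E1
```

-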

Do we add fields or intensities in the double-slit experiment?

Enthalpy replied to aknight's topic in Quantum Theory

Let me then re-explain the detection correlation with two photons whose polarisations are entangled. But that's strictly nothing more than standard EPR, and I already explained it in another thread, apparently in vain. Imagine that the photon source gives parallel polarisations to the photons. Neglect the uncertainty on the entanglement, though Heisenberg's uncertainty principle applies to entanglement too. Take two linear detectors of varied orientation. If both are vertical, their detections of the photons are correlated. Both horizontal, too. One vertical and the other horizontal, no correlation. That would still be compatible with the photons' polarisation being decided at the emission. But you can repeat the experiment by replacing only the detectors with ones sensitive to circular polarisation. Both right or both left, correlation. One right and one left, anticorrelation. This is not compatible with a polarisation decided at the emission. A linear polarisation decided at the emission gives a right or left detector some detection probability, and no correlation between both detectors. Likewise, a circular polarisation decided at the emission gives a vertical or horizontal detector some detection probability, and no correlation between both detectors. The experimental results show that the polarisation of the photons is decided at the detectors too. The polarisation of the photon is not a property fully decided at the emission. The detector influences it. And the wavefunction must be written with the polarisation as an argument, since the polarisation isn't already decided.

-
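The entangled-polarisation argument in the reply above uses circular analyzers. The sketch below makes the same point with linear analyzers at arbitrary angles, a textbook variant swapped in here for illustration. It compares the quantum prediction for the state $(|HH\rangle + |VV\rangle)/\sqrt{2}$ with a model where each pair leaves the source with a shared, definite linear polarisation at a random angle and each analyzer applies Malus's law. The function names are hypothetical.

```python
import math


def quantum_both_pass(a, b):
    """P(both photons pass) for (|HH> + |VV>)/sqrt(2) and linear analyzers at angles a, b (rad)."""
    return 0.5 * math.cos(a - b) ** 2


def emission_decided_both_pass(a, b, samples=360):
    """Same probability if each pair shares a definite linear polarisation theta, uniformly
    random and decided at emission; each analyzer then passes its photon with Malus's law."""
    total = 0.0
    for i in range(samples):
        theta = math.pi * i / samples  # polarisation angles are defined modulo pi
        total += math.cos(a - theta) ** 2 * math.cos(b - theta) ** 2
    return total / samples


for b_deg in (0, 30, 60, 90):
    b = math.radians(b_deg)
    print(b_deg, round(quantum_both_pass(0.0, b), 4), round(emission_decided_both_pass(0.0, b), 4))
```

Each photon alone passes with probability 1/2 in both models. With parallel analyzers, the quantum conditional probability is (1/2)/(1/2) = 1 whatever the common angle, while the emission-decided model gives (3/8)/(1/2) = 3/4; its curve is $1/8 + \cos^2(a-b)/4$.

-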

In some cases, the wavefunction boils down to a probability density. Generally, it's more than that. As the wavefunction determines interference patterns or future distributions, the particle exists at all the possible positions, not only at one position where we have some chance to find it. The wavefunction also has a phase, which doesn't influence the local probability density, but is all-important to determine the momentum or the angular momentum among others. The wavefunction can also be written as a function of variables other than the position, for instance of the momentum. True, that was already answered. What the pentacene pictures bring new is that the wavefunction can be observed and then released intact, in some cases. Sure. Observations are interactions, often with some sort of amplification, that end with an effect that we perceive with our senses. And I agree too that superposition may go on at the detecting particle(s), measuring device, observer. This was a debated question, and maybe it still is. I haven't grasped from your text where past/present/future makes a difference. Yes, all the possible paths for the photon contribute to the electron's wavefunction at the screen (or wherever) to give for instance a probability density of detection there. One interpretation common presently is indeed decoherence. The many stories exist simultaneously, there is no wavefunction collapse at all, the measurement instrument exists in all the states that result from the many photon histories, and the observer too. In a given state, the observer is not aware of the other states because decoherence lets the other states (which have become an even larger number through the many microscopic interactions) add up to nearly zero. A very nice advantage of this interpretation is that it supposes nearly nothing. It superposes states as we observe they do in other circumstances. Maybe this interpretation has drawbacks.
So you propose instead some limitation on the number of possibilities, histories, scenarios that coexist and may sometimes (generally at microscopic scale) produce stable and observable interferences, if I understand you properly. That's an interesting option, provided it answers some difficulties of the competing interpretations. I don't grasp why you got a negative rating, and I gave you a positive one. But is there a rationale, observations, a re-interpretation of experiments... something that suggests this limitation or selection among the stories? Adding an arbitrary hypothesis isn't generally desired; it gets accepted (as the wavefunction collapse was or is) if it fits the observations or simplifies the understanding. Cumulating and propagating all the possible states of a particle isn't a good interpretation. I botched that in another thread and have changed my mind meanwhile. Apparently this description suffices when the possible states are exclusive: spin up or down, photon detection at pixel 27 or 38... But for instance a photon's polarisation is not exclusive. Vertical, horizontal, oblique, right, left, elliptic... if one wants the photon to exist in all these possible states, one gets more than 1 for the total probability. Another problem: with two entangled photons, this doesn't explain the observed correlation of the photons' polarisations. So some sort of "decision" must happen at the detection, be it a collapse of the wavefunction or something else. I don't grasp when such a collapse or equivalent should happen or not, so I welcome alternative theories too.

-

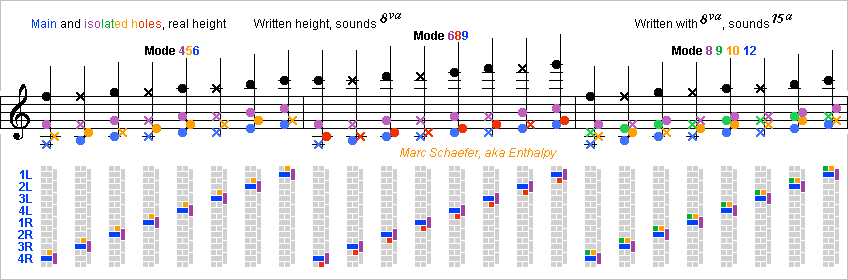

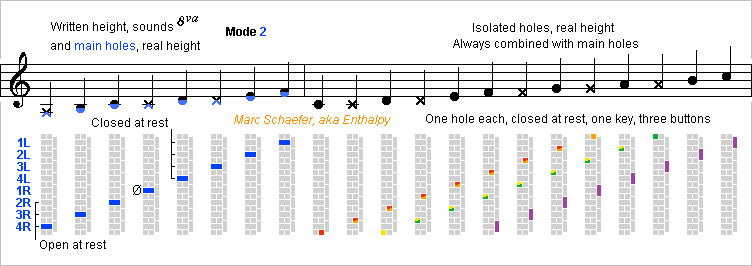

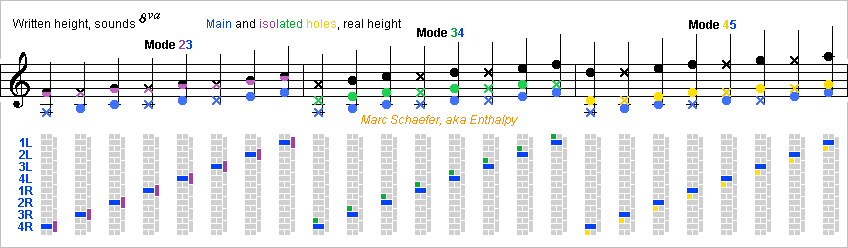

This soprito system F makes near-automatic cross-fingerings. The very high instrument resonates a soprano-length air column on modes 2, 3, 4, 5, 6, 9 and 12. One upper finger at a time presses the keys, like playing one note on a piano. Trills with the pinkie (needed only at the lowest note) and between the hands need more training, but fingerings are equally easy in all tonalities, the highest notes too. In each mode, the eight upper fingers produce one semitone each by pressing one main hole button. Some trills jump across the modes. Around each main hole button, more buttons open additional isolated holes to impose and ease the higher modes. Adjacent semitones share most of these buttons. With a single finger, the musician presses a main hole button and optional isolated hole button(s) to create the pattern of open and closed holes. The three lowest main hole covers are open at rest, as seen on the lower figure, and connected so 3R closes two covers and 4R three. The dummy main button for 1R moves no cover. The four highest main hole covers are closed at rest and connected so 3L opens two covers, 2L three and 1L four. Consider the mechanism I proposed on Jul 02, 2017 (scienceforums). The spring force must be minimized. The covers are small. Five buttons surround each main hole button to open isolated hole covers some distance higher than the main hole: 2 semitones = major second, defining the mode ratio 8/9; 3 = minor third, 5/6; 4 = major third, 4/5; 5 = fourth, 3/4; 7 = fifth, 2/3. Since adjacent main holes and buttons make notes a semitone apart, the isolated hole 3 semitones higher than a main hole is also 2 semitones higher than the next higher main hole, so the same isolated hole and corresponding button serve for both combinations: ratio 5/6 at one main hole button and 8/9 at the next one. Similarly, an isolated hole 5 semitones higher and its button for ratio 3/4 also serve 4 semitones higher than the next higher main hole, for ratio 4/5.
The buttons for the isolated holes 7 semitones higher serve for only one main hole button each, but they can be combined with other buttons. The lucky combination mimics cross-fingering for modes 2-3, 3-4, 4-5, 4-5-6, 6-8-9 and 8-9-10-12. The mode 6-8-9 already reaches a transposed G higher than a piccolo oboe, a piccolo clarinet or Eppelsheim's soprillo. Can the available mode 8-9-10-12, as high as the piccolo flute, be played? The specialized narrow bore and the many open isolated holes help. The heights in this text and drawings hold for an instrument transposing in C one octave higher, while a tárogató or soprillo would be in B flat. A single set of 13 isolated holes serves for all mode ratios. Each hole cover has one key with up to 3 buttons, all moving together. At different locations, the 3 buttons make the varied intervals with the nearby main hole buttons: 2 and 3 semitones, respectively 4 and 5, respectively 7. The isolated holes are better kept distinct from the main holes. They spoil the unwanted modes better if they're smaller, and their positions adjust the intonation independently. The set of isolated holes overlaps the main holes and can reside on an offset line. A drawing may come some day. Single reeds need register keys; these help double reeds too. Each key could select one mode, with tolerance for trills. Covering only a sixth, the holes can be efficient, and even be multiple at different near-nodes. I'd double the buttons at the left and right thumbs, 6+6 pieces if mode 2 needs no hole. The keyworks are simpler than an oboe's or a saxophone's.

==========

For a softer sound, the main holes shouldn't be too wide, especially with a double reed. This offsets their position, and also the distance to the isolated holes. The isolated holes too can have the chambers against the strident frequencies I explained there (scienceforums).

==========

The instrument has 8 pedal notes an octave below the mode 2.
4 extra holes and keys, for instance at the proximal phalanges of the left fingers, or at the thumbs, would reach them continuously. Though, I don't expect a 4-octave range from a soprito, and would not let a wider bore spoil the high notes for low notes that a soprano makes better. Or should the instrument be half as long, like the soprillo, use its pedal notes and the 4 extra holes, and stop at less high modes? The mode 4-5, with fewer buttons, would already give nearly the soprillo's range. Isolated holes closer to the reed should ease the emission. But with the long column and high modes, I hope to soften the sound and stabilize the intonation. Can less high instruments use this system? The keyworks make several joints difficult, and a soprano would be as long as a tenor, which doesn't look good either. Folded in three like a trumpet? Can a bassoon of normal length use the pedal notes, and the present easier fingerings for the high notes? Assembly seems difficult then, while my alternative system there (scienceforums) has good joint lengths, simple keys and halfway decent fingerings. A high clarinet is less simple. The widely spaced modes need 9 or rather 10 main holes. The mode 3 an octave higher than a soprano needs a body as long as an alto. Marc Schaefer, aka Enthalpy
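The interval list in the soprito post (2 semitones for mode ratio 8/9, 3 for 5/6, 4 for 4/5, 5 for 3/4, 7 for 2/3) can be checked against equal temperament. The sketch below, with hypothetical names, converts each listed mode ratio to cents and prints how far it lies from the equal-tempered interval with the same semitone count.

```python
import math

# Semitones above the main hole -> (lower mode, higher mode), as listed in the post.
MODE_RATIOS = {2: (8, 9), 3: (5, 6), 4: (4, 5), 5: (3, 4), 7: (2, 3)}


def cents(ratio):
    """Interval size in cents (1200 per octave)."""
    return 1200.0 * math.log2(ratio)


def offset_from_equal_temperament(semitones, lower, higher):
    """How many cents the mode ratio higher/lower lies above the equal-tempered interval."""
    return cents(higher / lower) - 100.0 * semitones


for semis, (lo, hi) in MODE_RATIOS.items():
    offset = offset_from_equal_temperament(semis, lo, hi)
    print(f"{semis} semitones, modes {lo}->{hi}: {offset:+.2f} cents")
```

The minor third (5/6) and major third (4/5) land roughly 16 cents sharp and 14 cents flat, while the fourth, fifth and major second stay within about 4 cents. That spread is one reason why being able to place each isolated hole independently to adjust the intonation, as the post notes, matters.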

-

Do we add fields or intensities in the double-slit experiment?

Enthalpy replied to aknight's topic in Quantum Theory

When the photon's polarisation is uncertain, it gets decided at the detector (or at any interaction sensitive to the spin). That's the point of the Einstein-Podolsky-Rosen "paradox". This prevents writing the photon's wavefunction as an electric field. Already the possibility for the photon to be right- or left-polarized with identical probability, say as emitted by the 3s->2p transition, forces us to write the wavefunction as a function of the polarisation. Writing it independently of the polarisation would give a zero sum, or a definite linear polarisation that would be inconsistent with the possibility to detect that photon with any linear polarisation. The spin is usually included in the description of a photon. Very few detectors are insensitive to the polarisation, bolometers being one example. And if you write the propagation equation for the photon, you say (or forget to say) that the polarisation is not parallel to the propagation. So the polarisation is vital to the photon. Does writing psi(position, time, polarisation) make the description dependent on the detector? Why should we describe an attribute, if not because we observe it?

-

Hey Swansont, I hope you are only conducting tests for some potential employer. What you ask here is not your normal level. The wavefunction must be narrow behind each slit in order to spread with the distance, so that the illumination from the two slits overlaps in some screen region. Within that overlap region, interferences are observed and prove that the photon passes through both slits. In this experiment, the obstacle that bears the slits is chosen opaque enough to neglect light passing through. Nor would I model this absorption by an energy barrier, as barriers are difficult for photons, and because barriers reflect particles instead of absorbing them. And light passing through such an obstacle wouldn't be tunnelling.
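The point that narrower slits spread wider, so their beams overlap sooner, can be put in numbers. A rough sketch under stated assumptions: each slit's central diffraction lobe is treated as a cone of half-angle $\theta$ with $\sin\theta = \lambda/w$ (the single-slit first minimum), and the lobes of two slits a distance $d$ apart start to overlap beyond $z = d/(2\tan\theta)$. The function names and the wavelength and sizes in the example are illustrative, not from the post.

```python
import math


def lobe_half_angle(wavelength, slit_width):
    """Single-slit first minimum: sin(theta) = wavelength / slit_width (needs slit_width > wavelength)."""
    return math.asin(wavelength / slit_width)


def overlap_distance(wavelength, slit_width, slit_separation):
    """Distance beyond which the central lobes of two slits, each a cone of half-angle theta, overlap."""
    theta = lobe_half_angle(wavelength, slit_width)
    return slit_separation / (2.0 * math.tan(theta))


# Illustrative values: 500 nm light, slits 100 um apart.
for width in (20e-6, 10e-6, 5e-6):
    z = overlap_distance(500e-9, width, 100e-6)
    print(f"slit {width * 1e6:.0f} um -> overlap beyond {z * 1e3:.2f} mm")
```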

-

As far as I know, all manufacturers of hydrogen cars store hydrogen under pressure at room temperature. Much research proposes to adsorb the hydrogen, make hydrides, use compounds hydrogenated at will (cyclohexadiene <-> benzene), cool the hydrogen without liquefying it, and so on. I suggested abnormally light alloys, as well as magnetostrictive and shape-memory alloys (https://www.scienceforums.net/topic/79128-alloys-to-store-hydrogen/). I do believe storing the hydrogen as a liquid is excellent for the mass, and the evaporation rate is quite acceptable for a vehicle in regular service (https://www.scienceforums.net/topic/73798-quick-electric-machines/?tab=comments#comment-738806). That would be perfect for helicopters and quadcopters, excellent for airliners. Less good for a car that may be immobile for months, but then a fuel cell can consume the boiled-off hydrogen to cool the remaining liquid. It was a project at Nasa for space probes, by the way. What happens to a hydrogen tank in a crash? Much the same as to a gasoline tank. In a small crash, nothing. In a big crash, a leak. Careful vehicle design saves the passengers in crashes up to 70km/h in good circumstances. What happens to the tank at 100km/h matters less if the passengers are dead anyway. A tank that resists 70km/h within a deformable car doesn't seem very difficult to me. For crash tests, I built indefinitely reusable hardware that decelerated from 120km/h within 0.15m; that's worse than a crash. Both metal and fibre tanks are conceivable. Storing methane is much like storing hydrogen, only easier, both as a gas and as a liquid: denser, less cold, leaks less, more heat capacity. With battery cars being simpler, easier to fill, and available, I fear the attempts with hydrogen cars are over. But for helicopters and airliners, batteries are far from satisfying presently, while hydrogen looks perfect.
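The crash-test figure (120km/h stopped within 0.15m) can be checked with constant-deceleration kinematics, $a = v^2/(2s)$ and $t = 2s/v$. Uniform deceleration is an assumption; real pulses have peaks above this mean. The function names are hypothetical.

```python
G = 9.80665  # standard gravity, m/s^2


def mean_deceleration(speed_kmh, distance_m):
    """Uniform stop from speed_kmh within distance_m: a = v^2 / (2 s), in m/s^2."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * v / (2.0 * distance_m)


def stop_time(speed_kmh, distance_m):
    """Duration of the same uniform stop: t = 2 s / v, in seconds."""
    return 2.0 * distance_m / (speed_kmh / 3.6)


a = mean_deceleration(120, 0.15)
print(f"{a:.0f} m/s^2 = {a / G:.0f} g in {stop_time(120, 0.15) * 1000:.1f} ms")  # 3704 m/s^2 = 378 g in 9.0 ms
```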

-

Hi siddesh, welcome! In some cases, there is a relationship with the density, in other cases not. When the viscosity of the liquid has no influence, typically when the "pores" are big holes, all the pressure serves to accelerate the liquid. In such a situation, the pressure drop equals the liquid's density multiplied by half the squared speed attained by the liquid, $\Delta p = \tfrac12 \rho v^2$. Then the liquid's speed (which tends to be uniform then) varies as the square root of the pressure drop and as the reciprocal of the square root of the density. The other extreme case is when the liquid's viscosity determines what speed it attains. Your word "pores" rather suggests this situation. Then the liquid's density has no direct effect at all. Only the viscosity has, and it is not related to the density: mercury for instance is dense and runny. The leakage throughput varies as the reciprocal of the viscosity. Sometimes both the density and the viscosity matter. Then no simple scaling is possible. When the exact shape of the flow is known and simple, which is rarely the case in a leak, some semi-empirical computations are possible, with the Reynolds number and so on. If the shape is known but not very simple, software can try predictions using finite elements.
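A minimal sketch of the two extreme scalings above, at equal pressure drop and geometry: throughput as $1/\sqrt{\rho}$ in the inertial case, as $1/\mu$ in the viscous case, plus the Reynolds number that hints at which regime applies. The function names are hypothetical, the fluid values are approximate room-temperature figures, and the regime boundary depends on the leak geometry, so no threshold is hard-coded.

```python
import math


def scale_inertial(q_ref, rho_ref, rho_new):
    """Big holes, viscosity negligible: dp = rho * v**2 / 2, so at equal dp, Q scales as 1/sqrt(rho)."""
    return q_ref * math.sqrt(rho_ref / rho_new)


def scale_viscous(q_ref, mu_ref, mu_new):
    """Fine pores, viscosity-dominated: at equal dp and geometry, Q scales as 1/mu; density drops out."""
    return q_ref * mu_ref / mu_new


def reynolds(rho, speed, size, mu):
    """Reynolds number rho*v*D/mu: low values point to the viscous case, high values to the inertial one."""
    return rho * speed * size / mu


# Approximate room-temperature values: water 1000 kg/m3, 1.0 mPa s; mercury 13 500 kg/m3, 1.5 mPa s.
print(scale_inertial(1.0, 1000.0, 13_500.0))  # mercury leak relative to water, big holes
print(scale_viscous(1.0, 1.0e-3, 1.5e-3))     # same, fine pores
```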

-

Are you kidding? That's for instance the position-momentum uncertainty. Or the diffraction limit of a lens, an antenna and so on.

-

Do we add fields or intensities in the double-slit experiment?

Enthalpy replied to aknight's topic in Quantum Theory

Wrong. The proper expression for a photon wavefunction looks like psi(position, time, polarisation). As psi must be a scalar, making it a function of the polarisation is the way to include the dependence of the amplitude on the polarisation. But in case you still believe that the electric field is the wavefunction of a photon, just show us how you write that electric field for a right-polarised photon emitted by a 3s to 2p transition. As the emission is isotropic, it shouldn't be difficult, should it?

-

About the original question: many experiments are made with photons, which are fragile particles, and this biases the understanding and most explanations of QM. Atomic Force Microscopes make observations and measurements of valence electrons (call them wavefunctions if you wish) without destroying the electrons, which go back to the original state afterwards. Interestingly, this microscope observes the same electron pair all the time, using one single electron pair, as opposed to a tunnel effect microscope, or to experiments with photons. Meditating on that should debunk many misconceptions about observations, perturbation, observability, and the statistical nature of the wavefunction or not. And the pictures are nice! See science.sciencemag.org, or search for Pentacene AFM. Other pictures also show the LUMO, not just the HOMO. It should be clear enough that:
- A measurement is not always destructive.
- It doesn't always change or "collapse" the wavefunction.
- The wavefunction can be observable and observed.

-

Why do people need fast/strong computers

Enthalpy replied to silverghoul1's topic in Computer Science

Software would help restore movie master records when they are lost, broken or too damaged. I don't know how much processing power it needs, nor whether it's already done. Take as many copies as possible, and the master too if available. Digitize everything. By comparing pictures from different copies, remove all the scratches and dust, and if needed the parts completely lost on some copy. Compare also successive pictures, especially if only one copy is available, which is easier for nearly static scenes, but heavy for characters and fast motions. If the many copies have a lower resolution than the master had, processing can recover some too.

==========

I'd like the same for sound records. Removing noise shots from one copy should work better than averaging many copies. The potential public is smaller, but for musicians, some records are invaluable, and master records are lost while many copies exist, say as discs. From Heifetz's beginnings, and more generally from the early twentieth century, I've heard only badly damaged copies, but many copies exist. Even more useful, we could hear how composers played their own pieces. Records exist from Eugène Ysaÿe or Béla Bartók for instance. Marc Schaefer, aka Enthalpy

-
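For the film-restoration idea in the post above, comparing copies can be sketched as a per-pixel median, one simple technique chosen here for illustration; the post doesn't name one. It assumes the copies are already digitized at the same size and registered (aligned) frame by frame, which is the hard part in practice. A scratch or dust speck present on only one copy is outvoted by the others. The function name `median_merge` is hypothetical.

```python
from statistics import median


def median_merge(copies):
    """Per-pixel median across aligned copies of one frame (each a list of rows of grey levels)."""
    rows, cols = len(copies[0]), len(copies[0][0])
    return [[median(copy[r][c] for copy in copies) for c in range(cols)] for r in range(rows)]


clean = [[10, 20, 30], [40, 50, 60]]
dusty = [[10, 255, 30], [40, 50, 60]]    # bright dust speck on this copy
scratched = [[10, 20, 30], [0, 50, 60]]  # dark scratch on this copy
print(median_merge([clean, dusty, scratched]))  # [[10, 20, 30], [40, 50, 60]]
```

With only two copies the median degenerates to the mean and cannot outvote a defect, so at least three aligned copies are needed for this kind of voting.

-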

Do we add fields or intensities in the double-slit experiment?

Enthalpy replied to aknight's topic in Quantum Theory

This maths will work in the present case but not generally. The correct expression for the photon's wavefunction is a complex scalar that also depends on the polarisation that the detector can observe. It is not a vector. I too learnt it wrong from my professor. For instance, a hydrogen 3s->2p transition can radiate a photon in any direction of space, and a left-polarized detector can intercept the photon with uniform probability in any direction. This is impossible to write as an electric field or any vector field, while a scalar psi does it easily. As well, the scalar psi can be generalized to entangled particles, while the electric field can't.

==========

aknight, are you wondering why you get the same interference pattern whether you compute at both slits the sums of amplitudes or the sums of squared amplitudes? Sorry, I have too little time to read your interesting but long post. This is normal, but it depends on the item sizes you chose for the simulation. With typical sizes chosen for real experiments, the observed interference at the screen won't tell whether to add the amplitudes or the squared amplitudes at the slits. The slits are chosen narrow so that the actual distribution of the amplitude within a slit doesn't influence the interference at the screen. Within the width of the interference at the screen, the phase at one slit changes little, so it can be anything. If you compute the diffraction rings from an aperture, a reflector, a lens... you see that the phase within the aperture is important. Through the phase distribution, a lens or mirror makes light converge or diverge. It also explains why light passing through a hole that is not too narrow continues in the same direction as it arrived. This is excellent for the consistency of the theory. I'd dislike a theory that adds amplitudes when computing at the screen, but squared amplitudes when computing at the slits.

-
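The double-slit claim in the reply to aknight, that the amplitude distribution inside a narrow slit doesn't show at the screen, can be checked numerically. A minimal sketch with illustrative sizes in units of the wavelength (slits 0.2 wide, 20 apart, screen at 1000): it sums complex amplitudes $e^{ikr}/r$ from sample points across both slits and compares a uniform amplitude across each slit with a cosine-tapered one. Names and parameter values are hypothetical.

```python
import cmath
import math


def screen_intensity(weights, slit_width, slit_sep, wavelength, distance, xs):
    """|sum of amplitudes|^2 at screen positions xs, summing e^{ikr}/r over sample points in two slits."""
    k = 2 * math.pi / wavelength
    n = len(weights)
    offsets = [slit_width * (i / (n - 1) - 0.5) for i in range(n)]  # sample points across one slit
    result = []
    for x in xs:
        total = 0j
        for centre in (-slit_sep / 2, slit_sep / 2):
            for w, off in zip(weights, offsets):
                r = math.hypot(distance, x - (centre + off))
                total += w * cmath.exp(1j * k * r) / r
        result.append(abs(total) ** 2)
    return result


def normalized(values):
    peak = max(values)
    return [v / peak for v in values]


N = 21
uniform = [1.0] * N
tapered = [math.cos(math.pi * (i / (N - 1) - 0.5)) for i in range(N)]  # zero at the slit edges
xs = [float(x) for x in range(-100, 101)]

a = normalized(screen_intensity(uniform, 0.2, 20.0, 1.0, 1000.0, xs))
b = normalized(screen_intensity(tapered, 0.2, 20.0, 1.0, 1000.0, xs))
print(max(abs(p - q) for p, q in zip(a, b)))  # small: the fringes don't reveal the distribution
```

Widening `slit_width` to several wavelengths should make the two normalized patterns diverge noticeably, which is the regime where the distribution inside the aperture matters.

-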

Rare earth ore provides them all, but it's reportedly difficult to separate Sc and Y from the lanthanides. Centrifuges could do it thanks to the well-spread molar masses: 45g for Sc, 89g for Y and, for La, 139g (138g for its rare isotope), with at least 44g difference, about 15 times the 3g difference between the uranium isotopes. Volatile compounds are rare. ScCl3, YCl3 and RCl3 need some 1800K to boil under 1atm, so hopefully 1000K gives a usable vapour pressure. Monoisotopic (isotopically pure) Cl would help a bit. Aluminum, maraging steel and graphite-epoxy can't operate that hot, and superalloys only allow slow rotation. But carbon-carbon could make the rotors, maybe with a thin hermetic metal liner. 2D carbon-carbon tubes withstand around 200MPa even when hot, varying a lot among the suppliers, so near-azimuthal winding should exceed that. With 2000kg/m3, a tube can rotate at $V = \sqrt{\sigma/\rho} \approx 316$ m/s, so $\tfrac12\,\Delta m\,V^2 \approx 0.26\,RT$ at 1000K, needing around 27 steps. More volatile compounds would reduce RT and enable the faster maraging or graphite-epoxy rotors. Salts of organic acids seem excellent, if data can be found or measured. Marc Schaefer, aka Enthalpy
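The rotor-speed and energy figures in the rare-earth centrifuge post can be reproduced in a few lines. Two relations are assumed here rather than taken from the post: the hoop stress of a thin rotating tube, $\sigma = \rho V^2$, and the equilibrium wall-to-axis separation factor of a gas in solid-body rotation, $\alpha = \exp(\Delta m V^2 / 2RT)$, which is where the ratio $\tfrac12\Delta m V^2 / RT$ enters. The post's count of about 27 steps depends on the target purity, which isn't given, so it is not recomputed. Function names are hypothetical.

```python
import math

R = 8.314462618  # gas constant, J/(mol K)


def rim_speed(allowed_stress_pa, density):
    """Thin rotating tube: hoop stress = density * V^2, so V_max = sqrt(stress / density)."""
    return math.sqrt(allowed_stress_pa / density)


def energy_ratio(delta_m_kg_per_mol, speed, temperature):
    """(1/2) * delta_m * V^2 / (R T), the exponent of the single-tube separation factor."""
    return 0.5 * delta_m_kg_per_mol * speed**2 / (R * temperature)


v = rim_speed(200e6, 2000.0)        # 200 MPa carbon-carbon, 2000 kg/m3
x = energy_ratio(0.044, v, 1000.0)  # 44 g/mol difference, 1000 K
alpha = math.exp(x)                 # wall-to-axis enrichment factor in one tube
print(f"V = {v:.0f} m/s, ratio = {x:.2f}, alpha = {alpha:.2f}")  # V = 316 m/s, ratio = 0.26, alpha = 1.30
```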

-

Centrifuges have an additional trick that I had not grasped. The contents drift slowly downwards at the bigger radius and upwards at the centre. This second movement establishes a vertical composition gradient in addition to the radial gradient, letting the centrifugal force act several times on the composition within a single tube. Very astute. The Wikipedia article doesn't say it (https://en.wikipedia.org/wiki/Zippe-type_centrifuge). So that's why the shape is a long cylinder, despite not being the strongest against centrifugal force. Here Hf would separate from Zr in a single tube, Ta from Nb too. But with graphite composite, the vertical drift may not even be necessary, and this allows shapes that rotate even faster.

----------

Other processes have achieved isotopic separation: gaseous diffusion, vortex, nozzles... (https://en.wikipedia.org/wiki/Isotope_separation#Practical_methods_of_separation). They look less interesting than centrifuges, but would separate the elements too.

-

Some rare earth metals have a distinctly smaller molar volume, especially Sc, obtained from the same ore, and a few more. Sc is reportedly difficult to separate. Would a metallic melt of small molar volume selectively dissolve these elements? Or the most easily molten or evaporated target elements, combined with the molar volume? I've listed some small solvent metals, volatile enough to be separated from Sc, Er, Ho. Some add drawbacks to the general explosion risk; Ag seems less dangerous. Alloyed solvents make eutectics.

| Group | Element | Molar volume (cm3/mol) |
|---|---|---|
| Small solvents | Mn | 7.35 |
| | Zn | 9.16 |
| | Ag | 10.27 |
| | Ga | 11.80 |
| | Li | 13.02 |
| | Mg | 14.00 |
| | Hg | 14.09 |
| Rare earths | Sc | 15.00 |
| | Er | 18.46 |
| | Ho | 18.74 |
| | Dy | 19.01 |
| | Tm | 19.13 |
| | Tb | 19.30 |
| | Sm | 19.98 |
| | Y | 19.88 |
| | Gd | 19.90 |
| | ... | ... |
| | Eu | 28.97 |

Marc Schaefer, aka Enthalpy

-

For copper, electrorefining needs a minimum of 0.5V to start (so the theories are oversimplified), and industries operate around 2V for decent speed. Monovalent ions then consume 0.2MJ/mol of electricity, an expensive energy that makes up a significant fraction of the copper cost, less so for silver. The evaporation of silver costs 0.3MJ/mol of much cheaper energy: heat. I quickly found comparative costs of energy for households, not industries:

| €/MWh, incl. VAT | Energy, households |
|---|---|
| 150 | Electricity (in France! Germany rather 300!) |
| 100 | Heating fuel |
| 86 | Natural gas |
| 43 | Logs |
| 35 | Wood chips |
| xx | Sunheat |

And a price for natural gas "at city gate": 4usd/1000cuft, or a variable 6usd/1000cuft as "industrial price" (eia.gov and eia.gov). 1000cuft contain 1156mol, whose lower heating value is 2×242+394-75 = 803kJ/mol (CH4 + 2 O2 -> CO2 + 2 H2O), so 6usd buy 928MJ = 0.26MWh, about 23usd/MWh. Electricity in industrial amounts costs rather 0.08€/kWh (statista.com):

| Price | Energy, industrial amounts |
|---|---|
| 80 €/MWh | Electricity |
| about 23 usd/MWh | Natural gas |

The metal condensation heat is available at a lower temperature than is needed to evaporate the metal. A kind of heat pump would save much heat but isn't trivial to build at such temperatures. After JC's comment, I also suggested the separation of Zn from CuZn, where distillation advantageously leaves all elements in metallic form. Different aspect.
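The energy arithmetic in the refining post can be checked in a few lines. The sketch assumes 2V per electron transferred, the combustion CH4 + 2 O2 -> CO2 + 2 H2O(g) with standard formation enthalpies of about -75, -394 and -242 kJ/mol, and the post's 1156 mol per 1000 cuft. The function names are hypothetical.

```python
FARADAY = 96485.33212  # C/mol


def electrolysis_kj_per_mol(voltage, electrons_per_ion):
    """Electrical energy per mole of deposited metal: n * F * U, in kJ/mol."""
    return electrons_per_ion * FARADAY * voltage / 1000.0


def methane_lhv_kj_per_mol(h_co2=-394.0, h_h2o_gas=-242.0, h_ch4=-75.0):
    """Lower heating value of CH4 + 2 O2 -> CO2 + 2 H2O(g), from formation enthalpies (O2 = 0)."""
    return -(h_co2 + 2 * h_h2o_gas - h_ch4)


lhv = methane_lhv_kj_per_mol()          # 803 kJ/mol: two moles of water per mole of methane
energy_mwh = 1156 * lhv / 3.6e6         # kJ -> MWh, for 1000 cuft
print(electrolysis_kj_per_mol(2.0, 1))  # about 193 kJ/mol, i.e. 0.2 MJ/mol
print(lhv, round(energy_mwh, 3), round(6 / energy_mwh, 1))  # usd per MWh at 6 usd/1000 cuft
```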

-

The effect of atom size matching between metal solute and solvent has long been known. One example there (I access only the abstract): sciencedirect.com. They measured the solubility of Ta and W in lanthanides at varied temperatures, and: "The solubility of [small] Ta and W vary inversely with the atom size of the lanthanide solvent." "W is a much better crucible material for the lanthanides than Ta". There too I access only the abstract: aip.scitation.org "Mol/mol solubility of W in Ce is 2ppm at 800°C, increasing to 240ppm at 1540°C." There on page 25: ameslab.gov "Sm, Eu, Yb and Tm can be melted in Ta crucible without contamination" (1815K for Tm). Also, they use metallic Ca to reduce R from RCl3, and later Ca is evaporated away from R. This gives me hope that, as I suggested previously:
- Some refractory materials let a distillation apparatus separate the most volatile lanthanides.
- Liquid Ca or another metal can selectively dissolve the biggest (smallest too?) lanthanides and later be evaporated away.

-

The Moon has no significant atmospheric pressure, so equilibrium alone would say that ice sublimes. But this equilibrium can take very long to reach if the ice is cold. Comets can keep ice for billions of years and release vapour only when passing nearer to the Sun. This leaves open the question of the origin of this ice on the Moon, since we don't find significant free water elsewhere. I could imagine, with no figures to support or invalidate it, that the solar wind brings hydrogen that somehow reacts with local oxygen, say from silicates. The situation is quite different on Mars, which has an atmosphere with significant pressure. Water vapour in the atmosphere, even in tiny proportion, can freeze on a soil cold enough, and sublime when the ground warms up. Very similar to Earth, just colder and with less vapour pressure.
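How slowly cold ice sublimes into vacuum can be estimated to order of magnitude. This is a hedged sketch, not a lunar model: it assumes a constant sublimation enthalpy of about 51 kJ/mol, a Clausius-Clapeyron extrapolation from the triple point (611.657 Pa, 273.16 K), Hertz-Knudsen loss into vacuum with an evaporation coefficient of 1, and bare ice of density 917 kg/m3. Real cold-trap temperatures, regolith cover and redeposition are outside it. Function names are hypothetical.

```python
import math

R = 8.314462618        # gas constant, J/(mol K)
M_WATER = 0.018015     # kg/mol
RHO_ICE = 917.0        # kg/m3
DH_SUB = 51_000.0      # J/mol, sublimation enthalpy taken as constant (assumption)
P_TRIPLE, T_TRIPLE = 611.657, 273.16  # Pa, K
SECONDS_PER_YEAR = 3.156e7


def vapour_pressure_ice(t):
    """Clausius-Clapeyron extrapolation from the triple point."""
    return P_TRIPLE * math.exp(-DH_SUB / R * (1.0 / t - 1.0 / T_TRIPLE))


def sublimation_rate_m_per_year(t):
    """Hertz-Knudsen loss into vacuum with evaporation coefficient 1, as ice thickness per year."""
    flux = vapour_pressure_ice(t) * math.sqrt(M_WATER / (2.0 * math.pi * R * t))  # kg/(m2 s)
    return flux / RHO_ICE * SECONDS_PER_YEAR


for t in (80, 100, 120, 150, 200):
    print(f"{t} K: {sublimation_rate_m_per_year(t):.1e} m/year")
```

The steep exponential is the point: around 200 K bare ice loses metres per year, while around 100 K the loss over billions of years stays at the millimetre scale.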

-

The electron affinity does count, but not in a simple way. For instance, the dipole moment of CO is small: 0.122D. So while it's a matter of charge and distance, predictions are difficult; experiment tells. Heavy software that computes molecular orbitals from first principles has a chance. I wouldn't trust too much estimation software that assumes additive rules on molecule subsets.

-

I suggested replacing Picea abies (spruce) with lighter Paulownia tomentosa (kiri) for the table of bowed instruments too, here on March 05, 2019. Koto luthiers reportedly don't get their Paulownia tomentosa from a company that selects the trees and quarter-saws them. They season the pieces for 2 years and carve plates along the L and T directions. Though, the length-to-width ratio of the violin family fits the L and R directions from quarter cuts. To balance the instrument, the back should be lightened too. Presently of Acer pseudoplatanus (sycamore), it sounds less strongly than the table if I read violin frequency responses properly, so it would need a bigger change than the table. Replacing Acer pseudoplatanus with Picea abies at the back would change more than Paulownia tomentosa does at the table. For instance some Pinus species have intermediate properties, but they are not available in musical-instrument quality. A thinner Acer pseudoplatanus back can be stiffened by a bass bar or bracings that pass under the sound post. The replacements must keep the resonant frequencies, not the thicknesses nor the mass. The frequencies depend on EL and on ER, which is not so simple. Maybe two different thickness ratios can be computed, from the ratios of EL, ER and rho between the materials, and some mean value used for a first prototype. The bass bar should change like the table; bending it would matter more with Paulownia tomentosa. The width could be kept and the height scaled like the table thickness. The anisotropic stiffnesses act differently on the bass bar, so the height needs further tuning. Replacing only the table and bass bar in a first prototype would already tell the effect and whether the back needs improvement too. Marc Schaefer, aka Enthalpy
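The "two thickness ratios, then some mean" idea in the Paulownia post can be sketched with the isotropic thin-plate scaling $f \propto h\sqrt{E/\rho}/L^2$ at fixed outline, applied once with $E_L$ and once with $E_R$. The geometric mean used as the compromise is an assumption (the post only says "some mean value"), the function names are hypothetical, and the numbers are placeholders, not measured wood data. The scaling ignores the anisotropic mode shapes, so it only gives a first prototype.

```python
import math


def thickness_ratio(e_old, rho_old, e_new, rho_new):
    """Thin-plate bending mode, same outline: f ~ h * sqrt(E / rho) / L^2.
    Keeping f requires h_new / h_old = sqrt((E_old / rho_old) / (E_new / rho_new))."""
    return math.sqrt((e_old / rho_old) / (e_new / rho_new))


def prototype_ratio(el_old, er_old, rho_old, el_new, er_new, rho_new):
    """One ratio from E_L, one from E_R, and their geometric mean as a first-prototype compromise."""
    r_l = thickness_ratio(el_old, rho_old, el_new, rho_new)
    r_r = thickness_ratio(er_old, rho_old, er_new, rho_new)
    return r_l, r_r, math.sqrt(r_l * r_r)


# Placeholder values only (Pa, Pa, kg/m3), NOT measured wood data: replace with real samples.
old = dict(el=10e9, er=0.8e9, rho=400.0)
new = dict(el=5e9, er=0.5e9, rho=280.0)
r_l, r_r, r_mean = prototype_ratio(old["el"], old["er"], old["rho"], new["el"], new["er"], new["rho"])
mass_ratio = r_mean * new["rho"] / old["rho"]  # plate mass per area, same outline
print(f"h ratio from E_L {r_l:.2f}, from E_R {r_r:.2f}, mean {r_mean:.2f}, mass ratio {mass_ratio:.2f}")
```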

-

An ascending gas jet can levitate an alloy drop to evaporate the more volatile metals without any polluting contact. If for instance the drops are as small as 10mm3 on a 10mm×10mm pattern, then 1m2 can process 0.5-1kg at once. A robot would place and possibly pick the samples. Hot argon is one natural choice to levitate and heat the droplets. It would carry away the vapour of the more volatile metal. The nozzles must resist the temperature, but they don't risk dissolving in the melt. The heat source can be cheaper than electricity. Maybe the condensation heat could be recycled, but being available at a lower temperature than evaporation needs, it would take some heat-pump equivalent, which isn't trivial at these temperatures. Smooth evaporation seems preferable to boiling. The carrier gas pressure should be chosen to achieve that. Marc Schaefer, aka Enthalpy
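The batch size quoted for gas levitation follows from simple counting. The sketch assumes one drop per 10mm×10mm cell and alloy densities of 5 to 10 g/cm3, which is what the post's 0.5-1kg range implies; the function name `batch` is hypothetical.

```python
def batch(area_m2, pitch_mm, drop_volume_mm3, density_g_per_cm3):
    """Number of drops on a square grid and the total alloy mass (kg) processed at once."""
    drops = area_m2 * 1e6 / pitch_mm**2           # one drop per pitch x pitch cell
    volume_cm3 = drops * drop_volume_mm3 / 1000.0  # 1 cm3 = 1000 mm3
    return drops, volume_cm3 * density_g_per_cm3 / 1000.0


for rho in (5.0, 10.0):  # assumed alloy densities, g/cm3
    drops, mass = batch(1.0, 10.0, 10.0, rho)
    print(f"{drops:.0f} drops, {mass:.1f} kg at {rho} g/cm3")
```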