Everything posted by Enthalpy

-

I'd strongly prefer a more knowledgeable person to answer... From page 3 of Claudio Casciano's Tesi di Dottorato, the emission depends on the second time derivative of the source's quadrupole moment. Since the moments add when you add more items (at least when taken at the center of mass of the rotating bar), the existence and fixed position of an additional heavy body should make no difference. Does that hold only for fields that aren't too strong?

-

So we'd block the man-made radio waves? Such a shield is made from a metallic fabric, as in Faraday cages, and lets much (enough?) light through. I find it difficult to let the shield orbit the Earth if it must cover all directions. Several layers would be needed, and it gets quite complicated. But one single part would be badly difficult too, almost as difficult as a space elevator, because it must resist its own weight. The cage would also need active steering with thrusters to stay centered on Earth instead of falling. Then you have to check the amount of matter. A huge enterprise for Mankind was to put wires to many homes, but they don't cover the whole area between the homes, and they run densely in towns and cities, not in countryside, deserts and oceans. Covering all Earth with metal wires isn't within our reach. The construction up there is a problem. Shield elements won't stay where we put them because of their weight, but we can't give them the orbital speed either. Plus more objections. In short: impossible for Mankind presently. If we really wanted to stop transmitting radio waves, we could. Connecting more homes to fiber optics is under way. Other communications, say with aeroplanes, could use light beams. Then we shut off all transmitters. All unintended radiation can be reduced as much as needed. This is within grasp if badly necessary.

-

Newspaper headlines being an ambitious goal, the satellite would be near to Earth, and then it makes an orbit in 96min or slightly less, much faster than Earth's 23h56min rotation, and there is no means to keep the satellite over the same Earth location. It's so for every low-orbit satellite. They take a picture when they fly over the location of interest. More satellites shorten the wait. I suppose - but am not completely sure - that blurring is sometimes a limit. Turbulence occurs at low altitude, under 17km - let's say around 8km - and at locations bad for astronomy it's like 1 arcsecond, which would blur the imaged target by 39mm. Though, other sites are better, and also this blurring is mainly a global move of the image; since Earth observation gets strong light from the Sun, simply a short exposure improves it. More refined methods exist, I feel they'd be difficult on a moving satellite, but such projects have money. So, yes, blurring is a difficulty. The resolution would be hard and expensive to achieve. A Keyhole is approximately the biggest that present launchers can put in orbit. Its main mirror is supposed to have 2.4m diameter, and it already uses the minimum altitude to achieve an estimated 75mm resolution. 7.5mm resolution for huge (40mm?) newspaper headlines would demand a D=24m mirror, which looks impossible. Beware, though, that Sapiens think. Violet or near-ultraviolet light would reduce the need to D=12m. The aperture can be synthesized from several smaller mirrors as on the James Webb Space Telescope. All that would be a huge effort for usually detrimental goals, but money exists precisely for such goals. My question would be: why? The resolution of Google Earth is already better than I need. Good enough for agriculture, home planning... The military need to see armoured vehicles, boats and aeroplanes, for which present images already tell the model.
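
The figures in this post can be rechecked with a few lines of arithmetic. The sketch below assumes small-angle geometry for the turbulence blur, a circular orbit for the period, and that the diffraction-limited resolution at a fixed altitude scales as wavelength over mirror diameter. The function names, the 300km test altitude and the Earth constants are illustrative assumptions, not taken from the post.

```python
import math

ARCSEC = math.pi / (180 * 3600)   # one arcsecond in radians
MU_EARTH = 3.986004418e14         # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6                 # mean Earth radius, m (assumed spherical)

def ground_blur_m(seeing_arcsec, layer_height_m):
    """Blur on the ground from a turbulent layer at the given height."""
    return seeing_arcsec * ARCSEC * layer_height_m

def orbital_period_min(altitude_m):
    """Period of a circular orbit at the given altitude, in minutes."""
    a = R_EARTH + altitude_m
    return 2 * math.pi * math.sqrt(a ** 3 / MU_EARTH) / 60

def scaled_mirror_m(d_ref_m, res_ref_m, res_target_m, wavelength_ratio=1.0):
    """Mirror diameter for a target resolution, scaled from a reference
    telescope at the same altitude (resolution ~ wavelength / diameter)."""
    return d_ref_m * (res_ref_m / res_target_m) * wavelength_ratio

print(ground_blur_m(1.0, 8000))                    # 1 arcsecond seen from 8 km
print(orbital_period_min(300e3))                   # a low orbit, assumed 300 km
print(scaled_mirror_m(2.4, 0.075, 0.0075))         # same light as the reference
print(scaled_mirror_m(2.4, 0.075, 0.0075, 0.5))    # half the wavelength
```

The last two calls only rescale the 2.4m and 75mm estimates quoted above; they say nothing about whether those estimates are right.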

-

Waveform of an electron

Enthalpy replied to petrushka.googol's topic in Modern and Theoretical Physics

It wasn't a good objection because I had not written such a thing. Sure, an orbital is the waveform around a nucleus, when stationary. If the (lone) electron is captive around a nucleus but not stationary, the wave is a linear combination of orbitals. If the electron is free, the wave can be written for instance as a linear combination of plane waves. Under other circumstances, different solutions appear.

-

You still haven't understood that protons (composite particles) are not created like electrons (elementary particles). With enough energy, that is way over the mass equivalent of two protons, you may obtain protons, but also many more particles, and protons don't need to be balanced by antiprotons. http://www.fnal.gov/pub/science/inquiring/questions/jackie.html 120GeV to get a small proportion of antiprotons. Also, even for electrons, the creation from two photons is very rare. The usual process involves a single photon (if not colliding with other particles) passing by an atomic nucleus. Then, the electron pair does not have opposite momenta. You could search for pictures taken in bubble chambers of electron pairs created by a gamma. The angle between the electrons' trajectories serves to determine the energy of the gamma.

-

When the power factor is bad it's almost always inductive. Not only does this increase losses in cables and transformers because the current is bigger for the same effect, it also makes alternators work under very unfavourable conditions - alternators are the main victims of the power factor. This results from the induction created by the stator as current is drawn from the alternator. Because inductive load is very detrimental and nearly universal, regulations (in the EU) want all appliances of medium and high power (like computers) to have a good power factor. At electronic power supplies it means a power factor corrector at the input. To compensate the big reactive power of a line, the traditional method was to free-run a synchronous machine. Nowadays, big expensive capacitors are used but not universally; they improve the shape of the current too. Better means would be very useful, it's just that nobody knows how. Traditional electricity meters observe only the active power, so in a first approach the burden would be for the electricity supplier only, not for the consumer, but electronic meters do whatever they're meant for, and electricity suppliers want a minimum power factor from their customers.
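
To put numbers on "the current is bigger for the same effect", here is a minimal sketch assuming a single-phase load with sinusoidal voltage and current, so that the power factor is simply cos(phi). The function names and the 2300W / 230V example values are illustrative assumptions.

```python
import math

def line_current_a(active_power_w, voltage_v, power_factor):
    """RMS current drawn for a given active power at a given power factor."""
    return active_power_w / (voltage_v * power_factor)

def relative_cable_loss(power_factor):
    """Resistive loss (I^2 * R) relative to the same load at power factor 1."""
    return 1.0 / power_factor ** 2

def reactive_power_var(active_power_w, power_factor):
    """Reactive power Q = P * tan(phi), with cos(phi) = power factor."""
    return active_power_w * math.tan(math.acos(power_factor))

for pf in (1.0, 0.9, 0.7, 0.5):
    print(pf,
          round(line_current_a(2300, 230, pf), 2),
          round(relative_cable_loss(pf), 2),
          round(reactive_power_var(2300, pf)))
```

The losses grow as the inverse square of the power factor, which is why the cables, transformers and alternators care even though a meter that only counts active power does not.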

-

It's good to have German newspapers, because the French population has learned almost nothing. On 9th April, 2014, some cooling water leaked in the nuclear power plant in Fessenheim, France. The incident was rated at severity 1 out of 7. That's what the French citizens have learned of it up to now. Apparently, the people who knew more didn't even bother to inform the French newspapers: they passed information to the German Süddeutsche Zeitung, definitely more useful. Details about the incident are better known through the request for information sent by the Autorité de Sûreté Nucléaire (ASN) to the electricity producer, Électricité de France (EDF). By the way, ASN is the regulator who still keeps the severity at 1 and could have informed the population. http://www.sueddeutsche.de/wissen/frankreich-panne-im-akw-fessenheim-war-gravierender-als-gedacht-1.2890408 (less detailed papers in other languages have cited this one) What the journalists have learned from the documents is nothing banal like the initial story was. Some water at ground level, but in the basement the control bays were out of function. The reactor's control rods did not respond. The generator could not be decoupled from the power grid. The chain reaction had to be stopped by dumping boron in the primary water. A few weeks later, the pipe network was put back into service during the very ASN inspection that was supposed to tell whether it could be put back into service. This incident is certainly a case to worry about. All normal control means were lost. Boron is a strict emergency means. Luckily, cooling and power were not lost during this incident. That's one difference with Fukushima. But the total loss of normal control means is similar. How the bloody f*ck can a water leak cause the loss of control of a nuke? Which subm*ron put electronic control bays at a location liable to flooding? The whole Fessenheim plant is 8m lower than the nearby Rhine river.

-

The material wouldn't be so exotic. A bunch of nonconnected conducting wires with a proper set of lengths should do it on Earth. It's more a matter of amount... Change to electricity: sure, make DC current with rectifiers at the antennas, since they work at microwave frequency. The absorption can't be perfect, but only for technological reasons. The field strength is presumably too small for usual rectifiers, but again I'd say not for physical reasons - as long as the received power clearly exceeds kT. And with the DC power, emit light in some direction that is neither Earth nor the shield. An easier way would convert the microwaves to heat radiated away as far infrared. And then? Our microwave emissions are insignificant to the thermal balance of the planet. Do whatever you want with them. For the thermal emission of microwaves, the theoretical feasibility of the shield gets difficult. It depends on whether you want to reflect the waves (works) or absorb them (the absorber emits microwaves too, necessarily). But in every case, the effect on Earth's heat balance is negligible since bodies around 288K radiate mostly around 10µm, and if one band is blocked, the heat passes through the others.
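
The "288K radiates mostly around 10µm" figure follows from Wien's displacement law, and kT at that temperature gives the energy scale mentioned for the rectifiers. A small sketch, assuming an ideal black body; the function names are illustrative.

```python
WIEN_B = 2.897771955e-3      # Wien displacement constant, m*K
K_BOLTZMANN = 1.380649e-23   # Boltzmann constant, J/K

def peak_wavelength_um(temperature_k):
    """Wavelength of maximum black-body emission, in micrometres."""
    return WIEN_B / temperature_k * 1e6

def thermal_energy_j(temperature_k):
    """Thermal energy scale kT, in joules."""
    return K_BOLTZMANN * temperature_k

print(peak_wavelength_um(288))   # close to 10 µm
print(thermal_energy_j(288))     # kT at 288 K, roughly 4e-21 J
```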

-

Waveform of an electron

Enthalpy replied to petrushka.googol's topic in Modern and Theoretical Physics

I wrote in #5: "the electron is an orbital, when stable around a nucleus.". I'm still pleased with that formulation. In #6 you misquoted me as "the electron is an orbital", which is bad practice in English too, and misused it to drift to "Please describe the 'orbital' of a free electron." It still holds that:
The "orbital of a free electron" is nonsense.
I didn't write this nonsense.
You did write this nonsense and attributed it to me.
I showed your bad practice. I have no regret about it.

-

From what you wrote previously, I had supposed that you know how to use your lab equipment. It's more the figures about thermal noise, quantum energy, and probability of two simultaneous quanta that are apparently against you, hence I'm keen on reading your description and figures. It's the case of many experiments, for instance Ligo. Under conceivably unreasonable assumptions they'd have no chance to be that sensitive - but the experimenters have found tricks.

-

The observation of remote stars comes too late to resolve the state of the emitting atoms. Since the emission, each atom has already been "observed" by many collisions with other atoms.

-

What I wrote is still correct. No proton pair production at 1877MeV, no need for proton pairs to guarantee the conservation of all necessary quantities, and so on. Swansont, as usual when you make a blunder, you switch to rhetoric. I wish to remind you that rhetoric is not a part of physics.

-

The difference I see (which should be small) is that with more movement, heat will spread more easily within the egg from the same +100°C. Heat exchange from the boiling liquid to the solid is known to be excellent, among other reasons because vapour bubbles can condense at the solid to transfer as much heat as is needed to keep it at +100°C. By contrast, the viscous egg interior is definitely less efficient at carrying heat, and is the limiting factor - agreed, MacSwell. So movement should accelerate cooking, but little, because under the shell where the egg is already solid it won't change anything. So I continue to save electricity by boiling the water as gently as possible.

-

Fun. But - and that's intriguing with electromagnetism - the effect on the conductor doesn't depend on the induction B at the conductor: it depends on the induction within the loop. Whether you write it as d(flux)/dt or as a curl, what matters is the induction B through the surface bounded by the conductor loop. In your example, the shield changes the induction at the conductor, fine. But it doesn't change the flux through the loop. Take a square loop for simplicity and a symmetric shield around the wire:
The shield soaks up some flux and channels it.
Half that flux would have passed through the loop without the shield and half outside.
With the shield, half that flux passes through the loop and half outside.
So the flux hasn't changed and the induced voltage neither. No effect by the shield on the usefully consumed electricity, nor consequently on the resultant force. Sorry I again answered in a different way than you maybe hoped. Thinking in terms of loop voltage here is easier than checking where along the loop the voltage is induced. This isn't an intuitive part of electromagnetism. EM is tricky indeed. The A field, the vector potential, would be more physical just here because its value at the conductor affects the induced voltage. Problem: we measure the induction B, not the potential A, and the materials respond rather to B, for instance in their saturation. The vector potential A is often useful, especially to compute induced voltages in electromagnetic compatibility. Sometimes the shape of the conductors makes the integrals infinite if you try to evaluate a flux and a resulting voltage, while Biot & Savart easily give an answer from dA/dt.
----------
I know a similar example of induction at versus within the conductor. Take a voice coil motor - you know, like in a loudspeaker. But this one is built with a short coil in a long uniform magnetic field, for instance because the iron poles saturate. You expect a force B*I*L, whether the induction B is uniform or not. But as the force produces work, you expect a counter-electromotive force, which needs a flux variation. The flux varies indeed, although the induction is constant at the conductor, because the induction varies far from the conductor, at the magnet or pole pieces within the loop. Every flux that passes from one pole to the other at any height element isn't in the pole any more beyond that height element, since the flux is conserved.

-

No. Electric vehicles are much quieter. Petrol cars too when they coast on their momentum. Noise isn't a good indicator of losses either. An electric heater dissipates every kW fed in, but rather quietly. And at a good pace, aerodynamic losses exceed rolling losses for usual cars.

-

I know by experience that even the best compilers convert decimal constants like 0.25 only approximately to binary floats. This isn't even a mistake from them: they're within the granted tolerance. The programmers have to know it, not the compilers. It comes from 0.1 having no exact representation in binary, because 5 doesn't divide any power of 2. Just like 1/3 has no exact representation in radix 10: it's 0.333 approximately, and it remains approximate however many places you take. Then, if you multiply by 3, you get 0.999 and not 1. If your arithmetic computes to three decimal places, the result is correct within the accepted tolerance, and it's the programmer's duty to live with that. Now, a compiler that reads the constant zero dot two five is likely to
Load 5 (as a binary double float)
Multiply by 0.1 <= This is already inaccurate because 0.1 has no exact representation
Add 2
Multiply by 0.1 <= Again a source of inaccuracy
Dividing by 10 would improve nothing because all the binary machines I know make double float divisions by first computing a reciprocal and then a multiplication. A compiler can't recognize all constants that have an exact representation as binary double float either, because there are too many. The result is approximate as a consequence. It is allowed to be approximate. And I have observed it. It fully explains the loop's behaviour here. What seems to work up to now is that integer constants are well represented by double floats in javascript.
----------
Can someone tell us how Java converts the datatypes when asked to perform that?
double % literal_int
In my opinion, something fails there too.
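
Which constants a given language stores exactly can be tested rather than guessed. The sketch below uses Python, whose parser may well behave differently from the compiler discussed in this thread, so it only illustrates the representation question and not that compiler. `is_exact` and `stepwise_025` are hypothetical helper names; the second one replays the four steps listed above and prints the bits it obtains instead of assuming a result.

```python
from fractions import Fraction

def is_exact(decimal_text):
    """True if Python stores this decimal literal exactly as a binary double."""
    return Fraction(float(decimal_text)) == Fraction(decimal_text)

def stepwise_025():
    """Digit-by-digit evaluation as listed in the post: 5, *0.1, +2, *0.1."""
    x = 5.0
    x = x * 0.1   # 0.1 itself is not exact in binary
    x = x + 2.0
    x = x * 0.1
    return x

for text in ("0.25", "0.5", "0.1", "0.3", "32"):
    print(text, is_exact(text))

# Accumulated error from an inexact constant: ten times 0.1 is not 1.0
print(sum([0.1] * 10) == 1.0)

# Compare the bit patterns of the stepwise route and the plain literal
print(stepwise_025().hex(), (0.25).hex())
```

Whatever the conversion does, a loop test written against a tolerance, or a loop counted with an integer, does not depend on it.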

-

This way of thinking is extraordinarily immodest, even more so with biology, and can only lead to mistakes. Even in better established science, refusing an experimental result because you don't understand how it happens would be a mistake. How could this possibly be done in biology, where so little is known? 10 years ago, all cells of a body were supposed to carry exactly the same DNA. 35 years ago, prions didn't exist. 60 years ago, human retroviruses didn't exist. This was universal knowledge then (and rather dogmatic in the case of medicine). The combination of several viruses is a recent finding, as well as the natural injection by viruses of one species' DNA fragment into another species' DNA. How many observations would you have wrongly rejected then for being unexplainable? As for the study about parotid cancer, it has been repeated and confirmed.

-

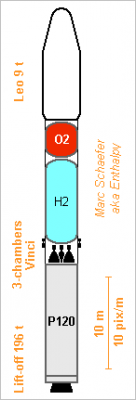

Europe has plans to develop a liquid stage put over one solid P120 booster made for Ariane 6 and Vega, nice, good. But sources suggest a new methane engine for it, which makes little sense. At identical expansion ratio, methane gains 9s Isp over Rg-1 "kerosene", yes. But so does the denser and easily produced cyclopropane. The storable safe Pmdeta gains 3s. http://www.chemicalforums.com/index.php?topic=79637.msg290422#msg290422 But a useful comparison must take the same pumping power and nozzle diameter, and then Pmdeta is as efficient as methane because its expansion is better. Cyclopropane does improve over Pmdeta, but only by 200kg, although this stage is difficult. Ariane 6 already plans hydrogen and the Vinci. Commonality tells to spread it to the middle-sized launcher, not to add a fuel and engine. Hydrogen puts 1.5x methane's payload in orbit. It needs only 3 existing Vinci chambers and short nozzles. A common turbopump can be just upscaled from the Vinci. A common actuator set is easy. Obviously cheaper than a new engine. Two US companies want methane to reuse hopefully clean engines, but hydrogen is even cleaner, and so should amines recomposition be, both in staged or gas generator cycles. http://www.scienceforums.net/topic/82965-gas-generator-cycle-for-rocket-engines-variants/ http://www.scienceforums.net/topic/81051-staged-combustion-rocket-engines/#entry785456 (with a safer amines mix). The Europeans can't overtake US companies by copying them with a decade lag. Two new engines would probably replace the Vulcain at Ariane 6, just like SpaceX will supposedly use two methane engines at a Falcon 9 first stage, but a seven-chamber Vinci does it better. Here's what the launcher can look like with the three-chamber Vinci: The design is difficult because a P120 pushes an estimated 2500kN at the end for Ariane 62, so the full liquid composite must weigh 52.5t to limit the acceleration to 4G and it must provide 6558m/s to Leo. This bad staging needs light tanks but achieves 9t in Leo, deserving a wider fairing than Soyuz ST. Details should come. Marc Schaefer, aka Enthalpy
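
The 4G constraint above can be rechecked in a few lines. This is only a sketch: it assumes the 2500kN end-of-burn thrust acts on the whole stack, and reading the gap between the result and the 52.5t liquid composite as the nearly empty P120 is my inference, not a figure from the post. The function name is illustrative.

```python
G0 = 9.80665  # standard gravity, m/s^2

def min_mass_for_accel_kg(thrust_n, accel_limit_g):
    """Smallest total mass that keeps the acceleration at or below the limit."""
    return thrust_n / (accel_limit_g * G0)

total_kg = min_mass_for_accel_kg(2500e3, 4.0)
print(round(total_kg / 1000, 1))          # whole stack at booster burnout, in t
print(round(total_kg / 1000 - 52.5, 1))   # what is left for the spent booster, in t
```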

-

At least one study found an increase in parotid gland cancer related to the use of cell phones. By Rakefet Czerninski, Avi Zini and Harold Sgan-Cohen at the Hebrew University in Jerusalem at the Hadassah School of Dental Medicine. The topic, which was heavily debated before any hard evidence existed, then vanished from the public debate, for reasons I don't know. Be careful with logic like "not ionizing hence no cancer". Mankind knows almost nothing about biology and medicine, so such reasoning often fails. It's more prudent to stick to the experimental approach.

-

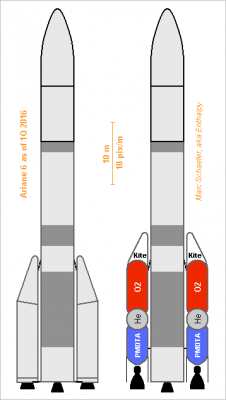

Reused "sailback" boosters can replace the solids at the new definitive version of Ariane 6. Here they have the same 143.7t and D = 3.5m as the solids. The D=2.4m nozzle pushes 3500kN in vacuum (2500kN at the end) and 3040kN at sea level. 197:100 Pmdeta:O2 expands from 36bar (26bar at the end) to 62kPa, achieving Isp = 2897m/s = 295s in vacuum and 2518m/s = 257s at sea level. The solids achieve 2741m/s = 280s in vacuum. The thick welded Maraging steel (not cold-worked) lets the empty boosters weigh 126kg per ton of propellants while the solids' wound graphite achieves 84kg per ton. This cancels out the propellant efficiency. The sailback boosters are compatible, slightly taller, safer to manufacture and use, and reused. Marc Schaefer, aka Enthalpy
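
The Isp pairs quoted above are the same quantity in two units, and the kg-per-ton figures fix the dry mass of each booster. A minimal sketch, assuming that 143.7t is the total mass of one booster (propellant plus empty structure); the function names are illustrative.

```python
G0 = 9.80665  # standard gravity, m/s^2

def isp_seconds(exhaust_velocity_ms):
    """Specific impulse in seconds from the effective exhaust velocity."""
    return exhaust_velocity_ms / G0

def dry_mass_t(total_mass_t, dry_kg_per_ton_propellant):
    """Empty mass, given total mass and empty mass per ton of propellant."""
    ratio = dry_kg_per_ton_propellant / 1000.0
    return total_mass_t * ratio / (1.0 + ratio)

for velocity in (2897, 2518, 2741):
    print(velocity, round(isp_seconds(velocity)))

print(round(dry_mass_t(143.7, 126), 1))   # welded steel liquid booster
print(round(dry_mass_t(143.7, 84), 1))    # wound graphite solid booster
```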

-

Integer values and 1/2^n don't BUT the compiler is often inaccurate when converting "0.25" because this is written in radix-10 and 5 does not divide the powers of 2. So the double representation of "0.25" may well differ a little bit and end with 111111..., which suffices to foil the "i<36" test. I'm surprised by the "i % 32" that apparently returns 1 every time. It should have worked among doubles according to http://mindprod.com/jgloss/modulus.html but what happens in Java during the type conversion? 32 is some sort of int. This program is a collection of what shouldn't be done.

-

A banal PC too suffers from limited Dram bandwidth. The OS and many applications fit in some cache, but databases, Internet servers, file servers, scientific computing and more would need to access quickly a memory range that exceeds the caches, and they stall or need intricate programming. Many scalar Cpu with a small private Dram, as previously described, solve it but change the programming model, so the general PC market would be unlikely to adopt them. But for instance a quad-core Avx Cpu can be stacked with a common Dram as described there http://www.scienceforums.net/topic/78854-optical-computers/page-5#entry898348 to provide the good bandwidth and keep existing software. The Dram, in the same package as the Cpu, has a limited and frozen capacity. At the end of 2015, a 1GB Ddr4 chip measured 9.7mm*5.7mm hence 8GB take 20mm*23mm. This is half the Knights Landing area, so mounting and thermal expansion are already solved, and Dram accepts redundancy. Reasonable programming wants 2 reads/cycle at least, so a 3.5GHz quad Avx256 needs 112GW/s of 64b (900GB/s). If the Dram's smaller banks react in 18ns, then 2047 banks, with 64b wide access, suffice. Favour latency over density. The previously described scaled indexed and butterfly data transfers are desired. Cascaded logic of course. Transfers every 18ns between the chips need 131,000 contact pairs. At 5µm*5µm pitch that's 1.8mm*1.8mm plus redundancy. Routing in 4*1.8mm width takes 2 layers of 100nm pitch. 1ns transfer cycle eases it. No L3 should be needed, maybe a common L2 and private L1 pairs. Many-socket database or math machines don't need a video processor per socket either. The computing chip shrinks. More cores per chip are possible but more sockets seem better. I've already described interconnections. Flash chips deliver 200MB/s as of end 2015. They should have many fast direct links with the compute sockets. Marc Schaefer, aka Enthalpy
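
The bandwidth, bank and contact figures above chain together as follows. The sketch assumes one 256-bit vector unit per core, hence four 64-bit words per read; variable and function names are illustrative.

```python
import math

def words_per_second(clock_hz, cores, words_per_vector, reads_per_cycle):
    """64-bit words the cores can request per second."""
    return clock_hz * cores * words_per_vector * reads_per_cycle

rate = words_per_second(3.5e9, 4, 4, 2)   # 3.5 GHz quad core, Avx256, 2 reads/cycle
bandwidth_bytes = rate * 8                # 8 bytes per 64-bit word
banks_needed = rate * 18e-9               # each bank delivers one word per 18 ns
contacts = 2047 * 64                      # one contact pair per bit and bank
side_mm = math.sqrt(contacts) * 5e-3      # square array at 5 µm pitch

print(rate / 1e9, "GW/s")
print(bandwidth_bytes / 1e9, "GB/s")
print(banks_needed, "banks at least")
print(contacts, "contact pairs")
print(round(side_mm, 2), "mm side")
```

The bank count comes out slightly under the 2047 chosen in the post, which leaves a small margin.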

-

Waveform of an electron

Enthalpy replied to petrushka.googol's topic in Modern and Theoretical Physics

You changed my meaningful sentence into nonsense that you attributed to me. This is unfair and I underline it. Expect me to do it every time. I did not write that free electrons are orbitals. You did and attributed it to me.

-

Yes, I've found some math on 14 January 2016 - 08:32 AM there. I find the subject very interesting, so I'd like to form my opinion about the experiment. While I understand that you sample many pulses and that the SNR varying as the sqrt is an excellent sign, I'll be happy when you publish the circuit diagram, details about the attenuator and antenna and their measured or estimated losses, hence their own noise. Also, whether it's important or not to the experiment that a single photon is brought to the near-field coupler, or if two photons from time to time are acceptable, and how this influences the experimental result. My feeling is that the question of whether the near field is quantized or not is absolutely legitimate, that many details (beginning with the noise) are liable to hamper such an experiment so, as a scientist, I want to check them by myself at least on paper, and that the experiment would be interesting to reproduce with more favourable parameters, maybe (if necessary) a higher frequency and a lower temperature.

-

After all... The boiling water transfers heat much more efficiently than the egg material in the shell, which is the limiting factor. Shaking the egg improves this limiting transfer, so strong boiling could harden the egg a bit faster. Anyway, I'll continue to save electricity by boiling as gently as possible.