Everything posted by Enthalpy

-

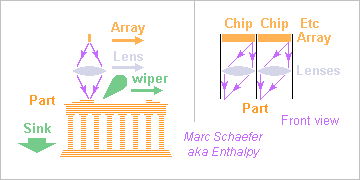

To go faster but keep the rapid prototyping ability, the second proposal sweeps an array of switchable sources or faders over the new part layer. It sounds logical - though not mandatory - to sweep the array together with the wiper and have the array cover the machine's width. Then, the movement much resembles a flatbed scanner.

One mature candidate for a fader array is Mems, micromachined actuators. Some can route an external light source, like the ones developed long ago for telephone routers; then a common lamp could feed many faders, for instance through a light guide.

Liquid Crystal Displays too can align many small pixels, reflective or transmissive. I just doubt that they survive the UV.

UV Leds or laser diodes are the proper solution, but depending on the necessary wavelength, they may already exist or not quite. Leds for 385nm and 365nm are excellent in 2015, for instance Nichia http://www.nichia.co.jp/en/product/uvled.html offers almost 50% power efficiency and 3.5W light in 45mm2, so that an array of 30 chips of hundred 50µm*50µm Leds side by side would emit 1.5W. Leds provide up to now only 1mW at shorter waves (260nm) but this will improve, and lasers follow Leds a few years behind.

Whatever the array's sources or faders, they can have lenses to maximize the light and keep some distance to the prepolymer bath. One lens per chip seems logical, and if it widens the image a bit, it permits clearance between the chips. Putting the chips on several rows also eases the distance requirement.

All arrays of sources or faders can have several rows, where several active elements illuminate a point of the part successively. This much increases the optical power (for instance *20 with as many Led rows on a chip), hence the speed, and gives redundancy. If the active elements are smaller than the part's definition, software can correct movement imperfections.

Marc Schaefer, aka Enthalpy

-

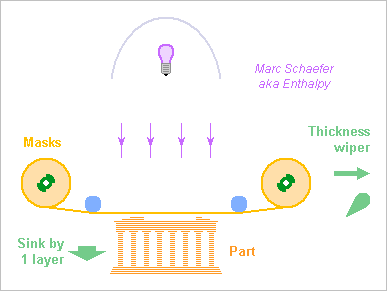

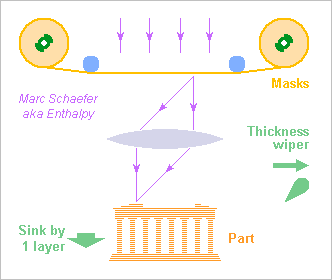

Hello everybody!

3D printing spreads out as a means to obtain parts without the shape, production volume and delay constraints of machining: https://en.wikipedia.org/wiki/3D_printing

Among several processes, the older stereolithography still makes the most detailed parts https://en.wikipedia.org/wiki/Stereolithography and here I propose methods to make it faster (or less slow).

Stereolithography produces a part as successive layers from a bath of a prepolymer that hardens by light. The part sinks by a small amount like 0.1mm, a wiper defines an accurate thickness of liquid, and UV light hardens it where the solid shall be, by mirrors steering the beam of a laser. Successive layers take time.

Acousto-optic beam deflectors would be faster than mirrors but the He-Cd laser limits the speed anyway, with optical power and efficiency like 100mW and 0.01%. 325nm wavelength is supposedly needed to stop the light within a part's layer, and continuous wave avoids material ablation, leaving little choice among lasers.

----------

A first proposal irradiates the part with a lamp and a mask instead of a steered laser beam. The many masks - one per layer - must be produced first, which speaks against rapid and cheap prototypes. Then, the exposure needs no laser, and >kW lamps, 10-40% efficient, exist at 308nm (XeCl), 254nm (lp-Hg) and 365nm (Led and hp-Hg), to produce each layer quickly. A shutter helps with slow lamps.

A polymer film can hold the successive masks. Fluorinated polymers offer UV resistance and transparency; some are decently strong. Moving the film between two rolls changes the mask; the accurate position needs active measurement and adjustment.

The mask can be lowered close to the future layer after the wiper passes, needing no extra optics. 2mm above the resin, diffraction by a 100µm wide line adds only 2*10µm, while a D=20mm lamp at 1m adds 2*20µm and outputs tens of watts.

Or optics project an image of the mask on the future layer, and the masks stay permanently at a stable and clean distance from the resin. Semiconductor lithography can provide inspiration but the scale is easier here. Diffraction isn't a limit, and the lamp can be wide hence powerful. The masks can be smaller (or bigger) than the part with proper optics.

The masks could be reflective instead of transparent, with the light source below.

Up to now, 3D printing doesn't spring to mind for series production, but some usual functions do need fine intricate shapes: heat exchangers, filters, batteries, catalyst holders, injectors, chemical microreactors and more - as the final polymer part or as a support for a metal layer.

Marc Schaefer, aka Enthalpy
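
The blur figures quoted for the mask held 2mm above the resin can be checked roughly. This is only a sketch under my own assumptions: the diffraction spread per edge is taken as (wavelength / line width) * gap, the lamp's geometric blur per edge as (lamp radius / lamp distance) * gap, and 365nm is picked as the wavelength. The function names are made up for the illustration.

```python
def diffraction_spread_um(wavelength_um, line_width_um, gap_um):
    """Rough spread per edge: diffraction angle ~ wavelength / width, times the gap."""
    return wavelength_um / line_width_um * gap_um


def penumbra_um(lamp_diameter_mm, lamp_distance_mm, gap_um):
    """Geometric blur per edge: lamp half-angle (radius / distance) times the gap."""
    return (lamp_diameter_mm / 2) / lamp_distance_mm * gap_um


GAP_UM = 2000.0  # mask 2mm above the resin, as in the post

# Assumed wavelength 0.365um (365nm); line 100um wide
diffraction = diffraction_spread_um(0.365, 100.0, GAP_UM)
# D=20mm lamp at 1m = 1000mm
penumbra = penumbra_um(20.0, 1000.0, GAP_UM)

print(f"diffraction: about {diffraction:.1f} um per edge")
print(f"lamp penumbra: about {penumbra:.1f} um per edge")
```

With these assumptions the diffraction term comes out a bit under the 10µm per edge given above, and the lamp term matches the 20µm per edge, so both figures are consistent as orders of magnitude.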

-

Scenario for a shorter drift - two buoy groups must be the maximum: The flaperon drifted from 201403 to 201507 (all months are inclusive), or 17 months. The group of buoys reproducing the end of the drift can arrive in 201607: it follows the flaperon 1 year later. If this group is immersed in 201510 (in 5 weeks), it reproduces 10 months of drift. The other group must wait until 201603 for immersion near the supposed crash site: it follows the flaperon 2 years later. The 7 months not covered by the first group end in 201609. We get a result in 201609 (13 months from now) instead of 201707.
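
The month counts in this scenario are easy to get wrong by one, so here is a small check. It is only a sketch: the helper names are mine, and dates are written as YYYYMM integers with both end months counted, as in the post.

```python
def month_index(yyyymm):
    """Convert a YYYYMM integer into a running month count."""
    year, month = divmod(yyyymm, 100)
    return year * 12 + (month - 1)


def months_inclusive(start, end):
    """Number of months from start to end, counting both end months."""
    return month_index(end) - month_index(start) + 1


flaperon = months_inclusive(201403, 201507)    # full drift of the flaperon
downstream = months_inclusive(201510, 201607)  # group reproducing the end of the drift
upstream = months_inclusive(201603, 201609)    # group dropped near the supposed crash site

print(flaperon, downstream, upstream)
```

The two groups together cover 7 + 10 = 17 months, the same as the flaperon's drift.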

-

To better estimate MH370's crash location, a former seaman, François Vaudon, proposes to drop half a dozen buoys near the present estimated location at the same season and follow them by radio. Comparing their arrival with La Réunion, where a flaperon landed, would narrow the zone of possible impact, as Mr. Vaudon claims currents reproduce accurately.

I have no opinion about changes in the Indian Ocean induced by El Niño, which presently affects the Pacific. I do know that winds have a big influence on the drift of floating items, even if they're widely submerged.

Anyway, here is my two cents' proposal to avoid waiting 17 months for the arrival of the buoys: Cut the supposed path in several segments. Drop more buoys, not just at the estimated crash site, but also further down the path, where the debris is supposed to be some months later. That way, one can observe simultaneously the drift over several segments of the estimated path, and get the data before the upstream buoys arrive in Africa.

In the simplest example, all buoys can be dropped at the same season as the crash happened. The downstream group of buoys is dropped where the debris is estimated to be 12 months later. This reduces the waiting from 17 to 12 months.

The scheme can improve further. For instance, more buoys can be dropped about 6 months before the upstream groups (since presently we're past the season of the crash) at the estimated location 6 months downstream. Then the two groups at the 0 and 12 months locations need to drift for 6 months only, and we have a complete observation.
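
The segment idea can be put in a few lines. This is only a sketch with a made-up helper: it counts the drift time of each group once it is in the water, and ignores the wait for the right season before each group can be dropped.

```python
def drift_wait_months(total_months, cut_points):
    """Split the path at the given months; return the longest segment and all segments."""
    bounds = [0] + sorted(cut_points) + [total_months]
    segments = [b - a for a, b in zip(bounds, bounds[1:])]
    return max(segments), segments


print(drift_wait_months(17, []))       # single group dropped at the crash site
print(drift_wait_months(17, [12]))     # extra group at the 12 months location
print(drift_wait_months(17, [6, 12]))  # groups at the 0, 6 and 12 months locations
```

The longest segment sets how long the buoys must drift: 17 months, then 12, then 6, as in the text.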

-

Human knowledge has progressed thanks to Borexino, a neutrino detector at Gran Sasso, Italy. The paper is at Arxiv (thank you!), ref. 1506.04610v2 http://arxiv.org/abs/1506.04610

As I understand (or not) the paper: The detector is not directional. The team could discriminate neutrinos from nuclear reactors versus Earth beta radioactivity. They could infer a total beta radioactivity for Earth, from which alpha radioactivity and heat are deduced. This heat is consistent with the total terrestrial heat flux.

The detector doesn't tell from measurements where the terrestrial radioelements are located, and the deduced radioactive heat depends on the assumed distribution of radioelements in Earth. From a pre-existing estimate of the radioactivity of the crust, the authors deduce that more radioactivity originates deeper. "Deeper" is written as "in the mantle" in the paper, but this may be influenced by dominant theories. Unless I missed something, the observations don't discriminate between the mantle and the core. Or do they?

Fantastic results. We know very little about the depths of our Earth. Most data is seismic and needs extensive interpretation to deduce some information. Here we have measurements that rely less on models. I wish future experiments can refine the accuracy of the results, and (but how??) tell us some day where the radioactivity takes place.

-

Modify an engine, sure. At least, this will tell you what the real difficulties are: stability during the transients, destruction... while a simulation only gives, sometimes, sensible answers to already known questions.

I didn't find on the Web explanations about elementary questions https://en.wikipedia.org/wiki/Homogeneous_charge_compression_ignition http://www.nissan-global.com/EN/TECHNOLOGY/OVERVIEW/hcci.html they just tell "fantastic progress towards the future with many advantages" BUT:

Compressing the premixed air+fuel to ignition means that it ignites before the highest piston position. This is exactly what Otto engines try very hard to avoid since it destroys them. Diesel engines go the other way by injecting the fuel later. Why should an Hcci engine survive?

Can the engine run slowly? If ignition occurs at some compression ratio, slow pace gives the combustion plenty of time to stall the engine.

The fuel has a rather constant autoignition temperature, but the outer air does not. How do you act on the temperature after compression? Wiki suggests the intake valve closes late.

Or is the engine built differently? Model plane two-stroke semi-Diesels have a glow plug and compress the mixture a different way. This wouldn't be autoignition. While the maximum compression ratio is less good, at least it works.

-

Understanding the RSM (reluctance synchronous machine)

Enthalpy replied to CasualKilla's topic in Engineering

So you kept some iron below the copper bars? The patent and the pictures from ABB (which are heavily edited...) show thin iron bridges at several locations, supposedly because two locations wouldn't prevent bending. Not good for the torque, but they must be necessary.

-

Oxides of varied metals combined to lessen the mean density would be a good reason, but ore like uraninite is UO2 pure enough for a density of 10.6 https://en.wikipedia.org/wiki/Uraninite that is, denser than the iron+nickel. So I can still imagine that uranium compounds are able to sink deep in Earth's core.

-

All your "arguments" would also apply against humans melting iron. Since we do have steel for quite some time, your arguments are wrong.

-

The difference is faint. Tunneling capability means that an alpha can escape the parent nucleus even if it misses some energy to do so. Consequently, nuclei that would be at the limit decay immediately, and the ones we observe do have some stability. If alphas don't preexist in heavy nuclei, the description of their emission must be seriously more subtle than their sticking force being tunneled from time to time. At least, it needs to group four baryons properly during the process.

-

They can overlap, and have to overlap in multi-photon processes like air ionization by laser pulses or frequency doubling and tripling.

One should not distinguish a photon "size" nor "duration" from the wave packet. You shouldn't imagine photons as small objects, and especially you shouldn't imagine them as smaller than the wave packet. That would mislead you.

While the photon - just like an electron too - can concentrate into a small pixel if needed, there is no means to tell beforehand that the photon was that small. Any attempt to measure the position or size of the photon during its flight would change the effect at the detector. For instance, for a telescope mirror to work, you need the photon to be reflected over the whole surface. A small photon would give a blurred image.

A better working representation is that the particle has no other property than the wave (that's a basic idea of QM) but can keep some attributes when the wave adapts during an interaction. If a photon propagates from light-years away, it is light-years wide. If it interacts with the mirror, there it has the size of the mirror. If it is detected by an electron in a camera pixel, there it has the size of the electron, that is a few thousand atoms in a semiconductor or sometimes more. This much resembles a wave. What we really need from the particle is that the energy packet is still defined by the frequency, plus a few more attributes, like the charge in the case of an electron.

-

I have nothing against Bernoulli. It's just that because it's so easy to misuse, one should be careful with it.

As already pointed out by the OP, symmetric and flat profiles do lift, planes fly on their back, air has no reason to spend the same time around the upper and lower faces of the foil, and so on and so forth. So while air is faster at the suction face, this does not result from the profile being convex there.

What I wonder about is why misconceptions live for many decades although simple observation and logic disprove them.

----------

For the people who need external advice to form their opinion, and need a reputable source for that, I've found Nasa's webpage again: https://www.grc.nasa.gov/www/k-12/airplane/wrong1.html it has a "next" button at the bottom leading to the next theories, one per page.

-

If shock damping were acceptable for the function of a jack hammer, it would be easy to achieve by many cheap means. But how to break concrete with a soft shock?

-

Besides superconductors, electromagnets are limited to the saturation induction of the core, about 2.1T with plain soft iron. Only the size limits the force, and the only limit on the size is your budget, as Swansont pointed out. Electricity consumption and cooling aren't a huge issue.
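
To put a number on "only the size limits the force": a rough sketch using the usual magnetic pressure B²/(2µ0) on a pole face. This formula is my addition, not from the thread, and it assumes an idealized case with a uniform induction over a flat pole face, no air gap and no leakage. The function names are made up.

```python
import math

MU_0 = 4e-7 * math.pi  # vacuum permeability in H/m (approximate value)


def pull_pressure_pa(b_tesla):
    """Magnetic pressure on a pole face for a uniform induction B (idealized)."""
    return b_tesla ** 2 / (2 * MU_0)


def pull_force_n(b_tesla, pole_area_m2):
    """Pull force: pressure times pole area, same idealization."""
    return pull_pressure_pa(b_tesla) * pole_area_m2


B_SAT = 2.1  # saturation induction of plain soft iron, from the post
print(f"{pull_pressure_pa(B_SAT) / 1e6:.2f} MPa")
print(f"{pull_force_n(B_SAT, 0.01) / 1e3:.1f} kN on a 10cm*10cm pole face")
```

The pressure is capped by the saturation, so the force grows only with the pole area.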

-

Why a reduction of high frequencies at 30m? Seawater attenuates higher frequencies, but it's a matter of distance, not depth.

Why 3m? If the membrane has 1atm air behind it, it will be destroyed well before 3m depth. If it has water on both sides at equal pressure, the membrane will resist much more than 30m depth.

I'm a little bit more optimistic here. An obstacle that makes the path from the rear about a quarter wave longer already avoids this loss. If the obstacle is smaller, there are losses, but we still hear sound - any loudspeaker designer has to accept heavy losses, as audio quality items are about 1% efficient. Only resonating loudspeakers (buzzers) are more efficient.

Sound velocity resulting from the compressibility limits the amount of medium that moves in phase to 1/4 of the wavelength, and for a plane wave, the pressure corresponds to the mass of about 1/6 wavelength. Moreover, this pressure is in phase with the displacement speed, rather than 90° in advance as mere inertia would tell.

Which doesn't solve the worry of loudspeaker mass, sure. As some wall or cabinet is needed (with something that evens out the fore and aft static pressures), it shall bring inertia too.

That's one reason why underwater acoustics engineers like piezo materials. These change not only their shape but also their volume with the electric field, so they can (and often do) operate without a cabinet. Since water's characteristic impedance isn't so much different from a solid's (especially Pvdf), the sound passes rather well from the solid to water without any membrane nor adapter. But adapters are possible, sure - they must just resist the pressure.
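
How big is "a quarter wave longer" in practice? A quick sketch; the sound speeds are round values I assume (about 1500m/s in seawater, 343m/s in air), and the helper name is made up.

```python
def quarter_wave_m(frequency_hz, sound_speed_m_s):
    """Quarter of the wavelength, in metres."""
    return sound_speed_m_s / frequency_hz / 4


C_WATER = 1500.0  # assumed round value for seawater, m/s
C_AIR = 343.0     # assumed value for air at room temperature, m/s

for f in (100, 1000, 10000):
    print(f"{f} Hz: {quarter_wave_m(f, C_WATER):.3f} m in water, "
          f"{quarter_wave_m(f, C_AIR):.3f} m in air")
```

For the same frequency, the extra path needed in water is more than four times the one in air, which is why the obstacle has to be wider there.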

-

Understanding the RSM (reluctance synchronous machine)

Enthalpy replied to CasualKilla's topic in Engineering

Nice to read you again!

Where do your laminations hold together? Right below the aluminium bars? Usual designs have small bridges across the so-called flux barriers.

I haven't invested the necessary time in this motor. It can be that, at the limited stator current usual for kW motors, the induction can't cross the flux barriers, and then more barriers create more induction transitions and a bigger torque. I attempted it in the MW range years ago (for a slow wind turbine generator) but there, the full pole distance was needed to limit the flux leaks, so splitting this distance brought nothing.

Without conduction losses at the rotor, the efficiency may improve, and also, it eases the more difficult rotor cooling.

-

Would you tell us why you believe that no metal has ever been heated to 1000 or 2000°C, and by the way, why this should need nonexistent materials, and while we're at it, how do you imagine the Pidgeon process makes the magnesium we use?

Copper uses electrolysis, fine. The ore contains many metals, some of which possibly evaporate about as easily as copper. Here we have tin and silver, which distillation separates easily.

-

Important experiment request: Distant single photon

Enthalpy replied to Theoretical's topic in Speculations

Hi Theoretical, to reduce the number of photons transmitted to the detector, you can emit short pulses. Typical Leds have about 50ns response time, a Vcsel rather 50ps.

In addition to a small hole, or several holes in series, you can also have a light absorber on the path. Some examples there http://www.scienceforums.net/topic/84660-quantum-entanglement-need-explanation/page-2#entry822805 These are more practical than increasing the distance. Possibly a simple matter of wording; more generally than "distance" it would be the "attenuation".

To detect single optical photons, you need not only a very sensitive detector (like a photomultiplier). It also takes a very dark environment and excellent shielding from the source to the detector.

Now, I have some doubts about detecting individual photons at radiofrequencies. The photon noise gets predominant above many 10GHz with very quiet receivers, and radioastronomers have superconducting detectors at 300GHz that observe individual photons. It's seriously difficult and, after big efforts, has been achieved only at the highest frequencies. "Antennas" from John D. Kraus has a chapter about background noise and antenna noise.

Would you tell us more about your experiment: frequency, sensitivity...? I suspect a numerical mistake somewhere.

-

Fully agree with you Asimov. The explanation based on different length is wrong from beginning to end and goes against most observations, wing profiles and so on. To this, you could add that for the air to push the plane upwards, the plane has to push the air downwards. This is more basic, and safer to apply than for instance Bernoulli, which can become tricky. Be reassured that more people have understood it. The real question is why so many people still propagate an obvious nonsense, but hey, that's common in science too. Nasa has a full webpage to tell exactly that: not a path difference but air deflection.

-

Passing sound from a solid to water is waaaaay easier than to air because the hard impedance mismatch vanishes. Then, a standard commercial loudspeaker is optimized for air operation, for instance with a large thin membrane, which fits water less well. You could try to use a tweeter as medium or boomer in water. Just put a stiff panel around it, wide but not closed. Take a stethoscope to check the result. And if your goal is to inject sound in water, a piece of piezo material suffices, no need for a fragile loudspeaker.
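
To put rough numbers on the impedance mismatch: a sketch with the textbook intensity transmission between two media at normal incidence, T = 4*Z1*Z2/(Z1+Z2)², where Z = density * sound speed. The formula and the material values are my assumptions (round figures, Pvdf as the example solid), and it treats both media as thick with plane waves, which a real transducer is not.

```python
def impedance(density_kg_m3, sound_speed_m_s):
    """Characteristic acoustic impedance Z = rho * c, in Pa*s/m."""
    return density_kg_m3 * sound_speed_m_s


def intensity_transmission(z1, z2):
    """Fraction of the intensity crossing a flat interface at normal incidence."""
    return 4 * z1 * z2 / (z1 + z2) ** 2


# Assumed round material values, for illustration only
Z_PVDF = impedance(1780.0, 2200.0)
Z_WATER = impedance(1000.0, 1480.0)
Z_AIR = impedance(1.2, 343.0)

t_water = intensity_transmission(Z_PVDF, Z_WATER)
t_air = intensity_transmission(Z_PVDF, Z_AIR)
print(f"Pvdf to water: {t_water:.2f}")
print(f"Pvdf to air:   {t_air:.1e}")
```

With these values most of the intensity passes from the solid into water, but well under a thousandth into air.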

-

Several hours would be just impossible. Someone having already used such a mixer for epoxy glue thinks "unusable" when he sees it again, and decides either to buy a different product or to mix it with a bit of wood. That's why I say, if you improve it, write it BIG on the package.

-

Why aren't electric cars more efficient in charging?

Enthalpy replied to Elite Engineer's topic in Engineering

If you live in a country where electric cars are imported, you can hear and read very easily that they have only drawbacks. But for instance in California they sell, and in no small numbers. Would there be so many stupid buyers?

Cheap charging is one argument in favour of electric cars. You can make the estimate yourself. Induction might have some losses, but presently it's done through a cable - very efficient, only lengthy.

-

The depth and gravitational potential of our Earth would suffice to separate elements and isotopes based on their density, which the height of a bottle doesn't suffice for. It's more a question of available time and diffusion speed. To my (wrong?) understanding, we explain alumina silicates in the mantle and iron at the core just by the difference of density. Why shouldn't that apply to uranium oxide?

-

Distillation costs little energy and it separates compounds (here the metals) due to the difference of vapour pressure. Nothing comparable with plasma, especially not the expense. In theory, between electrodes of identical composition, no voltage would be dropped - but chemistry is not theory. You hardly get below half a volt across a cell, and this is already a lot of energy, especially if compared with heat. 300K equals only 26meV. Even better, heat can be recycled in part.
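
The comparison between half a volt and heat can be checked from the constants. A small sketch: the thermal energy is taken as k*T, and an electron crossing 0.5V gains 0.5eV. The constant values are the exact SI ones; the variable names are mine.

```python
K_BOLTZMANN = 1.380649e-23   # J/K, exact SI value
E_CHARGE = 1.602176634e-19   # C, exact SI value


def thermal_energy_mev(temperature_k):
    """k*T expressed in milli-electronvolts."""
    return K_BOLTZMANN * temperature_k / E_CHARGE * 1e3


kt_300 = thermal_energy_mev(300.0)
cell_drop_mev = 0.5 * 1e3  # energy per electron across half a volt, in meV

print(f"kT at 300K: {kt_300:.2f} meV")
print(f"0.5V per electron is about {cell_drop_mev / kt_300:.0f} times kT")
```

So half a volt per electron is roughly twenty times the thermal energy at room temperature.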

-

Proton decay was fashionable at a time when the proton was considered a fundamental particle. Now that it is seen as a complex assembly of several particles, it would take many simultaneous reactions for it to decay into, say, two elementary particles. So is the decay still so fashionable?