Everything posted by Enthalpy

-

From a QM point of view, particles are necessary, but not for many properties. They are needed not necessarily as points, but so that we can count them, and as a convenient way to say that when the extension of a particle changes, some properties stay conserved, for instance the charge. Also, to avoid that a particle repels itself.

-

Was mw4rusty still awaiting an answer...? The size of an electron has no fixed answer because, as far as we know, it's an elementary particle. You can observe it with a particle of any energy accessible to us, hence in a volume as small as we can probe, and it still behaves like one single particle. So depending on which interaction is considered, different sizes can be defined, and all of them are arbitrary.

-

1. Continuous
2. Soft magnetic versus hard magnetic. Transformer laminations are soft magnetic. Some more expensive materials are even softer, like Permalloy.

As much as possible: this is called a permanent magnet. The usual champion presently is neodymium (in fact Nd-Fe-B) but precisely because it's hard magnetic, it's (really) difficult to magnetize, typically by capacitor discharge in the megawatt region. You buy them already made.

-

There are some less expensive solutions than a track of permanent magnets. One hoverboard design has permanent magnets rotating quickly to create a repulsive force over a conductive track. However, that has little to do with what the company describes on its website: laser, ionisation, or hovering over a street. And what size is the Mattel thing? On the pictures it looks very small, possibly for a doll. Neodymium magnets can be bought at many places, for instance over eBay. Just type "neodymium" there and choose the proper dimensions. The material can vary a bit but this isn't so important.

-

I did write that I'm not convinced about lithophile uranium - maybe you read it from me. http://www.scienceforums.net/topic/83245-a-question-on-radioactive-decay/#entry806705 The usual classification seems to consider that metals which reduce iron oxides will themselves form oxides that are lighter than metallic iron, hence belong to the mantle. Though, I note that most uranium oxides are denser than metallic iron, and that when surrounded by much molten metallic iron, uranium oxides will also lose their oxygen, just because of the abundances. While I agree on this with one sidestream author, I do not support a priori his claim of a uranium reactor at Earth's center. And, well, the composition of the core results from models only. The only observations are seismic velocities measured across the Globe - not a harsh constraint on the models. I wish we soon had neutrino observatories that locate the radioactivity in our Earth. Please take this with much caution, since I know nearly nothing about geophysics.

-

Solar sails have been used - not very frequently... Most were demonstrators just trying to unfurl the huge area needed to produce a significant thrust, and most failed to unfurl. Presently, IKAROS is propelled by its solar sail, though its size and mass still make it a demonstrator. More ideas are needed, first to unfurl reliably a sail as huge and light as we can presently produce http://www.scienceforums.net/topic/78265-solar-sails-bits-and-pieces/ and then to produce sails even bigger and lighter than today's 7.5µm thin polymer films. Mankind needs this technology for many missions impossible or inconvenient by other means: fly over the poles of the Sun, go near it, change the orbit radius and inclination several times, put a payload in Mercury orbit... Presently, this effort is too small. One very frequent present use isn't exactly propulsion, but serves to keep the orientation of geosynchronous satellites. Two tilted panels at the ends of the solar arrays give for free a torque that passively keeps the panels towards the Sun, and the panels' motors at the satellite then align the body towards the Earth. This elegant method saves much propellant otherwise used to keep the orientation.

-

Centrifuge?

-

Nobody talks about plasma and electric fields. Sensei, if you have such bizarre ideas, please don't suggest anybody else brought them. I propose distillation because evaporating a metal takes less energy than most chemical reactions do, which separate atoms too and often ionize them, and involve more reactants. In addition, much of this heat can be recycled (in the preheating of the input material), and heat is a cheap form of energy.

-

So removing stains would just mean dilute them with the proper liquid?

-

Car manufacturers put 100kW fuel cells in their products, so they cost less than 8$/kW. But they run on hydrogen, not methane.

-

Is the alleged link between aluminium and neurologic diseases still considered? One study believed it had found a link, but did I read that subsequent studies didn't, so that the theory would be outdated?

-

Yes it does. A photon in glass is slower than in vacuum. You can really forget that explanation from the bizarre book and erase it from memory. It is disproven by observation and it results from misconceptions about QM.

Observation: Materials have a permittivity even at DC, and when no process slows down the permittivity, the speed of EM waves results directly from this permittivity. For many materials, the MHz and GHz index is exactly the square root of the DC permittivity; for silicon it's accurate up to the near infrared. No delay is involved here. If "the atoms" were briefly excited, we would measure a photocurrent when light passes through glass.

Misconceptions:

An interaction, for instance photon with electron, does not happen at a point. It happens over all the volume common to both particles, which can be the volume of an atom, or of many atoms in a solid. It is even computed that way.

There is no vacuum between the atoms of a crystal. The valence electrons take all the volume.

The valence electrons spread over many atoms in a solid. The always filled states are as big as the solid itself; near the Fermi level, we may define an electron extension differently, from its mean thermal energy for instance, and then it spans thousands of atoms, and so does the interaction.

Photons spread over many atomic distances, so their propagation "between the atoms" is nonsense.

The absorption or emission of a photon is a slow process. If it takes 1ns for instance (the transition duration between the states, also the light's coherence duration, and related to the line bandwidth) then the photon spans 2 billion atoms, so the flight time between two atoms makes little sense.

One cannot measure a photon propagation time that is much smaller than one light period, and far less so if the photon has a limited bandwidth as usual.

Distinguishing light from photons is a bad idea. All the information, everything we can observe, is in the wavefunction.

This is not just a matter of concepts. In semiconductors, quantum wells and quantum dots are accurately designed and computed with electrons spanning many atoms, and the energy levels match the observed optical properties. Photon absorption and emission happen over this whole extension, generally tens of atoms thick, because an electron more localized after the interaction would give completely different results. Even the direction of polarization of the photon fits the interference of the electron wavefunctions of both eigenstates - it wouldn't be the case with an interaction limited to one atom or to a point.

Energy is conserved when all light enters a transparent material (say, with an antireflective coating). A slower speed doesn't mean less energy per photon, which depends just on the frequency. In case of doubt: don't compare photons with bullets whose kinetic energy relies on mass and speed. The energy of light corresponds to electric and magnetic fields; in a medium with permittivity, the electric field is smaller but the polarization bigger, resulting in the same energy.

At a slower speed, the same number of photons per second results in more photons per metre. No worry with that, except that photons normally aren't counted on their way. No accumulation either. Anyway, photons interact very little under normal circumstances, so they can accumulate without consequence.
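The orders of magnitude in this post can be checked with a few lines. This is only a rough sketch: the 0.15nm spacing between atoms and the relative permittivity of 11.7 for silicon are values assumed here for illustration, and the function names are made up.

```python
# Rough check of "a 1 ns photon spans about 2 billion atoms" and of
# "index = square root of the permittivity". Illustrative values only.
C = 299_792_458.0  # speed of light in vacuum, m/s


def coherence_length(duration_s, index=1.0):
    """Length of a wave train lasting duration_s in a medium of given index."""
    return C * duration_s / index


def atoms_spanned(duration_s, atom_spacing_m, index=1.0):
    """Number of atomic distances covered by that wave train."""
    return coherence_length(duration_s, index) / atom_spacing_m


def index_from_permittivity(eps_r):
    """Refractive index of a non-magnetic medium with relative permittivity eps_r."""
    return eps_r ** 0.5


# Assumed: 1 ns transition duration, 0.15 nm between atoms.
length = coherence_length(1e-9)
n_atoms = atoms_spanned(1e-9, 0.15e-9)
# Assumed: DC relative permittivity of silicon around 11.7.
n_si = index_from_permittivity(11.7)

print(f"{length:.2f} m, {n_atoms:.1e} atoms, n(Si) ~ {n_si:.2f}")
```

With these assumptions the wave train is some 0.3m long, which is why a "flight time between two atoms" has no meaning at that scale.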

-

electron electron collider

Enthalpy replied to petrushka.googol's topic in Modern and Theoretical Physics

To my very limited understanding, at TeV energies the nature of the colliding particles has little influence on the produced particles. They must just collide. So many particles are produced in a collision that the conservation rules have little influence on individual products. I'm not quite convinced that the initial collisions produce many neutrinos. The processes leading to neutrinos tend to be slow. At least the experiments at the LHC use intermediate beams (of muons?) that take tens of metres to decay partially and then produce neutrinos. A very strong constraint is synchrotron radiation, which makes particles lose power when they turn, more so for lighter particles like electrons. This is why the LHC uses protons or atomic nuclei. Though, protons and their internal structure reportedly make the analysis of the collisions more difficult, and elementary particles like electrons would bring finer results. One research direction would go back to straight electron accelerators, provided the energy can be given within a few km. Present technology with superconducting cavities doesn't permit it, so designers hope to use the electric field of a strong light pulse to accelerate the electrons. µm-sized patterns of transparent material could give a longitudinal component to the electric field, although in vacuum it's perpendicular to the propagation direction. Sums of tilted and slowed beams maybe, if accurate enough.
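To put a number on "more so with lighter electrons": for ultra-relativistic particles of equal energy on the same bending radius, the textbook scaling is that the radiated power goes as the inverse fourth power of the rest mass. The sketch below only applies this scaling; it is not an accelerator design formula, and the function name is made up.

```python
# Relative synchrotron loss at equal energy and equal bending radius.
# Uses the standard scaling P ~ gamma^4 ~ (E / m)^4, nothing more.
M_ELECTRON_MEV = 0.51099895   # electron rest energy, MeV
M_PROTON_MEV = 938.27208816   # proton rest energy, MeV


def relative_synchrotron_loss(mass_light, mass_heavy):
    """How many times more power the lighter particle radiates
    than the heavier one, same energy, same bending radius."""
    return (mass_heavy / mass_light) ** 4


ratio = relative_synchrotron_loss(M_ELECTRON_MEV, M_PROTON_MEV)
print(f"electron / proton loss ratio ~ {ratio:.2e}")
```

The ratio is around 10^13, which is the reason a circular machine at LHC energies is out of reach for electrons.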

Quarks don't exist alone, so would they be expected as a decay product?

-

Neutron stars and black holes

Enthalpy replied to petrushka.googol's topic in Astronomy and Cosmology

You can understand the production of neutrons very much like a chemical equilibrium influenced by the density of the species. The process is electron capture versus beta emission, and a higher density favours the neutron over a proton plus an electron because these are together twice as many. Or equivalently, a higher density favours the reactions that rely on the encounter of many reactants. Do I remember correctly that some quark stars were allegedly observed, or at least a bang fitting their model of formation? How credible were these claims?

Alternative explanations would have existed, for instance if the small objects that create most craters concentrate more in the ecliptic plane than the bigger, observed ones do. Though, such questions have been made obsolete by images of Pluto that show smooth glaciers partly covering cratered terrains.

-

Energy creates gravitation too, so "massless" is a dangerous idea. If the temperature of said vacuum is for instance that of a black body, say at 2.7K, then the microwave power passing through a surface is known (as well as the energy contained in a volume). Better than 0.5*L*I², which doesn't apply easily here (the currents are polarization rather than conduction), the Poynting vector gives a mean value for the electric and magnetic fields. 1m² of black body at 2.7K radiates 3µW and receives as much from its surroundings. This, and vacuum's 377 ohm impedance, makes an average E=34mV/m spread over hundreds of GHz, H=90µA/m and B=0.1nT.
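These figures can be reproduced in a few lines. As in the post, the radiated flux from the Stefan-Boltzmann law is simply taken as the magnitude of the Poynting vector, S = E²/Z0; this is an order-of-magnitude sketch, and the function name is made up.

```python
import math

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W/(m^2 K^4)
Z0 = 376.730313668       # impedance of free space, ohm
MU0 = 4e-7 * math.pi     # vacuum permeability, H/m


def blackbody_fields(temperature_k):
    """Return (flux W/m^2, E in V/m, H in A/m, B in T) for a black body,
    taking the radiated flux as the Poynting vector magnitude."""
    flux = SIGMA * temperature_k ** 4
    e_field = math.sqrt(flux * Z0)   # from S = E^2 / Z0
    h_field = e_field / Z0           # from E = Z0 * H
    b_field = MU0 * h_field
    return flux, e_field, h_field, b_field


flux, e_field, h_field, b_field = blackbody_fields(2.7)
print(f"{flux * 1e6:.1f} uW/m2, E = {e_field * 1e3:.0f} mV/m, "
      f"H = {h_field * 1e6:.0f} uA/m, B = {b_field * 1e9:.2f} nT")
```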

-

Photon-phonon interactions are daily life in semiconductors, especially the ones (GaAs and many more) where some phonons are polar. Some phonons there also have frequencies in the IR spectrum. Even with silicon, temperature is commonly measured from a distance by comparing the Stokes and anti-Stokes intensities of Raman scattering. Due to the slower sound propagation, a few 100MHz achieve acoustic wavelengths similar to light, and this makes it possible to build the equivalent of a diffraction grating, whose pitch determines a diffraction direction. The local index depends on the pressure there. Carbon microphones rely on contact between carbon grains. The tiny contacts concentrate the force, and some phenomena (tunnel, thickness of superficial insulators) must be more sensitive to the force than conductivity is. A recent research paper describes a piezo antenna. I suppose that the mechanical resonance lets more charges move with a bigger displacement. Anyway, piezo materials are commonly used from <20Hz to >>1GHz in electric circuitry, which is an interaction with photons. Interaction with free photons as in that antenna looks newer. QM has no direct answer to "photon-phonon interaction". Someone can try to model the behaviour of a given material like GaAs or quartz or BaTiO3, and will naturally do it within QM. More generally, QM itself doesn't tell "a particle behaves like this". Additional modelling is needed; for instance that an electron is sensitive to electric fields due to its charge, but sometimes the magnetic field matters due to the magnetic moment, sometimes gravity too due to the mass, and maybe a fifth force exists that we don't know yet, so "the electron's Hamiltonian" is a misleading expression. Same for photons and phonons.
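For the acoustic diffraction grating mentioned above, the pitch is just the acoustic wavelength, and the Bragg condition sin(theta) = lambda / (2 * pitch) gives the diffraction direction. The numbers below (4000m/s sound speed, 400MHz, 633nm light taken inside the medium) are assumed for illustration and describe no particular device.

```python
import math


def acoustic_wavelength(sound_speed_m_s, frequency_hz):
    """Pitch of the grating written by a sound wave."""
    return sound_speed_m_s / frequency_hz


def bragg_angle_rad(optical_wavelength_m, grating_pitch_m):
    """Bragg angle from sin(theta) = lambda / (2 * pitch).
    The optical wavelength is the one inside the medium."""
    return math.asin(optical_wavelength_m / (2.0 * grating_pitch_m))


# Assumed illustrative values, not taken from the post.
pitch = acoustic_wavelength(4000.0, 400e6)
theta_deg = math.degrees(bragg_angle_rad(633e-9, pitch))
print(f"pitch = {pitch * 1e6:.1f} um, Bragg angle = {theta_deg:.2f} deg")
```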

-

Same frequency as the strain, I assume too, especially for such a small effect. The link doesn't suggest a reason for the cutoff frequency, but
- Conductivity from moisture or water is expected
- Water might also dissolve some piezo salts

-

Could biofuel, hydrogen, electric or solar replace jet fuel?

Enthalpy replied to nec209's topic in Engineering

The F-111 has a variable-sweep wing that looks really efficient, achieving L/D=15.8 at best subsonic speed. Maybe the mass and complexity are acceptable for an airliner. For comparison, present M=0.85 airliners achieve only L/D~22 at their optimum cruise; Concorde offers just 11.5 @M0.95 and 7.1 @M2.0 and is bad at take-off (no abort possible) and landing. While a fixed swept wing may suffice for M1.3, the variable sweep must bring a lot for M2.0. I've put there some figures about a hydrogen-powered M1.3 airliner http://www.scienceforums.net/topic/73798-quick-electric-machines/#entry878522 and it looks neither feasible now nor interesting - as opposed to slower planes.

Epoxy, used occasionally as a glue and bought from the tinkerer's supermarket (where professionals buy too here). So the epoxy's and mixer's manufacturers make money from many supermarket customers. Though, every user's first thought about these mixers is that the paste is very hard to push through, and that the mixers retain much of the components, which are hard to clean, so an improvement on the pressure and on the dead volume would be very welcome. Do write it on the package, because users are repelled by the known drawbacks and prefer to mix by other means.

-

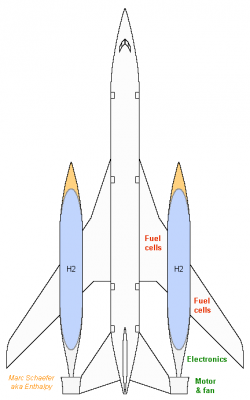

Hydrogen and fuel cells bring less to a supersonic airliner. Fuel cells are too heavy in 2015 for this power, and hydrogen volume creates more drag at high speed. This example is to cruise at Mach 1.3 - but M=0.85 over the continents. Despite the swept wing, I've kept the lift-to-drag ratio L/D=9.3 from Concorde at M=1.3 and added Cd=0.23 drag from two D=4m liquid hydrogen tanks, so L/D=7.6.

The airliner with D=5m body shall weigh 255t with tanks half-full, needing 329kN and 127MW for 387m/s at 17000m. The 85%, 98% and 99% efficient fan, motor (speed makes it easy) and electronics take together 154MWe from the fuel cells. Present car cells weigh 100kg/100kW, so the plane's 154t fuel cells are prohibitive - this is hence prospective. >3 times lighter fuel cells are needed; they improve quickly and have further potential, but through a big effort.

The drawn tanks contain 40t together, from which 60% efficient fuel cells permit to fly 7300km at M1.3. Hydrogen needs less mass than kerosene, but the volume wouldn't let the plane carry so much more at high speed. If pyrolyzed to 2*H2, CH4 would cost slightly less than kerosene for the same flight presently; since propane and butane are still flared at oil wells, they too could provide much hydrogen, in addition to propene for plastics and butene for alkylates.

Design options and variants: The tanks and cells spread the weight nicely over the wing. The cells can move fore and aft to balance the airframe. The tanks need compartments. Between the three hulls is a nice location for stacked wings whose interaction minimizes the wave drag. The F-111's variable-sweep wing would bring low drag at all speeds and improve take-off and landing.

In contrast to slower aircraft and helicopters that can fly right now with hydrogen and fuel cells, reduce their noise, gain autonomy and range, operate promptly, save taxiing fuel, supersonic aircraft need more power and must await lighter fuel cells.
Marc Schaefer, aka Enthalpy
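The power budget above follows from simple relations: thrust = weight / (L/D), power = thrust * speed, then division by the chain of efficiencies. Here is a minimal sketch of that arithmetic with the figures of the post. The hydrogen heating value of 120MJ/kg is an assumption added here (the post doesn't state it), and with it the range comes out near 7200km, close to the 7300km quoted. The function names are made up.

```python
G = 9.81  # gravity, m/s^2


def cruise_budget(mass_kg, lift_to_drag, speed_m_s, efficiencies):
    """Return (thrust N, propulsive power W, electric power W) in level cruise."""
    thrust_n = mass_kg * G / lift_to_drag
    thrust_power_w = thrust_n * speed_m_s
    chain = 1.0
    for eta in efficiencies:   # fan, motor, electronics
        chain *= eta
    return thrust_n, thrust_power_w, thrust_power_w / chain


def cruise_range_m(h2_mass_kg, heating_value_j_kg, cell_efficiency,
                   electric_power_w, speed_m_s):
    """Distance flown at constant power until the hydrogen is used up."""
    electric_energy_j = h2_mass_kg * heating_value_j_kg * cell_efficiency
    return electric_energy_j / electric_power_w * speed_m_s


# Figures from the post: 255 t, L/D = 7.6, 387 m/s, 85% / 98% / 99%.
thrust, p_thrust, p_elec = cruise_budget(255e3, 7.6, 387.0, (0.85, 0.98, 0.99))
# Car fuel cells at 100 kg per 100 kW, i.e. 1 kg per kW.
cells_mass_kg = p_elec / 1e3 * 1.0
# Assumed: lower heating value of hydrogen about 120 MJ/kg.
range_m = cruise_range_m(40e3, 120e6, 0.60, p_elec, 387.0)

print(f"{thrust / 1e3:.0f} kN, {p_thrust / 1e6:.0f} MW, {p_elec / 1e6:.0f} MWe, "
      f"{cells_mass_kg / 1e3:.0f} t of cells, {range_m / 1e3:.0f} km")
```

The sketch ignores climb, reserves and the mass lost as hydrogen is consumed, so it only checks the cruise figures.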

-

Could biofuel, hydrogen, electric or solar replace jet fuel?

Enthalpy replied to nec209's topic in Engineering

The Concorde flew at Mach 2, which needs much thrust. A better speed for economy is the soft spot around Mach 1.3, where the necessary thrust is only a little stronger than at Mach 1. Mach 1 needs much more thrust (which converts into energy for the same distance) than Mach 0.7, which is the present-day soft spot used by every long-haul airliner. Biofuels cheaper than kerosene are presently wishful thinking, alas. When taxed as little as food, biofuels can compete with gasoline taxed at 400%, but kerosene isn't taxed or very little. If no-one has found a solution for cars, the sub-tiny prospective market of supersonic airliners has zero chance to do it better. Better engines... Obviously this is a key, but how? Manufacturers invest huge money in better Mach 0.7 engines; hypothetical supersonic airliners can't invest that amount. It will remain an adaptation of engines for fighter jets - which did improve since the Concorde for sure. But not only does more speed need more thrust: supersonic flight demands other choices (delta wing instead of wide wingspan, low-bypass engines, narrow body...) that are inherently worse. The L/D drops from 30 to 5 or less, ouch. I'll have a look at fuel cells (the weak point), hydrogen and electric motors - some day. The other killer of supersonic airliners is the sonic boom. It prevents supersonic flight over most continents (except Siberia, Antarctica and a few more places). If speeding only over the oceans, the plane would be slow on all routes via the Arabian Peninsula, Hong Kong - an awful lot of them. (Much) research was made to reduce the boom but didn't bring (by far) the necessary amount of improvement. One good point is that a plane made for Mach 1.3 can be designed to fly at Mach 0.7 more or less decently, while Mach 2 is less compatible with subsonic performance. If decent Mach 0.7 flight is necessary (I fear so) it may demand a variable geometry wing, nothing easy nor lightweight nor cheap.

It would be most useful not in the cited construction, marine and manufacturing applications, but for the one-shot users. They waste product in the mixer and get exhausted by hand-pumping the components through the mixer. We had some such mixers at an engineering subcontractor company where we produced in single unit amounts, and mixers requiring less pressure would have been very useful.

-

Why is the Stirling Engine Not More Widely Used?

Enthalpy replied to jimmydasaint's topic in Engineering

Then the true strength of the Stirling is to choose a really bad competitor. Because my Citroën CX is also from 1987, also has a (turbo)-Diesel with also nearly 150hp, but it needs only a 2.4L 4-cyl to achieve the power instead of a 6.2L V8, and at 160km/h (100mph) it consumes 6L/100km or 0.026gal/mile or 39mpg, not 6.3mpg.
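For anyone wanting to redo the unit conversions, here is a small sketch. It assumes US gallons, which the post doesn't specify; the function names are made up.

```python
KM_PER_MILE = 1.609344
LITRE_PER_US_GALLON = 3.785411784   # assumed: US gallon, not imperial


def l_per_100km_to_mpg(l_per_100km):
    """Convert a consumption in L/100km to miles per US gallon."""
    miles = 100.0 / KM_PER_MILE
    gallons = l_per_100km / LITRE_PER_US_GALLON
    return miles / gallons


def mpg_to_l_per_100km(mpg):
    """Convert miles per US gallon to L/100km."""
    return 100.0 / KM_PER_MILE * LITRE_PER_US_GALLON / mpg


cx_mpg = l_per_100km_to_mpg(6.0)          # the Diesel car at 160 km/h
cx_gal_per_mile = 1.0 / cx_mpg
other_l_per_100km = mpg_to_l_per_100km(6.3)  # the 6.3 mpg competitor

print(f"{cx_mpg:.1f} mpg, {cx_gal_per_mile:.3f} gal/mile, "
      f"6.3 mpg = {other_l_per_100km:.0f} L/100km")
```

So 6.3mpg corresponds to some 37L/100km, about six times the consumption of the Diesel car.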