Everything posted by Enthalpy

-

When two planets collide what is their terminal velocity?

Enthalpy replied to Robittybob1's topic in Classical Physics

About the initial speed difference of the planets: only objects close enough to the central mass orbit in the same plane, and only if the system is old enough. Around Jupiter and Saturn, the outer moons have random orbit inclinations, half of them being retrograde. Around our Sun, the objects of the Oort cloud make a spherical shell. And in our Galaxy, the globular clusters are outside the plane. Objects out of the plane, or with very elliptic orbits, can have speeds very different from Earth's, in magnitude or direction. We see it when a meteorite enters our atmosphere or when a near-Earth object passes by.

Two half-Earths falling against one another: the Sun makes it complicated. A trailing, accelerated planet will pass over the other; a leading, braked one will pass below. But let's imagine planets far from any star, since such planets have been observed.

Tidal forces will break both planets before they collide, since these forces suffice to make a single planet spherical. But let's imagine a superglue.

The attraction force between spheres close to one another is stronger than the distance between the centers tells, because R⁻² is... concave or convex, I don't care - anyway, it increases more steeply than it decreases, so the sum over both volumes exceeds what concentrated masses tell. But let's neglect this as well.

So I take M and R for each half-Earth, then the gravitational energy at contact is GM²/(2R) (put signs if you like) and spreads over M+M as kinetic energies, so each speed is V² = GM/(2R). To compare, a small object m falling on one half-Earth M would convert GMm/R into V² = 2GM/R. So two identical planets (whether half-Earths or not) get, relative to one another (2*V), a bit more speed (due to mass spread over a volume) than an object falling on one planet. -
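To put numbers on the half-Earth estimate, here is a minimal sketch using the point-mass formulas of the post. The constants (G, Earth's mass and mean density) and the assumption that a half-Earth keeps Earth's mean density are mine, not part of the original post.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # kg
RHO_EARTH = 5514.0   # kg/m^3, assumed unchanged for a half-Earth

def sphere_radius(mass, density):
    """Radius of a homogeneous sphere of given mass and density."""
    return (3.0 * mass / (4.0 * math.pi * density)) ** (1.0 / 3.0)

def contact_speed_each(mass, radius):
    """Speed of each of two identical bodies at contact: V^2 = G*M/(2*R)."""
    return math.sqrt(G * mass / (2.0 * radius))

def impact_speed_small_object(mass, radius):
    """Speed of a small object falling from far away: V^2 = 2*G*M/R."""
    return math.sqrt(2.0 * G * mass / radius)

m_half = M_EARTH / 2.0
r_half = sphere_radius(m_half, RHO_EARTH)
v_each = contact_speed_each(m_half, r_half)
v_relative = 2.0 * v_each
v_small = impact_speed_small_object(m_half, r_half)

print(f"radius of a half-Earth: {r_half / 1e3:.0f} km")
print(f"speed of each half-Earth at contact: {v_each:.0f} m/s")
print(f"relative speed 2*V: {v_relative:.0f} m/s")
print(f"small object falling on one half-Earth: {v_small:.0f} m/s")
```

With concentrated masses, 2*V and the small-object speed come out identical; any difference would come only from the effects neglected above.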

Do electrons radiate from electostatic acceleration?

Enthalpy replied to Lazarus's topic in Classical Physics

Do we have any theory to answer that question? My impression is "no". Models of radiation by accelerated particles hold for a non-gravitational force and a Galilean acceleration. We have no solid theory that includes both electromagnetism and gravitation. Maybe some qualitative argument could tell, like an observer falling together with an electron - no idea. Can experiments tell? My impression is again "no", since the accelerations due to mass attraction are too small. Around an atom, an electron radiating light moves by 100pm at 1PHz, accelerating by 10²¹ m/s² - and the radiated power depends steeply on the acceleration. Maybe miniature black holes could accelerate electrons strongly enough, but we haven't seen any small black hole up to now. Noise produced by the Van Allen belts, as they comprise many particles? The origin of the noise is essentially the magnetic twist of the trajectories, but could we distinguish a low-frequency component due to gravitation? -
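The order of magnitude above can be checked with a short sketch. It assumes a sinusoidal motion for the electron and uses the non-relativistic Larmor formula, which (as said in the post) is only established for non-gravitational acceleration; applying it to 9.81 m/s² is just a size comparison, not a claim about gravity.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m
C_LIGHT = 299_792_458.0      # speed of light, m/s

def oscillation_acceleration(amplitude_m, frequency_hz):
    """Peak acceleration of a sinusoidal motion: a = (2*pi*f)^2 * x."""
    return (2.0 * math.pi * frequency_hz) ** 2 * amplitude_m

def larmor_power(acceleration):
    """Non-relativistic Larmor power of one electron, in watts."""
    return E_CHARGE ** 2 * acceleration ** 2 / (6.0 * math.pi * EPS0 * C_LIGHT ** 3)

a_atom = oscillation_acceleration(100e-12, 1e15)   # 100 pm at 1 PHz
a_gravity = 9.81                                   # assumed comparison value, m/s^2

ratio = larmor_power(a_gravity) / larmor_power(a_atom)
print(f"acceleration around the atom: {a_atom:.2e} m/s^2")
print(f"Larmor power at that acceleration: {larmor_power(a_atom):.2e} W")
print(f"power ratio for 9.81 m/s^2 versus the atomic case: {ratio:.1e}")
```

The power scales with the square of the acceleration, which is why such a small acceleration would give a hopelessly weak signal.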

The takeoff location isn't a very fruitful reference to measure a gain and an efficiency. A first improvement would account for the gravitational energy in addition to the kinetic one, but even that brings few benefits and big drawbacks.

Take a probe heading to the Moon: as Earth rotates, once a day the takeoff location approaches the Moon and once it moves away. Then the probe's kinetic energy (plus gravitational) changes versus the takeoff location without any further propulsive action, and so does the efficiency. And when the probe is near enough to the Moon to feel its attraction, the probe's kinetic plus gravitational energy changes versus the start location too, without propulsive effort.

Or a probe to Jupiter. During one half of the year, Earth's orbital speed adds to the probe's speed; during the other half it subtracts. Again a fluctuation in energy and efficiency.

We could choose the origin of the speed measure differently, but every choice would bring bizarre results and interpretations. Earth's centre, Sun's centre... avoid some drawbacks but not always, say if a probe orbits the Moon.

As a consequence, mission planners are not interested in efficiency. They look primarily at speed variations, because this is what costs fuel, with a direct relation when the speed increment is measured at the time the thrust is made.

Though, energy is conserved, not speed... if measured properly and defining cleanly what it includes and versus what! So while only speed costs, the effect of a push differs radically, depending on when it is applied. To make it simple, a speed increment brings a bigger benefit if it is applied when external factors already result in a big speed - since the kinetic energy depends on the square of the speed - like near a planet or moon that has accelerated the probe or satellite. That is the point of the Oberth effect (Wiki). The Oberth effect and the slingshot effect, or gravitational assistance (Wiki), make a huge difference in both the attained speed and energy. With chemical propulsion, missions through our solar system are possible only thanks to them.

But since the result differs so much for the same expense, mission planners avoid the idea of efficiency. They compare speed increments instead, and not even against an absolute or objective value, which doesn't exist - they only compare different scenarios.

It's an interesting domain. Accessible to simple math if we simplify, but extremely misleading.
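The dependence on the square of the speed can be illustrated with a small sketch. The speeds and the increment are hypothetical round numbers chosen for illustration, and the burn is assumed to be instantaneous and along the direction of flight.

```python
def specific_energy_gain(speed, delta_v):
    """Kinetic energy gained per kg by a prograde burn: v*dv + dv^2/2 (J/kg)."""
    return speed * delta_v + 0.5 * delta_v ** 2

DELTA_V = 1000.0       # hypothetical speed increment, m/s
SLOW_SPEED = 1000.0    # hypothetical speed far from any planet, m/s
FAST_SPEED = 10000.0   # hypothetical speed at a close planetary passage, m/s

gain_slow = specific_energy_gain(SLOW_SPEED, DELTA_V)
gain_fast = specific_energy_gain(FAST_SPEED, DELTA_V)

print(f"same 1000 m/s push at  1 km/s: {gain_slow / 1e6:.2f} MJ/kg")
print(f"same 1000 m/s push at 10 km/s: {gain_fast / 1e6:.2f} MJ/kg")
print(f"ratio: {gain_fast / gain_slow:.1f}")
```

The propellant spent is the same in both cases, which is why an "efficiency" computed from these energies says little about the cost.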

-

And peroxide certainly isn't the first idea to "neutralize" a solution. Before mixing two meta-stable oxygen-rich compounds, are there incompatibilities?

-

There is no fixed rule, and designs vary a lot accordingly. If each stage adds a bit more speed than its own exhaust speed, that's a good start - but only a start.

The idea of stages is to throw away masses that have become useless, especially empty tanks - and also engines too powerful for the remaining mass. Keeping a stage for too long is a waste; exaggerating, its empty mass can be all the mass it can accelerate to an excessive speed objective, and then the stage carries zero payload, zero next stage. Throwing a stage away too early means carrying dry mass too many times - this limit is never approached in existing designs - and, above all, designing too many stages.

The strong trend is to have fewer stages and accept a heavier mass at lift-off, because development is the main cost of a launcher, not mass. The recent Zenit and Falcon go to Gto (geosynchronous transfer orbit) with only two kerosene stages; that's 12,000m/s performance with some 3,300m/s exhaust speed - a serious stretch, but it saves a third stage. Lighter dry masses improve that, to the point that we could go to Leo in one stage if we wanted to. This is one point that has progressed more than the engines and still has potential for improvement.

It is also an argument against solid first stages. As solids provide only 3500m/s at best, one single next stage reaches only Leo, and uncomfortably, Gto never, Gso even less. That is, whether you add a solid first stage or not, you need two liquid stages over it, so you had better avoid the solid altogether. It's an even stronger argument against air-breathing first stages, which stop at 2,000m/s.

So this time mass *8, acceleration at the surface *2, gravitation energy at the surface *4, escape speed *2. 19,000m/s to reach a low orbit including losses.

3 hydrogen stages each provide 5100m/s with 100kg/t dry mass and 4400m/s exhaust speed: each m*3.80
1 kerosene stage provides 3700m/s with 100kg/t dry mass and mean 3200m/s exhaust speed: m*3.78

The lift-off mass is 207 times the payload in low orbit. 7 times worse than Saturn V with better tech. It's only 4 times more than Vulkan
http://www.buran-energia.com/energia/vulcain-vulkan-desc.php
http://www.buran-energia.com/energia/vulcain-vulkan-carac.php
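The closing arithmetic can be checked by adding the speed increments and multiplying the per-stage mass factors exactly as quoted in the post. This small sketch takes the factors 3.80 and 3.78 as given and does not recompute them.

```python
import math

# (propellant, speed increment in m/s, mass factor quoted in the post)
stages = [
    ("hydrogen", 5100.0, 3.80),
    ("hydrogen", 5100.0, 3.80),
    ("hydrogen", 5100.0, 3.80),
    ("kerosene", 3700.0, 3.78),
]

total_delta_v = sum(delta_v for _, delta_v, _ in stages)
liftoff_over_payload = math.prod(factor for _, _, factor in stages)

print(f"total speed increment: {total_delta_v:.0f} m/s")
print(f"lift-off mass / payload: {liftoff_over_payload:.0f}")
```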

-

Efficiency is a tricky idea for rockets... One would need to define a useful work (relative to what object?), a costly energy - and at the end get a figure not very useful or even misleading. Efficiency is, as a consequence, never used in space travel. People think in terms of speed - relative to that object, including this gravitation energy, etc.

-

Oops, I had drifted towards Imatfaal's figures of mass*4. Back to Moontanman's original 4g, assuming the same density as Earth (although pressure compresses stones and metals in planets): radius *4, mass *64, gravitation energy *16 at the surface, escape speed *4.

Scaling Earth's 9500m/s to low orbit, that's now 38,000m/s, horribly much. It exceeds Earth's orbital speed, something that humans have never achieved with their engines.

Even if all stages expel gas at 4500m/s and have zero dry mass, the ratio of initial/final masses is exp(38,000/4,500)=4649 - a bad start, which the dry mass can only make worse.

With dry masses: let's take 7 stages bringing 5429m/s each, with 4560m/s exhaust speed (even for the first one) and 100kg dry mass per ton of propellants. Then each stage is 3.97 times heavier than the next one, and the rocket starts 15,500 times heavier than its payload only to reach the low orbit.

At Earth, existing rockets are ~300 times heavier than their Leo payload. That's 52 times worse, not 4 nor 8, because Tsiolkovski's equation contains an exponential.

My unverifiable bet is that humans would have made it nevertheless.
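A minimal sketch of the exponential in Tsiolkovski's equation, first without dry mass and then with it. The accounting chosen here is an assumption of mine: the dry mass is 10% of the propellant mass and is carried until the stage is dropped. Under that assumption the per-stage factor comes out near 4.3 rather than the 3.97 quoted, so the post apparently uses a somewhat different convention; the exponential growth is the point either way.

```python
import math

def ideal_mass_ratio(delta_v, exhaust_speed):
    """Initial/final mass with zero dry mass: exp(dv / ve)."""
    return math.exp(delta_v / exhaust_speed)

def stage_growth_factor(delta_v, exhaust_speed, dry_per_propellant):
    """Mass of (stage + upper part) divided by the upper part alone.

    Assumed accounting: dry mass = dry_per_propellant * propellant mass,
    kept until the end of the burn.
    """
    r = math.exp(delta_v / exhaust_speed)
    denominator = 1.0 + dry_per_propellant - r * dry_per_propellant
    if denominator <= 0.0:
        raise ValueError("this stage cannot reach the requested speed increment")
    propellant = (r - 1.0) / denominator          # per unit of upper mass
    return 1.0 + (1.0 + dry_per_propellant) * propellant

ideal = ideal_mass_ratio(38000.0, 4500.0)
per_stage = stage_growth_factor(5429.0, 4560.0, 0.1)
seven_stages = per_stage ** 7

print(f"zero dry mass: initial/final = {ideal:.0f}")
print(f"one stage with dry mass: factor {per_stage:.2f}")
print(f"seven such stages: lift-off/payload = {seven_stages:.0f}")
```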

-

Launchers are meant to fly faster than they expel gas, and often achieve it. The best exhaust speed for chemical rockets in service is 4560m/s, but satellites orbit at 7800m/s on the low orbits. Which implies that the rocket continues to accelerate even if the gas it expels moves forward versus the Earth. Which is an excellent thing for the validity of our physics theories, because the rocket should accelerate independently of who observes it, whether he's on Earth or he's flying near the rocket, as for instance astronauts do aboard the space station when a capsule or cargo module joins or leaves them.
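The independence from the observer can be checked with momentum conservation written in two frames. This is a one-dimensional sketch with hypothetical masses (1000 kg of rocket expelling 1 kg of gas in one go); the gas speed is taken relative to the rocket just before the expulsion.

```python
import math

def speed_gain(mass, expelled_mass, rocket_speed, exhaust_speed, observer_speed):
    """Rocket speed gain from momentum conservation, as seen by a moving observer.

    rocket_speed and observer_speed are measured versus the Earth,
    exhaust_speed versus the rocket before the expulsion.
    """
    v_before = rocket_speed - observer_speed      # rocket, seen by the observer
    v_gas = v_before - exhaust_speed              # expelled gas, seen by the observer
    v_after = (mass * v_before - expelled_mass * v_gas) / (mass - expelled_mass)
    return v_after - v_before

ROCKET_SPEED = 7800.0    # m/s, low-orbit speed from the post
EXHAUST_SPEED = 4560.0   # m/s, best chemical exhaust speed from the post

gas_speed_versus_earth = ROCKET_SPEED - EXHAUST_SPEED
gain_seen_from_earth = speed_gain(1000.0, 1.0, ROCKET_SPEED, EXHAUST_SPEED, 0.0)
gain_seen_alongside = speed_gain(1000.0, 1.0, ROCKET_SPEED, EXHAUST_SPEED, ROCKET_SPEED)

print(f"gas still moves forward versus the Earth at {gas_speed_versus_earth:.0f} m/s")
print(f"speed gain seen from Earth: {gain_seen_from_earth:.4f} m/s")
print(f"speed gain seen from alongside: {gain_seen_alongside:.4f} m/s")
```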

-

Understanding the RSM (reluctance synchronous machine)

Enthalpy replied to CasualKilla's topic in Engineering

Maybe... I consider that the torque results from the flux variation per unit angle and from the stator current (summed over several phases, accounting properly for the poles, and so on).

My understanding is that Vagati's rotor offers the same flux variation as the more classical rotor, narrower but plain, and that the change in flux or reluctance happens over the same angle. Then the torque must be essentially the same as for a plain rotor.

The air separation between iron pertaining to different poles is essentially the same, only split in several steps; at least there is no drawback here. It means that the same maximum stator current can be used before the flux ignores the rotor's iron. Though, loss considerations may impose a lower limit on the stator current.

This would lead to the comparison: a small reluctance motor can be more compact than a squirrel cage thanks to lower rotor loss, and Vagati's design reduces the cogging of a reluctance motor.

----------

Improving... The flux varies more quickly than over one pole separation: it takes about one slit separation. Though, only a few slits contribute efficiently at any time, so this gain isn't huge. Then, only one air separation prevents flux leaks. This is why Abb offers only small motors (<400kVA) of this design.

Designing the magnetic circuit so the flux varies quickly with the angle was already known, with pole shoes having several teeth each, but (1) it needed a higher drive frequency (2) cogging was still strong.

Though, how much torque it creates and how big the stator losses are isn't obvious to compare qualitatively with other designs. -

Permanent magnet thought experiment, help with hypothesis.

Enthalpy replied to CasualKilla's topic in Engineering

Nearly paraphrasing Swansont...

The current drops due to the wires' resistance, yes. The flux that you deduce from the current is only the contribution of this current. The flux created by the permanent magnets stays.

And since the coil's behaviour is to oppose flux variations:
It will first prevent the flux of the permanent magnets from entering the coil (if the magnets approach so quickly that the coil achieves it, which is difficult).
As the induced current vanishes, only the flux of the permanent magnets stays.

A superconducting coil would prevent the flux from entering indefinitely... if the induction is small enough for the material. This is used by some gravity gradiometers of astounding sensitivity. -

Spice simulation, was is the current dropping?

Enthalpy replied to CasualKilla's topic in Engineering

I expect Spice to drift less than that. It has many drawbacks, is useless for general electronics, but at least its algorithm is pretty good. Is there a default value for the series resistance of a voltage source, or the series resistance of an inductor? I don't remember in detail. And what does "1m" mean in the pulse parameters? 1mohm and 1H would drop the current by 10% after 100s just as on the plot. -
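The 10% figure follows from the exponential decay of a current in a series R-L loop. A quick sketch, assuming the 1mohm and 1H guessed above and no other element in the loop:

```python
import math

def remaining_current_fraction(resistance_ohm, inductance_h, time_s):
    """Fraction of the initial current left in a shorted R-L loop: exp(-R*t/L)."""
    return math.exp(-resistance_ohm * time_s / inductance_h)

fraction_left = remaining_current_fraction(1e-3, 1.0, 100.0)
time_constant = 1.0 / 1e-3   # L/R in seconds

print(f"time constant L/R: {time_constant:.0f} s")
print(f"current left after 100 s: {fraction_left:.3f}")
print(f"drop: {100.0 * (1.0 - fraction_left):.1f} %")
```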

I've put an example for a wind turbine at deep sea there http://www.scienceforums.net/topic/59338-flywheels-store-electricity-cheap-enough/#entry860512 feasible, but flywheels are better.

-

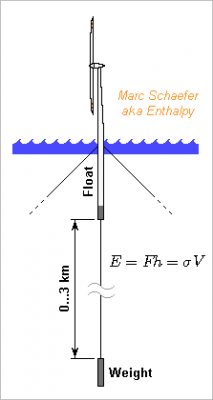

Lifting a weight stores energy as well; a wind turbine at deep sea offers 3km amplitude. Peak 5MWe averages to 2MW; providing the 2MW over one calm day needs 173GJ, or 5900t that descend 3km, still feasible. The cable that lifts the weight needs half the mass of a flywheel, but this storage also needs a weight and a float (D=8m h=116m for 5900t only). Low speed, scaling, sea operations don't help either. Wherever possible, a flywheel is better. Marc Schaefer, aka Enthalpy
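The figures above can be retraced in a few lines. This sketch assumes g = 9.81 m/s², neglects the buoyancy of the weight itself and any conversion loss, and treats the float as a plain cylinder.

```python
import math

G_ACCEL = 9.81   # m/s^2, assumed

def stored_energy(power_w, duration_s):
    """Energy to deliver a constant power over a duration, in joules."""
    return power_w * duration_s

def mass_for_energy(energy_j, drop_height_m):
    """Mass whose descent over drop_height_m releases energy_j: E = m*g*h."""
    return energy_j / (G_ACCEL * drop_height_m)

def cylinder_volume(diameter_m, height_m):
    """Volume of a cylindrical float, in m^3."""
    return math.pi * (diameter_m / 2.0) ** 2 * height_m

energy = stored_energy(2e6, 24 * 3600)   # 2 MW over one calm day
weight_mass = mass_for_energy(energy, 3000.0)
float_volume = cylinder_volume(8.0, 116.0)

print(f"energy for one calm day: {energy / 1e9:.0f} GJ")
print(f"weight descending 3 km: {weight_mass / 1e3:.0f} t")
print(f"float volume (D=8m, h=116m): {float_volume:.0f} m^3")
```

The float volume of roughly 5800 m³ displaces about as many tons of water as the weight it must carry, which matches the dimensions quoted.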

-

At identical density, mass *4 and radius *cuberoot(4) imply a gravitation energy per mass unit *2.52 and an escape velocity *1.59 as compared with the Earth, agreed.

Escaping the Earth takes a mean 11186m/s plus some 1300m/s due to the limited acceleration at the beginning. 7367m/s more is a hard penalty: with hydrogen and oxygen ejected at 4500m/s, it takes two more stages and - depending on the stages' inert mass - 6.0* more lift-off mass. That wouldn't stop Mankind but is a big difficulty.

The atmosphere brakes a rocket very little, and a big one far less. Its real drawbacks are the potential bending moments and vibrations, which force the rocket and payload to be built heavier and to go too slowly through the lower atmosphere, giving gravitation more time to waste some propulsive force.

Up to now, our atmosphere brings nothing useful to reach orbit. Many launcher attempts wanted to use it (Skylon presently) but basically, getting a push from an aerobic combustion at 2km/s (M6) is quite difficult; this may be 1.5km/s more efficient than transporting the equivalent liquid oxygen, good and fine - but the added complexity saves only 1.5km/s over the 9.5km/s to the low orbit. For that, I wouldn't afford exotic engines, wings, a landing gear, the extra mass, the conflicting requirements of plane and rocket, and so on.

----------

Bigger planets retaining light elements: from popular science papers, this knowledge isn't really established. The magnetic field seems important to avoid the dissociation of vapour by ionizing particles, followed by the loss of hydrogen.

Before extrasolar planets were discovered, our solar system led us to believe that big planets are gaseous and far from the star, small ones rocky and near. This has changed, and the explanation by the star ripping the light elements away has weakened.

One should also remember that the composition of our "gaseous" planets at depth is largely unknown. And up to now, models for protoplanetary systems ignore the segregation of elements and isotopes by gravitation.
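The scaling factors at identical density used in these planet comparisons all follow from the mass factor alone. A minimal sketch, assuming a homogeneous sphere (no compression by pressure):

```python
import math

def scale_planet(mass_factor):
    """Scaling versus Earth for a homogeneous planet of identical density."""
    radius = mass_factor ** (1.0 / 3.0)            # R ~ M^(1/3)
    return {
        "radius": radius,
        "surface_gravity": mass_factor / radius ** 2,      # g ~ M / R^2
        "energy_per_mass": mass_factor / radius,           # G*M/R
        "escape_speed": math.sqrt(mass_factor / radius),   # sqrt(2*G*M/R)
    }

for factor in (4.0, 8.0, 64.0):
    s = scale_planet(factor)
    print(f"mass *{factor:g}: radius *{s['radius']:.2f}, "
          f"gravity *{s['surface_gravity']:.2f}, "
          f"energy *{s['energy_per_mass']:.2f}, "
          f"escape speed *{s['escape_speed']:.2f}")
```

Mass *4 gives the 2.52 and 1.59 of this post, mass *8 gives gravity *2, and mass *64 gives the 4g case.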

-

Understanding the RSM (reluctance synchronous machine)

Enthalpy replied to CasualKilla's topic in Engineering

I have the strongest doubts that Vagati's design increases the torque. By the way, his patent doesn't even suggest that. But you believe what you want. -

Atmospheric humidity for water production.

Enthalpy replied to Robert Clark's topic in Classical Physics

Hi Bob, nice to see you again!

Different water users have varied cost constraints. Drinking water can be expensive, washing water less so, irrigation water must be very cheap. To my understanding, the first two uses have enough supply - easy enough, since some beverages are even imported from another continent and stay affordable. The hard part is to get water in proper amounts and at a proper price for agriculture.

Air does contain humidity in sufficient amount even when it doesn't rain, fully agree. But how much does it cost to extract it? Even moving air has a cost, cooling it even more. I'd like to see such estimates.

Water is extracted from air in some towns of the Andes, I believe in Perú. The process is made affordable by exploiting favourable conditions: in the morning, humidity condenses as fog in the air but doesn't rain down, and a gentle wind blows, so people extend big nets where the droplets adhere and converge to a collector. One family net is about 100m wide and 1m high from memory; it's purposely designed (a punched film, more or less) to catch the fog efficiently and to transport the water to one point. Not very different from what local vegetables do.

California is said to have foggy areas in the morning, so it should work there also, supposedly cheaper than active condensation processes. The question is: how much would it produce, how much is needed? -

Write some heights of mercury on both sides and of water on one side. Compute the hydrostatic pressure at the bottom. Both sides create the same pressure, deduce the necessary water height, remembering that higher mercury at one side means lower at the other.
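The recipe above can be written out for a U-tube of constant cross-section. This is only a sketch: the densities are round textbook values and the water height is a hypothetical input, since the original exercise isn't quoted here.

```python
RHO_WATER = 1000.0      # kg/m^3, assumed
RHO_MERCURY = 13600.0   # kg/m^3, assumed round value

def mercury_level_difference(water_height_m):
    """Mercury level difference that balances a water column on one side.

    Equal pressure at the water/mercury interface level:
    rho_water * h_water = rho_mercury * difference
    """
    return water_height_m * RHO_WATER / RHO_MERCURY

def water_height_for_rise(mercury_rise_m):
    """Water height needed so the mercury rises by mercury_rise_m on the other side.

    A rise on one side means an equal drop on the water side,
    so the level difference is twice the rise.
    """
    return 2.0 * mercury_rise_m * RHO_MERCURY / RHO_WATER

difference = mercury_level_difference(0.136)   # hypothetical 136 mm of water
needed_water = water_height_for_rise(0.005)    # hypothetical 5 mm rise

print(f"136 mm of water -> mercury levels differ by {difference * 1e3:.1f} mm")
print(f"5 mm mercury rise needs {needed_water * 1e3:.0f} mm of water")
```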

-

Could An Antimatter Rocket Be Used On Earth?

Enthalpy replied to Future JPL Space Engineer's topic in Physics

But in what amount? -

Understanding the RSM (reluctance synchronous machine)

Enthalpy replied to CasualKilla's topic in Engineering

It is my understanding, stated by the inventor's patent as well, that the improvement is a smoother operation. In other aspects, I believe the performance is pretty much the same, whether the rotor laminations are solid or now slotted.

What Alfredo Vagati has nicely seen is that several thinner slots add their reluctance as if they were the traditional thicker spacing between the pole shoes, so they keep its performance, well done. And then, a well-chosen number of thinner slots and shoes (narrower than one slot) permits a smoother operation. This number shall not equal the number of slots at the stator; quite the opposite, it must differ, and the patent gives some rules of choice.

The idea behind smooth operation is that the iron teeth at the stator and at the rotor do not all meet and leave one another at the same time, which would be the case with equal numbers. As far as possible, different pairs of stator+rotor teeth shall meet and leave at angles regularly spread over one rotation.

You know the Vernier scale on calipers? On the third picture there http://en.wikipedia.org/wiki/Vernier_scale the mover has 10 divisions on the length of 9 stator divisions. This spreads the coincidences of stator and mover divisions regularly over one period. Same trick; now at the motor, the cogging torques of teeth pairs don't add because they appear at different angles.

This one idea wasn't new for other motors. Salient-pole DC motors can have 3 or 5 pole shoes at the rotor for 2 poles at the stator, to cog a little less. Squirrel cage motors also have a number of rotor bars in a complicated relation with the number of pole shoes at the stator.

A first attempt would be relatively prime numbers at the stator and rotor, but electromagnetism imposes some restrictions on the choice. The patent tells which numbers are good. http://www.google.com/patents/US5818140 -
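The Vernier argument can be checked by brute force. This sketch idealises each tooth as a point on the circumference and ignores tooth width and every electromagnetic constraint, so it illustrates only the counting; the tooth numbers are examples, not values from the patent.

```python
from collections import Counter
from fractions import Fraction

def coincidences(stator_teeth, rotor_teeth):
    """Map each rotor angle (fraction of a turn) to the number of tooth pairs aligned there."""
    counts = Counter()
    for i in range(stator_teeth):
        for j in range(rotor_teeth):
            # rotor angle at which rotor tooth j faces stator tooth i
            angle = (Fraction(i, stator_teeth) - Fraction(j, rotor_teeth)) % 1
            counts[angle] += 1
    return counts

for stator_teeth, rotor_teeth in ((10, 10), (10, 9), (12, 8)):
    c = coincidences(stator_teeth, rotor_teeth)
    simultaneous = set(c.values())
    print(f"{stator_teeth} and {rotor_teeth} teeth: {len(c)} distinct angles per turn, "
          f"{simultaneous} pair(s) aligned at each")
```

Equal numbers make all pairs align together at few angles; relatively prime numbers give one pair at a time at many evenly spread angles (the count per angle is the greatest common divisor).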

Why Radiation, Convection & Conduction

Enthalpy replied to Atomic_Sheep's topic in Classical Physics

Thermodynamics theorizes equilibrium but is nicely misused outside any equilibrium as well... Consider our atmosphere. Its "equilibrium" is not isothermal. It's a convective "equilibrium", where the Sun's heat arrives mostly at the bottom and escapes mostly at the top. Thermodynamics permits us to compute the mean temperature gradient over the altitude. -
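As an illustration of such a computation, here is the simplest case: the gradient of dry air rising adiabatically, g/cp. It is only a sketch with assumed round values for g and cp; the observed mean gradient is smaller (about 6.5 K/km in the standard atmosphere), mainly because condensing water vapour releases heat.

```python
G_ACCEL = 9.81        # m/s^2, assumed
CP_DRY_AIR = 1005.0   # J/(kg*K), assumed specific heat at constant pressure

def dry_adiabatic_lapse_rate(gravity=G_ACCEL, cp=CP_DRY_AIR):
    """Temperature drop per metre of altitude for dry convecting air: g / cp."""
    return gravity / cp

lapse_k_per_km = 1000.0 * dry_adiabatic_lapse_rate()
print(f"dry adiabatic gradient: {lapse_k_per_km:.2f} K/km")
```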

Could An Antimatter Rocket Be Used On Earth?

Enthalpy replied to Future JPL Space Engineer's topic in Physics

I've seen some papers for space probe propulsion. Nothing useable in any foreseeable future, sure. -

Even if it were permanent, a different possibility would have been the interaction energy, which lets particles weigh together less than if separated. This tends to be more important at smaller scale.

-

could matter be converted into light?

Enthalpy replied to TheThing's topic in Modern and Theoretical Physics

Since every energy form increases mass, every single time light is emitted, the emitter gets lighter, and every single time light is absorbed, the absorber gets heavier - for instance because it gets warmer. -
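How small the mass change is can be seen from m = E/c². A short sketch; the example of warming 1 kg of water by 1 K (about 4186 J) is mine, chosen only for scale.

```python
C_LIGHT = 299_792_458.0   # speed of light, m/s

def mass_equivalent(energy_j):
    """Mass corresponding to an energy: m = E / c^2, in kg."""
    return energy_j / C_LIGHT ** 2

per_joule = mass_equivalent(1.0)
warmed_water = mass_equivalent(4186.0)   # 1 kg of water warmed by 1 K, assumed heat capacity

print(f"1 J of absorbed light adds {per_joule:.3e} kg")
print(f"1 kg of water warmed by 1 K gets {warmed_water:.2e} kg heavier")
```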

And I learned the hard way that dissimilar metals are not the answer - despite being written and taught universally. My answer presently is that you need at least one non-galling alloy, or better both, and pairing has no effect at all (well, it's not just my answer; two teams investigated galling and both tell the same). I experienced titanium (Ti-Al6V4, not the least bit of Cr in it) gall horribly against hard chromium (no mentionable quantity of Ti in it). Which also disproves the hardness theories, as both parts were nicely hard. But you can run bronze against bronze, just fine.

-

One argument against a neutron composed of a proton plus an electron (...that idea was fashionable looooooong ago) is beta plus radioactivity, where the proton decomposes into a neutron plus a positron (positive electron, or antielectron) and a neutrino. It happens with nuclei that have too many protons and too few neutrons. In that case, the mass difference between the older and newer nuclei favours the transformation in this direction. Which implies that all protons and neutrons in the nucleus have an influence; it's not a matter of one neutron or one proton alone that decomposes. Well, this was already clear from the neutron's stability in many nuclei, while a lone neutron decomposes.
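The role of the mass difference can be made explicit with the rest energies of the free particles. A minimal sketch with rounded values in MeV and the neutrino mass neglected; the nuclear mass differences in the last lines are hypothetical inputs, not data for real nuclei.

```python
M_PROTON = 938.272     # MeV, rounded
M_NEUTRON = 939.565    # MeV, rounded
M_ELECTRON = 0.511     # MeV, rounded (same for the positron)

def q_free_neutron_decay():
    """Energy released by n -> p + e- + antineutrino, in MeV."""
    return M_NEUTRON - M_PROTON - M_ELECTRON

def q_free_proton_beta_plus():
    """Energy balance of p -> n + e+ + neutrino for a lone proton, in MeV."""
    return M_PROTON - M_NEUTRON - M_ELECTRON

def q_beta_plus_in_nucleus(nuclear_mass_difference_mev):
    """Energy released when a whole nucleus emits a positron.

    nuclear_mass_difference_mev: parent minus daughter nuclear mass (hypothetical input).
    """
    return nuclear_mass_difference_mev - M_ELECTRON

print(f"lone neutron decay: Q = {q_free_neutron_decay():+.3f} MeV (allowed)")
print(f"lone proton beta plus: Q = {q_free_proton_beta_plus():+.3f} MeV (forbidden)")
for difference in (0.3, 2.0):   # hypothetical nuclear mass differences, MeV
    q = q_beta_plus_in_nucleus(difference)
    verdict = "allowed" if q > 0 else "forbidden"
    print(f"nuclear mass difference {difference} MeV: Q = {q:+.3f} MeV ({verdict})")
```

Only the masses of the complete nuclei decide, which is the point made above: a lone proton cannot do it, a proton inside a suitable nucleus can.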