Everything posted by studiot

-

Youtube says the 2nd Law is Broken.

Only if we accept your changing my post to the specific value of work you introduced. If you can only work with that particular figure, rather than the general one I introduced, then we can use your w/2. However, that leads to what appears to me to be a self-conflicting pair of statements. How can we have "increased its entropy overall by W(T2-T1/2)/T1T2" and also have "there isn't a change in entropy"? Please explain. ... which I think you need to gracefully withdraw. No shame. Just own the error. I can't see the connection between this and the first example, designed specifically to show why the process needs to be cyclic to obey the second law (as stated by its originators, as I have already posted), and the second example, which might have been put better but was designed to show something else. A particle bouncing back and forth in a perfectly elastic manner along a predefined track suffers no change in entropy. You have still not stated the version of the second law you wish to employ, despite several requests. That is pretty rude in my opinion.

-

Youtube says the 2nd Law is Broken.

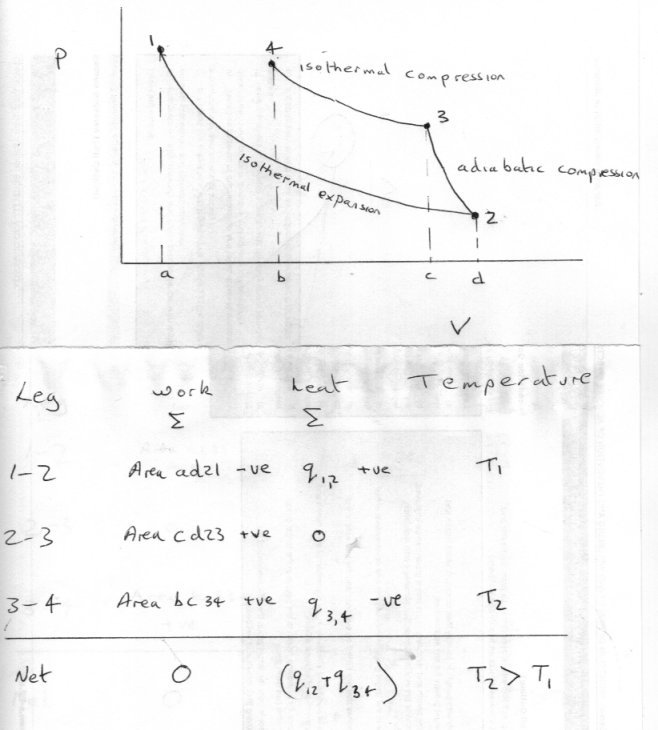

dS = dQrev/T by definition. Your process may be ideally reversible, but it absolutely is not isentropic other than the stage you call 'adiabatic compression'. If you also called this stage 'isentropic compression' it may help alert you to the fact that isothermal compression processes are very far from isentropic. So if you've drawn inferences from this line of thinking that you believe will help me with my box problem (I no longer have one), I fear that you have managed to confuse yourself. Read it again properly and post the extract where I also called this stage isentropic. If I did that, why do you think I allocated q1,2 to stage 1 - 2 and q3,4 to stage 3 - 4, resulting in a net heat change? So the system entropy changes are [math]\Delta {S_{3,4}} = \frac{{{q_{3,4}}}}{{{T_2}}}[/math] and [math]\Delta {S_{1,2}} = \frac{{{q_{1,2}}}}{{{T_1}}}[/math] What I said was that the net w = 0.

-

Should Police Departments Be Given More Money?

I have a question about the bar chart. I don't know how the state and local funds are raised in your chart. In the UK much of the 'local' spending is provided by central government; only some is raised locally. Can you say how this works in other countries? I agree. +1 It is a knotty problem.

-

Youtube says the 2nd Law is Broken.

To continue. So how does my process stack up against classical statements? Well, since the process does transfer heat from a colder body to a warmer one, it is a good job that it is not a cyclic process and so does not satisfy all the conditions of the classical second law. In other words the Second Law (classical formulation) should not be applied. That example is exactly why the originators included the cyclic requirement. But of course it is unsatisfactory not to be able to apply the Second Law to non-cyclic processes; there are many such in Chemistry. This is where the Chemists' version comes in useful. The key to this is now to consider the system and surroundings together as one 'Universe'. This may be applied to my one shot reversible process. The process considering the reordering of particles in a box is also a one shot process. Considering my example, the particle track is entirely reversible so there is no change of entropy. However you are wrong to say that the box must be included as part of the system. The system can be anything I want it to be so long as I can draw (define) a boundary round it. Of course an injudicious choice of system and boundary can make calculations difficult or even impossible, as you are finding out with the box. A System is whatever is inside the boundary. The boundary is not part of the system. In the box case I choose the box as the boundary. Thermodynamics provides the exchange variables of work and heat, which are not state variables, to connect the system to its surroundings across the boundary. I would recommend comparing a thermodynamic discussion of the latent heat of fusion of a pure substance with the multiparticle case for the box.

-

Youtube says the 2nd Law is Broken.

What a pity you are preventing yourself from seeing the answer to your original question, which is contained in the answer to my question. I have never claimed it breaks any laws, (quite the reverse in fact if you read my post properly) or that it will provide a supply of free energy. All I asked was how it fits with the second law (which is what you did).

-

Youtube says the 2nd Law is Broken.

Wow, what a lot of invective just to dodge answering a question, similar to the one you asked in the OP. The joke is that it is based on one from a textbook entitled Thermodynamics for Chemical Engineers written by three professors from the Dept of Chem Eng at Imperial College.

-

Youtube says the 2nd Law is Broken.

If you don't know how to answer my question, which did not mention a cycle or cycles, then please just say so and don't mess around wasting everyone's time. If there was anything unclear in my description please just ask and I will amplify the point.

-

Youtube says the 2nd Law is Broken.

https://en.wikipedia.org/wiki/Constantin_Carathéodory So consider the following process as outlined on the PV diagram, where the working fluid moves from state 1 to state 4 in three reversible stages or legs. Stage 1 - 2: heat is accepted via an isothermal expansion at the lower temperature T1 and expansion work is therefore done. Stage 2 - 3: work is again done, with no heat exchanged, during an adiabatic compression, but the work is of the opposite sign to that of the first leg. Stage 3 - 4: heat is now rejected at a higher temperature T2 and work done in an isothermal compression to reach a point where the combined work of the second and third stages equals that of the first. Thus exactly zero external work is performed, but a quantity of heat is transferred from a lower temperature reservoir to a higher temperature reservoir. So can you explain why this does not contravene the Second Law?
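For anyone who wants to put numbers on the three legs, here is a minimal bookkeeping sketch. It assumes an ideal monatomic gas as the working fluid, which the post does not specify, and the temperatures, the expansion ratio and the function name `three_stage_process` are illustrative choices only.

```python
import math

R = 8.314  # gas constant, J/(mol K)


def three_stage_process(T1, T2, expansion_ratio, n=1.0, cv=1.5 * R):
    """Heat, work and entropy for the three reversible legs (ideal gas assumed)."""
    # Leg 1-2: isothermal expansion at T1; for an ideal gas dU = 0, so q = w.
    w12_by_gas = n * R * T1 * math.log(expansion_ratio)
    q12_in = w12_by_gas

    # Leg 2-3: adiabatic compression from T1 to T2; all the work raises U.
    w23_on_gas = n * cv * (T2 - T1)

    # Leg 3-4: isothermal compression at T2, stopped where net work is zero.
    w34_on_gas = w12_by_gas - w23_on_gas
    if w34_on_gas <= 0:
        raise ValueError("expansion ratio too small to leave work for leg 3-4")
    q34_out = w34_on_gas

    # Volumes relative to V1 = 1, used only to cross-check the entropy change.
    V2 = expansion_ratio
    V3 = V2 * (T1 / T2) ** (cv / R)               # reversible adiabat
    V4 = V3 * math.exp(-q34_out / (n * R * T2))   # isothermal compression

    return {
        "net_work": w12_by_gas - w23_on_gas - w34_on_gas,
        "q12_in": q12_in,
        "q34_out": q34_out,
        "dU": n * cv * (T2 - T1),
        "dS_system_from_heats": q12_in / T1 - q34_out / T2,
        "dS_system_from_states": n * cv * math.log(T2 / T1) + n * R * math.log(V4),
        "dS_reservoirs": -q12_in / T1 + q34_out / T2,
    }


result = three_stage_process(T1=300.0, T2=400.0, expansion_ratio=3.0)
for name, value in result.items():
    print(f"{name:>22}: {value: .3f}")
```

Under these assumptions the fluid finishes at T2 rather than back at state 1, so the difference q12 - q34 stays in the fluid as internal energy, and the entropy gained by the fluid is matched by the entropy lost by the two reservoirs.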

-

Youtube says the 2nd Law is Broken.

There declares the man who also writes "Is it? Never heard of it." in the same post. This was a far better statement as it leads to yet another version of the second law (the Chemist's version). Of course there were at least three different versions of the second law by those who originally wrote it, and they are the subject of my first line of enquiry. However since my kinetic line was so ill received I don't know whether to bother. The original three statements, which are the classical ones most used by Chemical Engineers, contain an extra condition that is all too often forgotten, and you seem to have forgotten it this time. It is that condition that I wanted to discuss first, before going off down a kinetic/statistical track.

-

Attempting to create a generalized graph of mathematics

Yeah it is certainly a big project, with a capital B that will take a lot of work. I am genuinely worried about this idea that one thing sits on another. This is true for some things, but for most subject areas you need to know a bit of other areas to do anything. When you have developed the new subject a bit you often then find you need to/can develop the 'supporting' subject a bit further and in turn can progress the top one. That is how we learn mathematics (and other technical subjects). What did you think of my tiling v tree comment ? I note Ghideon's picture does some of that.

-

Youtube says the 2nd Law is Broken.

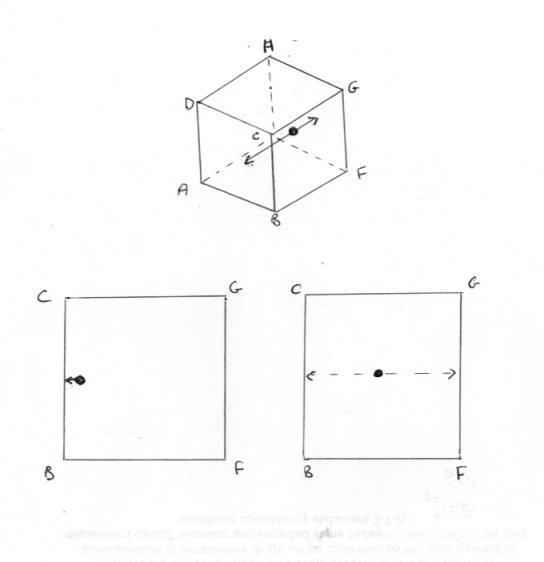

Of course it is possible. There are two lines of inquiry I was intending to pursue. Swansont raised one of them before I did so I will say something on that one first, although the better and natural order would be to consider the classical approach first. You did not reply to my question: which version of the second law are you considering? The reason for this will become apparent in due course. So let us consider the activity of a single particle (molecule if you like). But let us simplify matters even further. Let us place the particle in a cubical box so that it bounces normally back and forth between opposite faces ABCD and EFGH. As a result of this it always follows the same track, as in the third sketch. The consequence of this is that no force is exerted on any of the other four faces of the box. Now the thermodynamic pressure is defined as the average force of impact on all the faces of the box, being the same in all directions. Yet only two of the six faces experience any pressure force at all. Further, when the particle is somewhere between faces, no face at all experiences a pressure force. Pressure is an intensive variable, which should be the same throughout the box or it is not defined. What about volume, an extensive variable? Well the particle, being confined to its track, cannot access most of the volume of the box. So is volume a defined property either? Or is this a question of these Carathéodory microstates in his definition of the second law? Finally, how can a particle striking a boundary wall be in equilibrium?
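To put rough numbers on the single-particle track, here is a small sketch comparing the time-averaged force on each face with the value the usual isotropic kinetic-theory formula P = m v^2 / (3V) would give for one particle. The mass, speed, box size, function names and the labels of the four side faces are assumptions made for illustration.

```python
import math


def track_face_forces(mass, speed, side):
    """Time-averaged force on each face for a particle bouncing normally
    between faces ABCD and EFGH of a cubical box of edge length `side`."""
    momentum_per_hit = 2.0 * mass * speed      # elastic reversal at the wall
    time_between_hits = 2.0 * side / speed     # there and back to the same face
    struck = momentum_per_hit / time_between_hits
    forces = {"ABCD": struck, "EFGH": struck}
    # The other four faces (labels are hypothetical) are never struck.
    for label in ("side_1", "side_2", "side_3", "side_4"):
        forces[label] = 0.0
    return forces


def isotropic_face_force(mass, speed, side):
    """Force per face if the same particle gave P = m v^2 / (3 V) on every face."""
    pressure = mass * speed ** 2 / (3.0 * side ** 3)
    return pressure * side ** 2


forces = track_face_forces(mass=4.65e-26, speed=500.0, side=0.1)
isotropic = isotropic_face_force(mass=4.65e-26, speed=500.0, side=0.1)
mean_over_faces = sum(forces.values()) / len(forces)
print(forces)
print("isotropic value per face:", isotropic)
print("mean over the six faces: ", mean_over_faces)
```

The mean over the six faces reproduces the isotropic figure, but no individual face actually experiences it: two faces see three times that value and four see nothing, which is the point about pressure not being properly defined here.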

-

Youtube says the 2nd Law is Broken.

I labelled my points firstly and secondly to help those who perhaps do not read postings carefully enough. What is the difference between accepting firstly in your own mind but saying nothing about it, and ignoring it? Firstly is the key to the fluctuations you seem so keen to discuss. Secondly is about the status of these fluctuations. One thing that would be useful would be to state the version of the Second Law we are meant to be comparing the situations described to. The issue is not to explain it but to ask how it compares with the Second Law. Consider this. We rely on the observation that throughout the Universe electrons will be in the appropriate place and energy level for bonding and other activity (when required), despite the probability that they will be somewhere else at the appropriate time interval. When you consider the number of instances of such activity we have observed, the probability of them doing something else must be incredibly small. Isn't the kinetic theory of gas molecules a coarser example of the same statistics?

-

Youtube says the 2nd Law is Broken.

I did read it, perhaps not carefully enough. But I note that this thread has jumped around a good deal and plenty of additional material has been introduced, but not in any coherent way. I would also observe that I only added a couple of very small points to swansont's original response, although I consider my point important. You do not seem to have addressed either of them. I now find myself in the situation of being puzzled as to whether to proceed with classical macroscopic thermodynamics, where the typical version of the second law is being misrepresented by your references. System entropy can and does decrease in appropriate circumstances. Or whether to look at the misapplication of statistics in your youtube reference. Misapplication is one word; bullshit or baloney are others for those authors.

-

Attempting to create a generalized graph of mathematics

I don't know if you have heard of David Hilbert but he tried to do just this. The axiomatisation of Mathematics. You should read about his fate. https://en.wikipedia.org/wiki/David_Hilbert

-

Attempting to create a generalized graph of mathematics

Again some history (of mathematics) books publish timelines. These can also be a valuable source of information and inspiration.

-

Youtube says the 2nd Law is Broken.

A couple of things to add to swansont's excellent post. Firstly, thermodynamics largely ignores the time variable. In particular it says nothing about how long a system will remain in a given state or how long it will take to reach that or another state. So these so-called second law violations are 'instantaneous' and short-lived, but the second law always wins on a time-averaged basis. Secondly, what makes you think these are actually formal 'states'? Formal states have state variables that are properly defined. You cannot apply most of classical thermodynamics to improperly defined states.

-

Attempting to create a generalized graph of mathematics

I think I get the general idea. +1 for ambition, I will remember to log on again in 3020 to find out how you got on. Meanwhile here are a few thoughts. If you have access to a library, look at the contents pages of compendia of mathematics books. I have a couple by that name, one by Manzel (2 vols) and one by Meyler and Sutton. There is also the Princeton Companion to Mathematics and the Cambridge Encyclopedia of Mathematics. The contents pages should give you some subject headings to think about. Also some authors publish dependencies or dependency diagrams such as "chapters 4 - 7 should be read before chapter 11", though this is more common in Engineering than Mathematics. However such information from either subject could be very useful, as it shows what depends on what. As regards the subject areas themselves I suggest you don't use the tree analogy. This requires that the 'branches' are separate areas or subjects. In truth there is considerable overlap and I don't think there is a single 'branch' that could stand alone by itself. For instance, although Geometry does not require measurement, you could not do Geometry without numbers even for shape and form. How else could you distinguish triangles, squares, pentagons, hexagons etc? So I suggest you go for a tiled presentation, perhaps a bit more formal than in Ghideon's diagram (+1 for finding that). With suitable overlap or overlay you can show the interactions. Go well in your endeavour.
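As a small illustration of recording "read this before that" information, here is a sketch using Python's standard `graphlib` module. The chapter numbers come from the example quoted above; the subject names and their prerequisites are hypothetical placeholders, not a claim about how mathematics is actually organised.

```python
from graphlib import TopologicalSorter

# Each key maps to the set of items that should be read first.
# Only the "chapter 11 needs chapters 4 - 7" entry comes from the post;
# the subject entries below are made-up examples of overlapping prerequisites.
prerequisites = {
    "chapter 11": {"chapter 4", "chapter 5", "chapter 6", "chapter 7"},
    "geometry": {"numbers"},
    "calculus": {"numbers", "geometry"},
}

# static_order() yields every item after all of its prerequisites.
reading_order = list(TopologicalSorter(prerequisites).static_order())
print(reading_order)
```

Because an item may list several prerequisites, this is a general directed graph rather than a tree, which is closer to the overlap described above. If two areas are each recorded as needing the other, `static_order()` raises `graphlib.CycleError`, so genuinely mutual dependence would need a different representation.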

-

Will entropy be low much of the time?

I do not, and never have, disagreed with your outlining of basic mathematical set theory in respect of the word 'partition'. This is one reason why I have tried to avoid using the word, since its use in classical thermodynamics is somewhat different. However the devil is in the detail as always. You are quite right to identify STRUCTURE and to mark out differences between sets with the same Mi. This is the point I have been trying to make. However you have missed one important point. Mathematical partitioning of Mi (is based on) equipartition and implicitly assumes the 'equipartition theorem' of thermodynamics. You have also mentioned disjoint partitions, which is important in the mathematical statistics of this. STRUCTURE, as observed in the physical world, creates some stumbling blocks to this. I was hoping to introduce this in a more measured way, but you have jumped the gun. As I said before, we both mentioned Turing, so suppose the tape running through the machine includes the following sequence ........1 , 1 , 1, 0, 0, 0...... STRUCTURE includes the possibility that the first 1 in that sequence can affect the entry currently under the inspection viewer of the machine. Disjointness requires that it cannot. So a Turing machine cannot analyse that situation. Nor can it arise in information technology, whose partitions are disjoint. The anomalous behaviour of Nitrogen etc is an example of this, as already noted. The interesting behaviour of Nitric Oxide, on the other hand, provides an example of genuine statistical two state system behaviour. Finally, I do hope you are not trying to disagree with Carathéodory. However you come at it, statistically or classically, you must arrive at the same set of equations. And you seem to be disagreeing with my classical presentation because it is much shorter than the same conclusion reached in the statistical paper you linked to?

-

Will entropy be low much of the time?

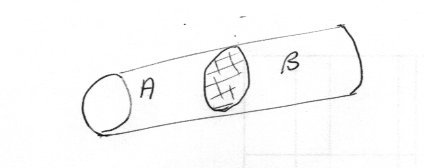

I'll just answer this one for now since it is an example of Carathéodory's formulation of the Second Law. You have correctly anticipated part of my answer. I do think our discussion is beginning to get somewhere now. +1 Perhaps you have come across this? Anyway here is an analysis of the system. Suppose that when the piston is at the position shown, the system is in equilibrium. So the left hand chamber equilibrium volume is VA and the right hand volume VB. For the subsystems (partitions in your parlance) nearby states have volumes (VA + dVA) and (VB + dVB). So their entropy changes are [math]d{S_A} = \frac{{d{E_A}}}{{{T_A}}} + \frac{{{P_A}}}{{{T_A}}}d{V_A}[/math] and [math]d{S_B} = \frac{{d{E_B}}}{{{T_B}}} + \frac{{{P_B}}}{{{T_B}}}d{V_B}[/math] But [math]d{E_A} = - {P_A}d{V_A}[/math] and [math]d{E_B} = - {P_B}d{V_B}[/math] so [math]d{S_A} = d{S_B} = 0[/math] Therefore [math]dS\left( {system} \right) = d{S_A} + d{S_B} = 0[/math] So the entropy is the same in all positions of the piston. Thank you for the link, I have downloaded the paper for later reading. I am glad you mentioned Turing because his machine is the crux of the difference between information entropy and the entropy of physical systems. So I will come back to this.
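The conclusion that the entropy is the same in all positions of the piston can be checked numerically. This is only a sketch: it assumes ideal monatomic gas in both chambers and that the piston moves quasi-statically, so each chamber follows a reversible adiabat (the same assumption as dE = -P dV in the analysis). The starting volumes, temperatures and function names are illustrative.

```python
import math

R = 8.314  # gas constant, J/(mol K)
CV = 1.5 * R  # molar heat capacity of a monatomic ideal gas (assumption)


def ideal_gas_entropy(n, T, V):
    """Entropy of an ideal gas, up to an additive constant."""
    return n * CV * math.log(T) + n * R * math.log(V)


def adiabatic_temperature(T0, V0, V):
    """Temperature on the reversible adiabat through (T0, V0): T * V**(R/CV) = const."""
    return T0 * (V0 / V) ** (R / CV)


def total_entropy_vs_piston(VA0, VB0, TA0, TB0, shifts, nA=1.0, nB=1.0):
    """Total entropy of chambers A and B for each piston displacement dV."""
    totals = []
    for dV in shifts:
        VA, VB = VA0 + dV, VB0 - dV          # the tube is sealed: dVB = -dVA
        TA = adiabatic_temperature(TA0, VA0, VA)
        TB = adiabatic_temperature(TB0, VB0, VB)
        totals.append(ideal_gas_entropy(nA, TA, VA) + ideal_gas_entropy(nB, TB, VB))
    return totals


shifts = [-0.3, -0.1, 0.0, 0.1, 0.3]
totals = total_entropy_vs_piston(VA0=1.0, VB0=1.0, TA0=300.0, TB0=300.0, shifts=shifts)
for dV, S in zip(shifts, totals):
    print(f"dV = {dV:+.1f}  S_total = {S:.6f}")
```

Every displacement gives the same total, because each chamber's temperature change along its adiabat exactly offsets its volume change in the entropy.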

-

Will entropy be low much of the time?

I don't understand. I quoted directly from your post before your last one. But my comment was a bit cheeky. I simply meant that thermodynamics was developed to enable us to predict (and therefore use) the time evolution of systems, including boxes of gas. It was not a criticism. Salt and pepper are part of the necessary scientific apparatus for this. Take a sheet of paper and shake out some ground pepper over it. Mark where each grain falls on the paper. Dust off the pepper and take a ruler. You will find that a random ruler line matches the position marks of some of the pepper dots. Leylines are not magic. (I believe you recently referred to someone's experiment with random chords.)
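The pepper experiment is easy to try without making a mess. A minimal sketch, assuming a unit square sheet, uniformly scattered dots, ruler lines drawn through two random positions on the sheet, and a small tolerance for what counts as "on the line"; all of these choices and the function name are mine.

```python
import math
import random


def dots_near_random_lines(n_dots, n_lines, tolerance, seed=0):
    """Scatter dots on a unit sheet and count how many lie within
    `tolerance` of each of `n_lines` randomly placed ruler lines."""
    rng = random.Random(seed)
    dots = [(rng.random(), rng.random()) for _ in range(n_dots)]
    counts = []
    for _ in range(n_lines):
        # A ruler line through two random positions on the sheet.
        x1, y1, x2, y2 = (rng.random() for _ in range(4))
        length = math.hypot(x2 - x1, y2 - y1)
        if length == 0.0:
            counts.append(0)  # degenerate line, practically never happens
            continue
        near = 0
        for px, py in dots:
            # Perpendicular distance from the dot to the infinite line.
            distance = abs((x2 - x1) * (y1 - py) - (x1 - px) * (y2 - y1)) / length
            if distance <= tolerance:
                near += 1
        counts.append(near)
    return counts


counts = dots_near_random_lines(n_dots=500, n_lines=200, tolerance=0.005, seed=1)
print("most dots on one line:", max(counts))
print("average dots per line:", sum(counts) / len(counts))
```

With a few hundred dots, an arbitrary line will usually pass close to several of them purely by chance, which is all the leyline observation needs.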

-

Will entropy be low much of the time?

I really don't understand what you are getting at here. I am not saying the thermo partition is the only one. Quite the reverse. That is the whole point about my flats and pigeonholes analogy, or chessboard squares, that you have yet to understand. Perhaps this statement of yours will help, since I am matching the flats/pigeonholes or squares as a definition of particular classes (not of equivalence classes in general but different ones). There is a one to one correspondence between the state structure in thermodynamic systems following Boltzmann's distribution and some information systems. Of course there is; the layout of available 'boxes' follows the same law for both. But the use of this is different and there are other laws which also apply to one or the other individually, which are different. Can you point to an emergent phenomenon in information theory? I can offer you one from the physical world that, as far as I know, has no counterpart in information theory. You have answered my question rather briefly. Can you point to a QM law applied to information theory to produce QM effects in information behaviour? I am listening out for your detailed explanation of the anomalous ionisation energies and its alleged counterpart in information theory. I am sorry if I misunderstood you but that was the impression I gained reading your previous posts. If I did, please consider that others may do as well. However if you now confirm your view that two structures may have some similarities or identities but also some differences, I can happily accept that. My point then becomes: you cannot (necessarily) separate off the differences and declare them identical. Though you may, of course, take advantage of the similarities in using one to model the other. Hopefully the above now puts my view on this into context.
Just as you have said that you didn't say there is only one law, I didn't say that all the laws of thermodynamics are different; I claimed that some are the same and some are different. Can you now offer me the same courtesy? In relation to this, have you heard of the division into "The Relations of Constitution" and "The Conditions of Compatibility"? If not it might pay you to study them a little. They are an important way of analysing things. @Tristan L and @joigus Surely that is the point of Thermodynamics: to model what a box of gas is going to do? However I have not been following the runes example very closely, but perhaps I can offer my 'salt and pepper' set explanation of Leylines here?

-

Will entropy be low much of the time?

Here is another simple problem. Suppose you have a sealed adiabatic tube containing an adiabatic frictionless piston dividing the tube into two chambers, A and B, as shown. Let both sides of the system contain an ideal gas. Discuss the time evolution of the system in terms of entropy.

-

Will entropy be low much of the time?

And I'm sorry to point out that you seem to me to be bent on finding fault with my attempts to explain my principal point to you, rather than understanding the point itself. As I understand your thesis here, you are proposing that there is one and only one Law or rule that applies to your partitions, that due to Boltzmann. However it remains your responsibility to support your thesis, so please explain the anomalous first ionisation energies of Nitrogen, Phosphorus and Arsenic in terms of your proposition.

-

Will entropy be low much of the time?

This is the unanswered question. +1 Consider a system of molecules in some volume. Unless all the molecules have the same speed they do not have the same kinetic energy, and therefore the internal energy is not evenly distributed between the molecules (maximum entropy). So the famous 'heat death' of the universe must be a static (as in unchanging) situation from the point of view of maximum entropy. But this view ignores the fact that there are twin drivers in the thermodynamic world that often pull in opposite directions. The principle of minimum energy can be used to devise a system that will oscillate indefinitely at fixed (maximum) entropy.

-

Will entropy be low much of the time?

Thank you for considering my comments. I'm sorry my analogy was not clear enough for you to understand. So try this one instead. Both chess and draughts are played on the same board. But they are very different games with very different rules, and different end results. Events can happen in chess that cannot happen in draughts and vice versa. The same can be said of the partitions of your master set. This carries over to the other part of your answer. There are umpteen relationships in physics where something is proportional to something else. And very often the constant of proportionality carries the units, as in strain (a dimensionless quantity) is proportional to stress. But that does not mean we can disregard the constant and say therefore stress and strain are the same, as with the two entropies. Otherwise you could model one on the other, but if you tried you would obtain conflicting results, just as if you tried to play chess with a draughts set or vice versa. Information entropy and Thermodynamic entropy are not the same, or subject to the same laws (as in the rules of chess and draughts).